Underwriting With 20:20 Vision

Risk data delivered to underwriting platforms via application programming interfaces (API) is bringing granular exposure information and model insights to high-volume risks.

The insurance industry boasts some of the most sophisticated modeling capabilities in the world. And yet the average property underwriter does not have access to the kind of predictive tools that carriers use at a portfolio level to manage risk aggregation, streamline reinsurance buying and optimize capitalization.

Detailed probabilistic models are employed on large and complex corporate and industrial portfolios. But underwriters of high-volume business are usually left to rate risks with only a partial view of the risk characteristics at individual locations, and without the help of models and other tools.

“There is still an insufficient amount of data being gathered to enable the accurate assessment and pricing of risks [that] our industry has been covering for decades,” says Talbir Bains, founder and CEO of managing general agent (MGA) platform Volante Global.

Access to insights from models used at the portfolio level would help underwriters make decisions faster and more accurately, improving everything from risk screening and selection to technical pricing. However, accessing this intellectual property (IP) has previously been difficult for higher-volume risks, where to be competitive there simply isn’t the time available to liaise with cat modeling teams to configure full model runs and build a sophisticated profile of the risk.

Many insurers invest in modeling post-bind in order to understand risk aggregation in their portfolios, but Ross Franklin, senior director of data product management at RMS, suggests this is too late.
“From an underwriting standpoint, that’s after the horse has bolted; that insight is needed upfront when you are deciding whether to write and at what price.”

By not seeing the full picture, he explains, underwriters are often making decisions with a completely different view of risk from the portfolio managers in their own company. “Right now, there is a disconnect in the analytics used when risks are being underwritten and those used downstream as these same risks move through to the portfolio.”

Cut off From the Insight

Historically, underwriters have struggled to access complete information that would allow them to better understand the risk characteristics at individual locations. They must manually gather what risk information they can from various public- and private-sector sources. This helps them make broad assessments of catastrophe exposures, such as FEMA flood zone or distance to coast.

These solutions often deliver data via web portals or spreadsheets and reports, not into the underwriting systems they use every day. There has been little innovation to increase the breadth, and more importantly, the usability of data at the point of underwriting.

“We have used risk data tools but they are too broad at the hazard level to be competitive; we need more detail,” notes one senior property underwriter, while another simply states: “When it comes to flood, honestly, we’re gambling.”

Misaligned and incomplete information prevents accurate risk selection and pricing, leaving the insurer open to negative surprises when underwritten risks make their way onto the balance sheet.
Yet very few data providers burrow down into granular detail on individual risks by identifying what material a property is made of, how many stories it is, when it was built and what it is used for, for instance, all of which can make a significant difference to the risk rating of that individual property. “Vulnerability is critical to accurate underwriting. Hazard alone is not enough. When you put building characteristics together with the hazard information, you form a deeper understanding of the vulnerability of a specific property to a particular hazard. For a given location, a five-story building built from reinforced concrete in the 1990s will naturally react very differently in a storm than a two-story wood-framed house built in 1964 — and yet current underwriting approaches often miss this distinction,” says Franklin. In response to demand for change, RMS developed a Location Intelligence application programming interface (API), which allows preformatted RMS risk information to be easily distributed from its cloud platform via the API into any third-party or in-house underwriting software. The technology gives underwriters access to key insights on their desktops, as well as informing fully automated risk screening and pricing algorithms. The API allows underwriters to systematically evaluate the profitability of submissions, triage referrals to cat modeling teams more efficiently and tailor decision-making based on individual property characteristics. It can also be overlaid with third-party risk information. “The emphasis of our latest product development has been to put rigorous cat peril risk analysis in the hands of users at the right points in the underwriting workflow,” says Franklin. “That’s a capability that doesn’t exist today on high-volume personal lines and SME business, for instance.” Historically, underwriters of high-volume business have relied on actuarial analysis to inform technical pricing and risk ratings. 
“This analysis is not usually backed up by probabilistic modeling of hazard or vulnerability and, for expediency, risks are grouped into broad classes. The result is a loss of risk specificity,” says Franklin. “As the data we are supplying derives from the same models that insurers use for their portfolio modeling, we are offering a fully connected-up, consistent view of risk across their property books, from inception through to reinsurance.”

With additional layers of information at their disposal, underwriters can develop a more comprehensive risk profile for individual locations than before. “In the traditional insurance model, the bad risks are subsidized by the good, but that does not have to be the case. We can now use data to get a lot more specific and generate much deeper insights,” says Franklin.

And if poor risks are screened out early, insurers can be much more precise when it comes to taking on and pricing new business that fits their risk appetite. Once risks are accepted, there should be much greater clarity on expected costs should a loss occur. The implications for profitability are clear.

Harnessing Automation

While improved data resolution should drive better loss ratios and underwriting performance, automation can attack the expense ratio by stripping out manual processes, says Franklin. “Insurers want to focus their expensive, scarce underwriting resources on the things they do best: making qualitative expert judgments on more complex risks.”

This requires them to shift more decision-making to straight-through processing using sophisticated underwriting guidelines, driven by predictive data insight. Straight-through processing is already commonplace in personal lines and is expected to play a growing role in commercial property lines too.
“Technology has a critical role to play in overcoming this data deficiency through greatly enhancing our ability to gather and analyze granular information, and then to feed that insight back into the underwriting process almost instantaneously to support better decision-making,” says Bains. “However, the infrastructure upon which much of the insurance model is built is in some instances decades old and making the fundamental changes required is a challenge.” Many insurers are already in the process of updating legacy IT systems, making it easier for underwriters to leverage information such as past policy information at the point of underwriting. But technology is only part of the solution. The quality and granularity of the data being input is also a critical factor. Are brokers collecting sufficient levels of data to help underwriters assess the risk effectively? That’s where Franklin hopes RMS can make a real difference. “For the cat element of risk, we have far more predictive, higher-quality data than most insurers use right now,” he says. “Insurers can now overlay that with other data they hold to give the underwriter a far more comprehensive view of the risk.” Bains thinks a cultural shift is needed across the entire insurance value chain when it comes to expectations of the quantity, quality and integrity of data. He calls on underwriters to demand more good quality data from their brokers, and for brokers to do the same of assureds. “Technology alone won’t enable that; the shift is reliant upon everyone in the chain recognizing what is required of them.”

Living in a World of Constant Catastrophes

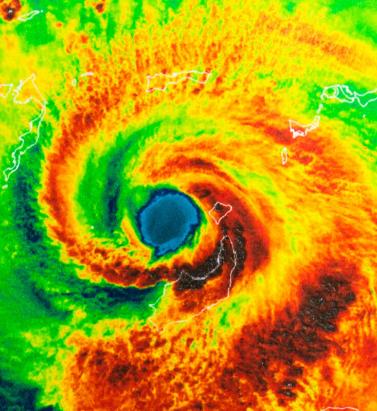

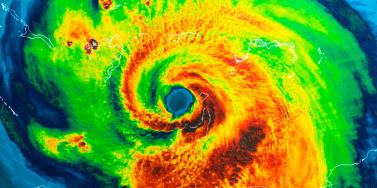

(Re)insurance companies are waking up to the reality that we are in a riskier world and the prospect of ‘constant catastrophes’ has arrived, with climate change a significant driver.

In his hotly anticipated annual letter to shareholders in February 2019, Warren Buffett, the CEO of Berkshire Hathaway and acclaimed “Oracle of Omaha,” warned about the prospect of “The Big One,” a major hurricane, earthquake or cyberattack that he predicted would “dwarf Hurricanes Katrina and Michael.” He warned that “when such a mega-catastrophe strikes, we will get our share of the losses and they will be big, very big.”

The question insurance and reinsurance companies need to ask themselves is whether they are prepared for the potential of an intense U.S. landfalling hurricane, a Tōhoku-size earthquake event and a major cyber incident if these types of combined losses hit their portfolio each and every year, says Mohsen Rahnama, chief risk modeling officer at RMS. “We are living in a world of constant catastrophes,” he says. “The risk is changing, and carriers need to make an educated decision about managing the risk.

“So how are (re)insurers going to respond to that? The broader perspective should be on managing and diversifying the risk in order to balance your portfolio and survive major claims each year,” he continues. “Technology, data and models can help balance a complex global portfolio across all perils while also finding the areas of opportunity.”

A Barrage of Weather Extremes

How often, for instance, should insurers and reinsurers expect an extreme weather loss year like 2017 or 2018? The combined insurance losses from natural disasters in 2017 and 2018 according to Swiss Re sigma were US$219 billion, which is the highest-ever total over a two-year period.
Hurricanes Harvey, Irma and Maria delivered the costliest hurricane loss for one hurricane season in 2017. Contributing to the total annual insurance loss in 2018 was a combination of natural hazard extremes, including Hurricanes Michael and Florence, Typhoons Jebi, Trami and Mangkhut, as well as heatwaves, droughts, wildfires, floods and convective storms.

While it is no surprise that weather extremes like hurricanes and floods occur every year, (re)insurers must remain diligent about how such risks are changing with respect to their unique portfolios. Looking at the trend in U.S. insured losses from 1980–2018, the data clearly shows losses are increasing every year, with climate-related losses being the primary drivers of loss, especially in the last four decades (even allowing for the fact that the completeness of the loss data over the years has improved).

Measuring Climate Change

With many non-life insurers and reinsurers feeling bombarded by the aggregate losses hitting their portfolios each year, insurance and reinsurance companies have started looking more closely at the impact that climate change is having on their books of business, as the costs associated with weather-related disasters increase.

The ability to quantify the impact of climate change risk has improved considerably, both at a macro level and through attribution research, which considers the impact of climate change on the likelihood of individual events. The application of this research will help (re)insurers reserve appropriately and gain more insight as they build diversified books of business.

Take Hurricane Harvey as an example. Two independent attribution studies agree that the anthropogenic warming of Earth’s atmosphere made a substantial difference to the storm’s record-breaking rainfall, which inundated Houston, Texas, in August 2017, leading to unprecedented flooding.
In a warmer climate, such storms may hold more water volume and move more slowly, both of which lead to heavier rainfall accumulations over land.

Attribution studies can also be used to predict the impact of climate change on the return-period of such an event, explains Pete Dailey, vice president of model development at RMS. “You can look at a catastrophic event, like Hurricane Harvey, and estimate its likelihood of recurring from either a hazard or loss point of view. For example, we might estimate that an event like Harvey would recur on average say once every 250 years, but in today’s climate, given the influence of climate change on tropical precipitation and slower moving storms, its likelihood has increased to say a 1-in-100-year event,” he explains.

“This would mean the annual probability of a storm like Harvey recurring has increased more than twofold from 0.4 percent to 1 percent, which to an insurer can have a dramatic effect on their risk management strategy.”

Climate change studies can help carriers understand its impact on the frequency and severity of various perils and throw light on correlations between perils and/or regions, explains Dailey. “For a global (re)insurance company with a book of business spanning diverse perils and regions, they want to get a handle on the overall effect of climate change, but they must also pay close attention to the potential impact on correlated events.

“For instance, consider the well-known correlation between the hurricane season in the North Atlantic and North Pacific,” he continues. “Active Atlantic seasons are associated with quieter Pacific seasons and vice versa. So, as climate change affects an individual peril, is it also having an impact on activity levels for another peril?
Maybe in the same direction or in the opposite direction?” Understanding these “teleconnections” is just as important to an insurer as the more direct relationship of climate to hurricane activity in general, thinks Dailey. “Even though it’s hard to attribute the impact of climate change to a particular location, if we look at the impact on a large book of business, that’s actually easier to do in a scientifically credible way,” he adds. “We can quantify that and put uncertainty around that quantification, thus allowing our clients to develop a robust and objective view of those factors as a part of a holistic risk management approach.” Of course, the influence of climate change is easier to understand and measure for some perils than others. “For example, we can observe an incremental rise in sea level annually — it’s something that is happening right in front of our eyes,” says Dailey. “So, sea-level rise is very tangible in that we can observe the change year over year. And we can also quantify how the rise of sea levels is accelerating over time and then combine that with our hurricane model, measuring the impact of sea-level rise on the risk of coastal storm surge, for instance.” Each peril has a unique risk signature with respect to climate change, explains Dailey. “When it comes to a peril like severe convective storms — tornadoes and hail storms for instance — they are so localized that it’s difficult to attribute climate change to the future likelihood of such an event. But for wildfire risk, there’s high correlation with climate change because the fuel for wildfires is dry vegetation, which in turn is highly influenced by the precipitation cycle.” Satellite data from 1993 through to the present shows there is an upward trend in the rate of sea-level rise, for instance, with the current rate of change averaging about 3.2 millimeters per year. 
Sea-level rise, combined with increasing exposures at risk near the coastline, means that storm surge losses are likely to increase as sea levels rise more quickly.

“In 2010, we estimated the amount of exposure within 1 meter above the sea level, which was US$1 trillion, including power plants, ports, airports and so forth,” says Rahnama. “Ten years later, the exact same exposure was US$2 trillion. This dramatic exposure change reflects the fact that every centimeter of sea-level rise is subjected to a US$2 billion loss due to coastal flooding and storm surge as a result of even small hurricanes.

“And it’s not only the climate that is changing,” he adds. “It’s the fact that so much building is taking place along the high-risk coastline. As a result of that, we have created a built-up environment that is actually exposed to much of the risk.”

Rahnama highlights that because of an increase in the frequency and severity of events, it is essential to implement prevention measures by promoting mitigation credits to minimize the risk. “How can the market respond to the significant losses year after year? It is essential to think holistically to manage and transfer the risk to the insurance chain from primary to reinsurance, capital market, ILS, etc.,” he continues. “The art of risk management, lessons learned from past events and use of new technology, data and analytics will help to prepare for responding to unpredicted ‘black swan’ type of events and being able to survive and minimize the catastrophic losses.”

Strategically, risk carriers need to understand the influence of climate change whether they are global reinsurers or local primary insurers, particularly as they seek to grow their business and plan for the future. Mergers and acquisitions and/or organic growth into new regions and perils will require an understanding of the risks they are taking on and how these perils might evolve in the future.
There is potential for catastrophe models to be used on both sides of the balance sheet as the influence of climate change grows. Dailey points out that many insurance and reinsurance companies invest heavily in real estate assets. “You still need to account for the risk of climate change on the portfolio, whether you’re insuring properties or whether you actually own them, there’s no real difference.”

In fact, asset managers are more inclined to a longer-term view of risk when real estate is part of a long-term investment strategy. Here, climate change is becoming a critical part of that strategy.

“What we have found is that often the team that handles asset management within a (re)insurance company is an entirely different team to the one that handles catastrophe modeling,” he continues. “But the same modeling tools that we develop at RMS can be applied to both of these problems of managing risk at the enterprise level.

“In some cases, a primary insurer may have a one-to-three-year plan, while a major reinsurer may have a five-to-10-year view because they’re looking at a longer risk horizon,” he adds. “Every time I go to speak to a client, whether it be about our U.S. Inland Flood HD Model or our North America Hurricane Models, the question of climate change inevitably comes up. So, it’s become apparent this is no longer an academic question, it’s actually playing into critical business decisions on a daily basis.”

Preparing for a Low-carbon Economy

Regulation also has an important role in pushing both (re)insurers and large corporates to map and report on the likely impact of climate change on their business, as well as explain what steps they have taken to become more resilient.

In the U.K., the Prudential Regulation Authority (PRA) and Bank of England have set out their expectations regarding firms’ approaches to managing the financial risks from climate change. Meanwhile, a survey carried out by the PRA found that 70 percent of U.K.
banks recognize the risk climate change poses to their business. Among their concerns are the immediate physical risks to their business models — such as the exposure to mortgages on properties at risk of flood and exposure to countries likely to be impacted by increasing weather extremes. Many have also started to assess how the transition to a low-carbon economy will impact their business models and, in many cases, their investment and growth strategy. “Financial policymakers will not drive the transition to a low-carbon economy, but we will expect our regulated firms to anticipate and manage the risks associated with that transition,” said Bank of England Governor Mark Carney, in a statement. The transition to a low-carbon economy is a reality that (re)insurance industry players will need to prepare for, with the impact already being felt in some markets. In Australia, for instance, there is pressure on financial institutions to withdraw their support from major coal projects. In the aftermath of the Townsville floods in February 2019 and widespread drought across Queensland, there have been renewed calls to boycott plans for Australia’s largest thermal coal mine. To date, 10 of the world’s largest (re)insurers have stated they will not provide property or construction cover for the US$15.5 billion Carmichael mine and rail project. And in its “Mining Risk Review 2018,” broker Willis Towers Watson warned that finding insurance for coal “is likely to become increasingly challenging — especially if North American insurers begin to follow the European lead.”
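
The return-period arithmetic in Dailey’s Hurricane Harvey example earlier in this article can be checked with a short sketch. The 1-in-250 and 1-in-100 figures are the illustrative numbers from his quote. The function names and the multi-year calculation, which assumes each year is independent with a constant annual probability, are assumptions added here for illustration and do not represent any RMS model.

```python
def annual_probability(return_period_years: float) -> float:
    """Annual probability implied by a return period (1-in-N years)."""
    return 1.0 / return_period_years


def probability_within(return_period_years: float, horizon_years: int) -> float:
    """Chance of at least one occurrence over a planning horizon.

    Assumption (not from the article): years are independent and the
    annual probability stays constant across the horizon.
    """
    p = annual_probability(return_period_years)
    return 1.0 - (1.0 - p) ** horizon_years


# Illustrative figures quoted by Dailey for a Harvey-like event.
historical = annual_probability(250)  # 0.4 percent per year
current = annual_probability(100)     # 1 percent per year
increase = current / historical       # about 2.5, i.e. "more than twofold"

# Hypothetical planning horizons, echoing the one-to-three-year and
# five-to-10-year views mentioned in the article.
for years in (3, 10):
    before = probability_within(250, years)
    after = probability_within(100, years)
    print(f"{years}-year horizon: {before:.1%} -> {after:.1%}")
```

Under these assumptions, the chance of seeing at least one such event in a 10-year window rises from roughly 4 percent to just under 10 percent, which is why a shift in return period matters more to a carrier with a long planning horizon.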

The Flames Burn Higher

With California experiencing two of the most devastating seasons on record in consecutive years, EXPOSURE asks whether wildfire now needs to be considered a peak peril.

Some of the statistics for the 2018 U.S. wildfire season appear normal. The season was a below-average year for the number of fires reported: 58,083 incidents represented only 84 percent of the 10-year average. The number of acres burned, 8,767,492, was above average at 132 percent of the 10-year figure.

Two factors, however, made it exceptional. First, for the second consecutive year, the Great Basin experienced intense wildfire activity, with some 2.1 million acres burned, 233 percent of the 10-year average. And second, the fires destroyed 25,790 structures, with California accounting for over 23,600 of the structures destroyed, compared to a 10-year U.S. annual average of 2,701 residences, according to the National Interagency Fire Center.

As of January 28, 2019, reported insured losses for the November 2018 California wildfires, which included the Camp and Woolsey Fires, were at US$11.4 billion, according to the California Department of Insurance. Add to this the insured losses of US$11.79 billion reported in January 2018 for the October and December 2017 California events, and these two consecutive wildfire seasons constitute the most devastating on record for the wildfire-exposed state.

Reaching its Peak?

Such colossal losses in consecutive years have sent shockwaves through the (re)insurance industry and are forcing a reassessment of wildfire’s secondary status in the peril hierarchy.

According to Mark Bove, natural catastrophe solutions manager at Munich Reinsurance America, wildfire’s status needs to be elevated in highly exposed areas. “Wildfire should certainly be considered a peak peril in areas such as California and the Intermountain West,” he states, “but not for the nation as a whole.” His views are echoed by Chris Folkman, senior director of product management at RMS.
“Wildfire can no longer be viewed purely as a secondary peril in these exposed territories,” he says. “Six of the top 10 fires for structural destruction have occurred in the last 10 years in the U.S., while seven of the top 10, and 10 of the top 20 most destructive wildfires in California history have occurred since 2015. The industry now needs to achieve a level of maturity with regard to wildfire that is on a par with that of hurricane or flood.”

However, he is wary about potential knee-jerk reactions to this hike in wildfire-related losses. “There is a strong parallel between the 2017-18 wildfire seasons and the 2004-05 hurricane seasons in terms of people’s gut instincts. 2004 saw four hurricanes make landfall in Florida, with K-R-W [Hurricanes Katrina, Rita and Wilma] causing massive devastation in 2005. At the time, some pockets of the industry wondered out loud if parts of Florida were uninsurable. Yet the next decade was relatively benign in terms of hurricane activity.

“The key is to adopt a balanced, long-term view,” thinks Folkman. “At RMS, we think that fire severity is here to stay, while the frequency of big events may remain volatile from year-to-year.”

A Fundamental Re-evaluation

The California losses are forcing (re)insurers to overhaul their approach to wildfire, both at the individual risk and portfolio management levels.

“The 2017 and 2018 California wildfires have forced one of the biggest re-evaluations of a natural peril since Hurricane Andrew in 1992,” believes Bove. “For both California wildfire and Hurricane Andrew, the industry didn’t fully comprehend the potential loss severities. Catastrophe models were relatively new and had not gained market-wide adoption, and many organizations were not systematically monitoring and limiting large accumulation exposure in high-risk areas.
As a result, the shocks to the industry were similar.”

For decades, approaches to underwriting have focused on the wildland-urban interface (WUI), which represents the area where exposure and vegetation meet. However, exposure levels in these areas are increasing sharply. Together with excessive amounts of burnable vegetation, extended wildfire seasons, and climate-change-driven increases in temperature and extreme weather conditions, this growth is causing a significant hike in exposure potential for the (re)insurance industry.

A recent report published in PNAS entitled “Rapid Growth of the U.S. Wildland-Urban Interface Raises Wildfire Risk” showed that between 1990 and 2010 the new WUI area increased by 72,973 square miles (189,000 square kilometers), an area larger than Washington State. The report stated: “Even though the WUI occupies less than one-tenth of the land area of the conterminous United States, 43 percent of all new houses were built there, and 61 percent of all new WUI houses were built in areas that were already in the WUI in 1990 (and remain in the WUI in 2010).”

“The WUI has formed a central component of how wildfire risk has been underwritten,” explains Folkman, “but you cannot simply adopt a black-and-white approach to risk selection based on properties within or outside of the zone. As recent losses, and in particular the 2017 Northern California wildfires, have shown, regions outside of the WUI zone considered low risk can still experience devastating losses.”

For Bove, while focus on the WUI is appropriate, particularly given the Coffey Park disaster during the 2017 Tubbs Fire, there is not enough focus on the intermix areas. This is the area where properties are interspersed with vegetation. “In some ways, the wildfire risk to intermix communities is worse than that at the interface,” he explains.
“In an intermix fire, you have both a wildfire and an urban conflagration impacting the town at the same time, while in interface locations the fire has largely transitioned to an urban fire.

“In an intermix community,” he continues, “the terrain is often more challenging and limits firefighter access to the fire as well as evacuation routes for local residents. Also, many intermix locations are far from large urban centers, limiting the amount of firefighting resources immediately available to start combatting the blaze, and this increases the potential for a fire in high-wind conditions to become a significant threat. Most likely we’ll see more scrutiny and investigation of risk in intermix towns across the nation after the Camp Fire’s decimation of Paradise, California.”

Rethinking Wildfire Analysis

According to Folkman, the need for greater market maturity around wildfire will require a rethink of how the industry currently analyzes the exposure and the tools it uses. “Historically, the industry has relied primarily upon deterministic tools to quantify U.S. wildfire risk,” he says, “which relate overall frequency and severity of events to the presence of fuel and climate conditions, such as high winds, low moisture and high temperatures.”

While such tools can prove valuable for addressing “typical” wildland fire events, such as the 2017 Thomas Fire in Southern California, their flaws have been exposed by other recent losses.

“Such tools insufficiently address major catastrophic events that occur beyond the WUI into areas of dense exposure,” explains Folkman, “such as the Tubbs Fire in Northern California in 2017.
Further, the unprecedented severity of recent wildfire events has exposed the weaknesses in maintaining a historically based deterministic approach.” While the scale of the 2017-18 losses has focused (re)insurer attention on California, companies must also recognize the scope for potential catastrophic wildfire risk extends beyond the boundaries of the western U.S. “While the frequency and severity of large, damaging fires is lower outside California,” says Bove, “there are many areas where the risk is far from negligible.” While acknowledging that the broader western U.S. is seeing increased risk due to WUI expansion, he adds: “Many may be surprised that similar wildfire risk exists across most of the southeastern U.S., as well as sections of the northeastern U.S., like in the Pine Barrens of southern New Jersey.” As well as addressing the geographical gaps in wildfire analysis, Folkman believes the industry must also recognize the data gaps limiting their understanding. “There are a number of areas that are understated in underwriting practices currently, such as the far-ranging impacts of ember accumulations and their potential to ignite urban conflagrations, as well as vulnerability of particular structures and mitigation measures such as defensible space and fire-resistant roof coverings.” In generating its US$9 billion to US$13 billion loss estimate for the Camp and Woolsey Fires, RMS used its recently launched North America Wildfire High-Definition (HD) Models to simulate the ignition, fire spread, ember accumulations and smoke dispersion of the fires. “In assessing the contribution of embers, for example,” Folkman states, “we modeled the accumulation of embers, their wind-driven travel and their contribution to burn hazard both within and beyond the fire perimeter. Average ember contributions to structure damage and destruction is approximately 15 percent, but in a wind-driven event such as the Tubbs Fire this figure is much higher. 
This was a key factor in the urban conflagration in Coffey Park.”

The model also provides full contiguous U.S. coverage, and includes other model innovations such as ignition and footprint simulations for 50,000 years, flexible occurrence definitions, smoke and evacuation loss across and beyond the fire perimeter, and vulnerability and mitigation measures on which RMS collaborated with the Insurance Institute for Business & Home Safety.

Smoke damage, which leads to loss from evacuation orders and contents replacement, is often overlooked in risk assessments, despite composing a tangible portion of the loss, says Folkman. “These are very high-frequency, medium-sized losses and must be considered. The Woolsey Fire saw 260,000 people evacuated, incurring hotel, meal and transport-related expenses. Add to this smoke damage, which often results in high-value contents replacement, and you have a potential sea of medium-sized claims that can contribute significantly to the overall loss.”

A further data resolution challenge relates to property characteristics. While primary property attribute data is typically well captured, believes Bove, many secondary characteristics key to wildfire are either not captured or not consistently captured. “This leaves the industry overly reliant on both average model weightings and risk scoring tools. For example, information about defensible spaces, roofing and siding materials, protecting vents and soffits from ember attacks, these are just a few of the additional fields that the industry will need to start capturing to better assess wildfire risk to a property.”

A Highly Complex Peril

Bove is, however, conscious of the simple fact that “wildfire behavior is extremely complex and non-linear.” He continues: “While visiting Paradise, I saw properties that did everything correct with regard to wildfire mitigation but still burned and risks that did everything wrong and survived.
However, mitigation efforts can improve the probability that a structure survives.” “With more data on historical fires,” Folkman concludes, “more research into mitigation measures and increasing awareness of the risk, wildfire exposure can be addressed and managed. But it requires a team mentality, with all parties — (re)insurers, homeowners, communities, policymakers and land-use planners — all playing their part.”

In Total Harmony

Karen White joined RMS as CEO in March 2018, followed closely by Moe Khosravy, general manager of software and platform activities. EXPOSURE talks to both, along with Mohsen Rahnama, chief risk modeling officer and one of the firm’s most long-standing team members, about their collective vision for the company, innovation, transformation and technology in risk management Karen and Moe, what was it that sparked your interest in joining RMS? Karen: What initially got me excited was the strength of the hand we have to play here and the fact that the insurance sector is at a very interesting time in its evolution. The team is fantastic — one of the most extraordinary groups of talent I have come across. At our core, we have hundreds of Ph.D.s, superb modelers and scientists, surrounded by top engineers, and computer and data scientists. I firmly believe no other modeling firm holds a candle to the quality of leadership and depth and breadth of intellectual property at RMS. We are years ahead of our competitors in terms of the products we deliver. Moe: For me, what can I say? When Karen calls with an idea it’s very hard to say no! However, when she called about the RMS opportunity, I hadn’t ever considered working in the insurance sector. My eureka moment came when I looked at the industry’s challenges and the technology available to tackle them. I realized that this wasn’t simply a cat modeling property insurance play, but was much more expansive. If you generalize the notion of risk and loss, the potential of what we are working on and the value to the insurance sector becomes much greater. I thought about the technologies entering the sector and how new developments on the AI [artificial intelligence] and machine learning front could vastly expand current analytical capabilities. I also began to consider how such technologies could transform the sector’s cost base. In the end, the decision to join RMS was pretty straightforward. 
“Developments such as AI and machine learning are not fairy dust to sprinkle on the industry’s problems” Karen White CEO, RMS Karen: The industry itself is reaching a eureka moment, which is precisely where I love to be. It is at a transformational tipping point — the technology is available to enable this transformation and the industry is compelled to undertake it. I’ve always sought to enter markets at this critical point. When I joined Oracle in the 1990s, the business world was at a transformational point — moving from client-server computing to Internet computing. This has brought about many of the huge changes we have seen in business infrastructure since, so I had a bird’s-eye view of what was a truly extraordinary market shift coupled with a technology shift. That experience made me realize how an architectural shift coupled with a market shift can create immense forward momentum. If the technology can’t support the vision, or if the challenges or opportunities aren’t compelling enough, then you won’t see that level of change occur. Do (re)insurers recognize the need to change and are they willing to make the digital transition required? Karen: I absolutely think so. There are incredible market pressures to become more efficient, assess risks more effectively, improve loss ratios, achieve better business outcomes and introduce more beneficial ways of capitalizing risk. You also have numerous new opportunities emerging. New perils, new products and new ways of delivering those products that have huge potential to fuel growth. These can be accelerated not just by market dynamics but also by a smart embrace of new technologies and digital transformation. Mohsen: Twenty-five years ago when we began building models at RMS, practitioners simply had no effective means of assessing risk. So, the adoption of model technology was a relatively simple step. 
Today, the extreme levels of competition are making the ability to differentiate risk at a much more granular level a critical factor, and our model advances are enabling that. In tandem, many of the Silicon Valley technologies have the potential to greatly enhance efficiency, improve processing power, minimize cost, boost speed to market, enable the development of new products, and positively impact every part of the insurance workflow. Data is the primary asset of our industry — it is the source of every risk decision, and every risk is itself an opportunity. The amount of data is increasing exponentially, and we can now capture more information much faster than ever before, and analyze it with much greater accuracy to enable better decisions. It is clear that the potential is there to change our industry in a positive way. The industry is renowned for being risk averse. Is it ready to adopt the new technologies that this transformation requires? Karen: The risk of doing nothing given current market and technology developments is far greater than that of embracing emerging tech to enable new opportunities and improve cost structures, even though there are bound to be some bumps in the road. I understand the change management can be daunting. But many of the technologies RMS is leveraging to help clients improve price performance and model execution are not new. AI, the Cloud and machine learning are already tried and trusted, and the insurance market will benefit from the lessons other industries have learned as it integrates these technologies. “The sector is not yet attracting the kind of talent that is attracted to firms such as Google, Microsoft or Amazon — and it needs to” Moe Khosravy EVP, Software and Platform, RMS Moe: Making the necessary changes will challenge the perceived risk-averse nature of the insurance market as it will require new ground to be broken. 
However, if we can clearly show how these capabilities can help companies be measurably more productive and achieve demonstrable business gains, then the market will be more receptive to new user experiences. Mohsen: The performance gains that technology is introducing are immense. A few years ago, we were using computational fluid dynamics to model storm surge. We were conducting the analysis through CPU [central processing unit] microprocessors, which was taking weeks. With the advent of GPU [graphics processing unit] microprocessors, we can carry out the same level of analysis in hours. When you add the supercomputing capabilities possible in the Cloud, which has enabled us to deliver HD-resolution models to our clients — in particular for flood, which requires a high-gradient hazard model to differentiate risk effectively — it has enhanced productivity significantly and in tandem price performance. Is an industry used to incremental change able to accept the stepwise change technology can introduce? Karen: Radical change often happens in increments. The change from client-server to Internet computing did not happen overnight, but was an incremental change that came in waves and enabled powerful market shifts. Amazon is a good example of market leadership out of digital transformation. It launched in 1994 as an online bookstore in a mature, relatively sleepy industry. It evolved into broad e-commerce and again with the introduction of Cloud services when it launched AWS [Amazon Web Services] 12 years ago — now a US$17 billion business that has disrupted the computer industry and is a huge portion of its profit. Amazon has total revenue of US$178 billion from nothing over 25 years, having disrupted the retail sector. Retail consumption has changed dramatically, but I can still go shopping on London’s Oxford Street and about 90 percent of retail is still offline. 
My point is, things do change incrementally but standing still is not a great option when technology-fueled market dynamics are underway. Getting out in front can be enormously rewarding and create new leadership. However, we must recognize that how we introduce technology must be driven by the challenges it is being introduced to address. I am already hearing people talk about developments such as AI, machine learning and neural networks as if they are fairy dust to sprinkle on the industry’s problems. That is not how this transformation process works. How are you approaching the challenges that this transformation poses? Karen: At RMS, we start by understanding the challenges and opportunities from our customers’ perspectives and then look at what value we can bring that we have not brought before. Only then can we look at how we deliver the required solution. Moe: It’s about having an “outward-in” perspective. We have amazing technology expertise across modeling, computer science and data science, but to deploy that effectively we must listen to what the market wants. We know that many companies are operating multiple disparate systems within their networks that have simply been built upon again and again. So, we must look at harnessing technology to change that, because where you have islands of data, applications and analysis, you lose fidelity, time and insight and costs rise. Moe: While there is a commonality of purpose spanning insurers, reinsurers and brokers, every organization is different. At RMS, we must incorporate that into our software and our platforms. There is no one-size-fits-all and we can’t force everyone to go down the same analytical path. That’s why we are adopting a more modular approach in terms of our software. Whether the focus is portfolio management or underwriting decision-making, it’s about choosing those modules that best meet your needs. 
“Data is the primary asset of our industry — it is the source of every risk decision, and every risk is itself an opportunity” Mohsen Rahnama, PhD Chief Risk Modeling Officer, RMS Mohsen: When constructing models, we focus on how we can bring the right technology to solve the specific problems our clients have. This requires a huge amount of critical thinking to bring the best solution to market. How strong is the talent base that is helping to deliver this level of capability? Mohsen: RMS is extremely fortunate to have such a fantastic array of talent. This caliber of expertise is what helps set us apart from competitors, enabling us to push boundaries and advance our modeling capabilities at the speed we are. Recently, we have set up teams of modelers and data and computer scientists tasked with developing a range of innovations. It’s fantastic having this depth of talent, and when you create an environment in which innovative minds can thrive you quickly reap the rewards — and that is what we are seeing. In fact, I have seen more innovation at RMS in the last six months than over the past several years. Moe: I would add though that the sector is not yet attracting the kind of talent seen at firms such as Google, Microsoft or Amazon, and it needs to. These companies are either large-scale customer-service providers capitalizing on big data platforms and leading-edge machine-learning techniques to achieve the scale, simplicity and flexibility their customers demand, or enterprises actually building these core platforms themselves. When you bring new blood into an organization or industry, you generate new ideas that challenge current thinking and practices, from the user interface to the underlying platform or the cost of performance. We need to do a better PR job as a technology sector. The best and brightest people in most cases just want the greatest problems to tackle — and we have a ton of those in our industry. 
Karen: The critical component of any successful team is a balance of complementary skills and capabilities focused on having a high impact on an interesting set of challenges. If you get that dynamic right, then that combination of different lenses correctly aligned brings real clarity to what you are trying to achieve and how to achieve it. I firmly believe at RMS we have that balance. If you look at the skills, experience and backgrounds of Moe, Mohsen and myself, for example, they couldn’t be more different. Bringing Moe and Mohsen together, however, has quickly sparked great and different thinking. They work incredibly well together despite their vastly different technical focus and career paths. In fact, we refer to them as the “Moe-Moes” and made them matching inscribed giant chain necklaces and presented them at an all-hands meeting recently. Moe: Some of the ideas we generate during our discussions and with other members of the modeling team are incredibly powerful. What’s possible here at RMS we would never have been able to even consider before we started working together. Mohsen: Moe’s vast experience of building platforms at companies such as HP, Intel and Microsoft is a great addition to our capabilities. Karen brings a history of innovation and building market platforms with the discipline and the focus we need to deliver on the vision we are creating. If you look at the huge amount we have been able to achieve in the months that she has been at RMS, that is a testament to the clear direction we now have. Karen: While we do come from very different backgrounds, we share a very well-defined culture. We care deeply about our clients and their needs. We challenge ourselves every day to innovate to meet those needs, while at the same time maintaining a hell-bent pragmatism to ensure we deliver. Mohsen: To achieve what we have set out to achieve requires harmony. 
It requires a clear vision, the scientific know-how, the drive to learn more, the ability to innovate and the technology to deliver — all working in harmony. Career Highlights Karen White is an accomplished leader in the technology industry, with a 25-year track record of leading, innovating and scaling global technology businesses. She started her career in Silicon Valley in 1993 as a senior executive at Oracle. Most recently, Karen was president and COO at Addepar, a leading fintech company serving the investment management industry with data and analytics solutions. Moe Khosravy has over 20 years of software innovation experience delivering enterprise-grade products and platforms differentiated by data science, powerful analytics and applied machine learning to help transform industries. Most recently he was vice president of software at HP Inc., supporting hundreds of millions of connected devices and clients. Mohsen Rahnama leads a global team of accomplished scientists, engineers and product managers responsible for the development and delivery of all RMS catastrophe models and data. During his 20 years at RMS, he has been a dedicated, hands-on leader of the largest team of catastrophe modeling professionals in the industry.

IFRS17: Under the Microscope

How new accounting standards could reduce demand for reinsurance as cedants are forced to look more closely at underperforming books of business They may not be coming into effect until January 1, 2021, but the new IFRS 17 accounting standards are already shaking up the insurance industry. And they are expected to have an impact on the January 1, 2019, renewals as insurers ready themselves for the new regime. Crucially, IFRS 17 will require insurers to recognize immediately the full loss on any unprofitable insurance business. “The standard states that reinsurance contracts must now be valued and accounted for separate to the underlying contracts, meaning that traditional ‘netting down’ (gross less reinsured) and approximate methods used for these calculations may no longer be valid,” explained PwC partner Alex Bertolotti in a blog post. “Even an individual reinsurance contract could be material in the context of the overall balance sheet, and so have the potential to create a significant mismatch between the value placed on reinsurance and the value placed on the underlying risks,” he continued. “This problem is not just an accounting issue, and could have significant strategic and operational implications as well as an impact on the transfer of risk, on tax, on capital and on Solvency II for European operations.” In fact, the requirements under IFRS 17 could lead to a drop in reinsurance purchasing, according to consultancy firm Hymans Robertson, as cedants are forced to question why they are deriving value from reinsurance rather than the underlying business on unprofitable accounts. “This may dampen demand for reinsurance that is used to manage the impact of loss making business,” it warned in a white paper. Cost of Compliance The new accounting standards will also be a costly compliance burden for many insurance companies. 
Ernst & Young estimates that firms with over US$25 billion in Gross Written Premium (GWP) could be spending over US$150 million preparing for IFRS 17. Under the new regime, insurers will need to account for their business performance at a more granular level. In order to achieve this, it is important to capture more detailed information on the underlying business at the point of underwriting, explained Corina Sutter, director of government and regulatory affairs at RMS. This can be achieved by deploying systems and tools that allow insurers to capture, manage and analyze such granular data in increasingly high volumes, she said. “It is key for those systems or tools to be well-integrated into any other critical data repositories, analytics systems and reporting tools. “From a modeling perspective, analyzing performance at contract level means precisely understanding the risk that is being taken on by insurance firms for each individual account,” continued Sutter. “So, for P&C lines, catastrophe risk modeling may be required at account level. Many firms already do this today in order to better inform their pricing decisions. IFRS 17 is a further push to do so. “It is key to use tools that not only allow the capture of the present risk, but also the risk associated with the future expected value of a contract,” she added. “Probabilistic modeling provides this capability as it evaluates risk over time.”

A Model Operation

EXPOSURE explores the rationale, challenges and benefits of adopting an outsourced model function Business process outsourcing has become a mainstay of the operational structure of many organizations. In recent years, reflecting new technologies and changing market dynamics, the outsourced function has evolved significantly to fit seamlessly within existing infrastructure. On the modeling front, the exponential increase in data coupled with the drive to reduce expense ratios while enhancing performance levels is making the outsourced model proposition an increasingly attractive one. The Business Rationale The rationale for outsourcing modeling activities spans multiple possible origin points, according to Neetika Kapoor Sehdev, senior manager at RMS. “Drivers for adopting an outsourced modeling strategy vary significantly depending on the company itself and their specific ambitions. It may be a new startup that has no internal modeling capabilities, with outsourcing providing access to every component of the model function from day one.” There is also the flexibility that such access provides, as Piyush Zutshi, director of RMS Analytical Services points out. “That creates a huge value-add in terms of our catastrophe response capabilities — knowing that we are able to report our latest position has made a big difference on this front” Judith Woo Starstone “In those initial years, companies often require the flexibility of an outsourced modeling capability, as there is a degree of uncertainty at that stage regarding potential growth rates and the possibility that they may change track and consider alternative lines of business or territories should other areas not prove as profitable as predicted.” Another big outsourcing driver is the potential to free up valuable internal expertise, as Sehdev explains. 
“Often, the daily churn of data processing consumes a huge amount of internal analytical resources,” she says, “and limits the opportunities for these highly skilled experts to devote sufficient time to analyzing the data output and supporting the decision-making process.” This all-too-common data stumbling block for many companies is one that not only affects their ability to capitalize fully on their data, but also to retain key analytical staff. “Companies hire highly skilled analysts to boost their data performance,” Zutshi says, “but most of their working day is taken up by data crunching. That makes it extremely challenging to retain that caliber of staff as they are massively overqualified for the role and also have limited potential for career growth.” Other reasons for outsourcing include new model testing. It provides organizations with a sandbox testing environment to assess the potential benefits and impact of a new model on their underwriting processes and portfolio management capabilities before committing to the license fee. The flexibility of outsourced model capabilities can also prove critical during renewal periods. These seasonal activity peaks can be factored into contracts to ensure that organizations are able to cope with the spike in data analysis required as they reanalyze portfolios, renew contracts, add new business and write off old business. “At RMS Analytical Services,” Zutshi explains, “we prepare for data surge points well in advance. 
We work with clients to understand the potential size of the analytical spike, and then we add a factor of 20 to 30 percent to that to ensure that we have the data processing power on hand should that surge prove greater than expected.” Things to Consider Integrating an outsourced function into existing modeling processes can prove a demanding undertaking, particularly in the early stages where companies will be required to commit time and resources to the knowledge transfer required to ensure a seamless integration. The structure of the existing infrastructure will, of course, be a major influencing factor in the ease of transition. “There are those companies that over the years have invested heavily in their in-house capabilities and developed their own systems that are very tightly bound within their processes,” Sehdev points out, “which can mean decoupling certain aspects is more challenging. For those operations that run much leaner infrastructures, it can often be more straightforward to decouple particular components of the processing.” RMS Analytical Services has, however, addressed this issue and now works increasingly within the systems of such clients, rather than operating as an external function. “We have the ability to work remotely, which means our teams operate fully within their existing framework. This removes the need to decouple any parts of the data chain, and we can fit seamlessly into their processes.” This also helps address any potential data transfer issues companies may have, particularly given increasingly stringent information management legislation and guidelines. There are a number of factors that will influence the extent to which a company will outsource its modeling function. Unsurprisingly, smaller organizations and startup operations are more likely to take the fully outsourced option, while larger companies tend to use it as a means of augmenting internal teams — particularly around data engineering. 
RMS Analytical Services operates various engagement models. Managed services are based on annual contracts governed by volume for data engineering and risk analytics. On-demand services are available for one-off risk analytics projects, renewals support, bespoke analysis such as event response, and new IP adoption. “Modeler down the hall” is a third option that provides ad hoc work, while the firm also offers consulting services around areas such as process optimization, model assessment and transition support. Making the Transition Work Starstone Insurance, a global specialty insurer providing a diversified range of property, casualty and specialty insurance to customers worldwide, has been operating an outsourced modeling function for two and a half years. “My predecessor was responsible for introducing the outsourced component of our modeling operations,” explains Judith Woo, head of exposure management at Starstone. “It was very much a cost-driven decision as outsourcing can provide a very cost-effective model.” The company operates a hybrid model, with the outsourced team working on most of the pre- and post-bind data processing, while its internal modeling team focuses on the complex specialty risks that fall within its underwriting remit. “The volume of business has increased over the years as has the quality of data we receive,” she explains. “The amount of information we receive from our brokers has grown significantly. A lot of the data processing involved can be automated and that allows us to transfer much of this work to RMS Analytical Services.” On a day-to-day basis, the process is straightforward, with the Starstone team uploading the data to be processed via the RMS data portal. The facility also acts as a messaging function with the two teams communicating directly. 
“In fact,” Woo points out, “there are email conversations that take place directly between our underwriters and the RMS Analytical Service team that do not always require our modeling division’s input.” However, reaching this level of integration and trust has required a strong commitment from Starstone to making the relationship work. “You are starting to work with a third-party operation that does not understand your business or its data processes. You must invest time and energy to go through the various systems and processes in detail,” she adds, “and that can take months depending on the complexity of the business. “You are essentially building an extension of your team, and you have to commit to making that integration work. You can’t simply bring them in, give them a particular problem and expect them to solve it without there being the necessary knowledge transfer and sharing of information.” Her internal modeling team of six has access to an outsourced team of 26, she explains, which greatly enhances the firm’s data-handling capabilities. “With such a team, you can import fresh data into the modeling process on a much more frequent basis, for example. That creates a huge value-add in terms of our catastrophe response capabilities — knowing that we are able to report our latest position has made a big difference on this front.” Creating a Partnership As with any working partnership, the initial phases are critical as they set the tone for the ongoing relationship. “We have well-defined due diligence and transition methodologies,” Zutshi states. “During the initial phase, we work to understand and evaluate their processes. 
We then create a detailed transition methodology, in which we define specific data templates, establish monthly volume loads, lean periods and surge points, and put in place communication and reporting protocols.” At the end, both parties have a fully documented data dictionary with business rules governing how data will be managed, coupled with the option to choose from a repository of 1,000+ validation rules for data engineering. This is reviewed on a regular basis to ensure all processes remain aligned with the practices and direction of the organization. “Often, the daily churn of data processing consumes a huge amount of internal analytical resources and limits the opportunities to devote sufficient time to analyzing the data output” — Neetika Kapoor Sehdev, RMS Service level agreements (SLAs) also form a central tenet of the relationship, as do stringent data compliance procedures. “Robust data security and storage is critical,” says Woo. “We have comprehensive NDAs [non-disclosure agreements] in place that are GDPR compliant to ensure that the integrity of our data is maintained throughout. We also have stringent SLAs in place to guarantee data processing turnaround times. Although, you need to agree on a reasonable time period reflecting the data complexity and also when it is delivered.” According to Sehdev, most SLAs that the analytical team operates require a 24-hour data turnaround rising to 48-72 hours for more complex data requirements, but clients are able to set priorities as needed. “However, there is no point delivering on turnaround times,” she adds, “if the quality of the data supplied is not fit for purpose. That’s why we apply a number of data quality assurance processes, which means that our first-time accuracy level is over 98 percent.” The Value-Add Most clients of RMS Analytical Services have outsourced modeling functions to the division for over seven years, with a number having worked with the team since it launched in 2004. 
The decision to incorporate their services is not taken lightly given the nature of the information involved and the level of confidence required in their capabilities. “The majority of our large clients bring us on board initially in a data-engineering capacity,” explains Sehdev. “It’s the building of trust and confidence in our ability, however, that helps them move to the next tranche of services.” The team has worked to strengthen and mature these relationships, which has enabled them to increase both the size and scope of the engagements they undertake. “With a number of clients, our role has expanded to encompass account modeling, portfolio roll-up and related consulting services,” says Zutshi. “Central to this maturing process is that we are interacting with them daily and have a dedicated team that acts as the primary touch point. We’re also working directly with the underwriters, which helps boost comfort and confidence levels. “For an outsourced model function to become an integral part of the client’s team,” he concludes, “it must be a close, coordinated effort between the parties. That’s what helps us evolve from a standard vendor relationship to a trusted partner.”

Taking Cloud Adoption to the Core

Insurance and reinsurance companies have been more reticent than other business sectors in embracing Cloud technology. EXPOSURE explores why it is time to ditch “the comfort blanket” The main benefits of Cloud computing are well-established and include scale, efficiency and cost effectiveness. The Cloud also offers economical access to huge amounts of computing power, ideal to tackle the big data/big analytics challenge. And exciting innovations such as microservices — allowing access to prebuilt, Cloud-hosted algorithms, artificial intelligence (AI) and machine learning applications, which can be assembled to build rapidly deployed new services — have the potential to transform the (re)insurance industry. And yet the industry has continued to demonstrate a reluctance to move its core services onto a Cloud-based infrastructure. While a growing number of insurance and reinsurance companies are using Cloud services (such as those offered by Amazon Web Services, Microsoft Azure and Google Cloud) for nonessential office and support functions, most have been reluctant to consider Cloud for their mission-critical infrastructure. In its research of Cloud adoption rates in regulated industries, such as banking, insurance and health care, McKinsey found, “Many enterprises are stuck supporting both their inefficient traditional data-center environments and inadequately planned Cloud implementations that may not be as easy to manage or as affordable as they imagined.” No Magic Bullet It also found that “lift and shift” is not enough, where companies attempt to move existing, monolithic business applications to the Cloud, expecting them to be “magically endowed with all the dynamic features.” “We’ve come up against a lot of that when explaining the difference in what a cloud-based risk platform offers,” says Farhana Alarakhiya, vice president of products at RMS. “Basically, what clients are showing us is their legacy offering placed on a new Cloud platform. 
It’s potentially a better user interface, but it’s not really transforming the process.”

Now is the time for the market-leading (re)insurers to make that leap and really transform how they do business, she says. “It’s about embracing the new and different and taking comfort in what other industries have been able to do. A lot of Cloud providers are making it very easy to deliver analytics on the Cloud. So, you’ve got the story of agility, scalability, predictability, compliance and security on the Cloud and access to new analytics, new algorithms, use of microservices when it comes to delivering predictive analytics.”

This ease of access to highly advanced analytics and new applications, unburdened by legacy systems, makes the Cloud highly attractive. Hussein Hassanali, managing partner at VTX Partners, a division of Volante Global, commented: “Cloud can also enhance long-term pricing adequacy and profitability driven by improved data capture, historical data analytics and automated links to third-party market information. Further, the ‘plug-and-play’ aspect allows you to continuously innovate by connecting to best-in-class third-party applications.”

While moving from a server-based platform to the Cloud can bring numerous advantages, there is a perceived unwillingness to put high-value data into that environment, driven by concerns over security and the regulatory implications. These include data protection rules governing whether or not data can be moved across borders.

“There are some interesting dichotomies in terms of attitude and reality,” says Craig Beattie, analyst at Celent Consulting. “Cloud-hosting providers in western Europe and North America are more likely to have better security than (re)insurers do in their internal data centers, but the board will often not support a move to put that sort of data outside of the company’s infrastructure.
“Today, most CIOs and executive boards have moved beyond the knee-jerk fears over security, and the challenges have become more practical,” he continues. “They will ask, ‘What can we put in the Cloud? What does it cost to move the data around and what does it cost to get the data back? What if it fails? What does that backup look like?’”

With a hybrid Cloud solution, insurers that want to tap into the scalability and cost efficiencies of a software-as-a-service (SaaS) model but are unwilling to relinquish their data sovereignty can have dedicated resources developed in which to place customer data alongside the Cloud infrastructure.

But while a private or hybrid solution has been touted as a good compromise for insurers nervous about data security, these are also more costly options. The challenge is whether the end solution can match the big Cloud providers with global footprints that have compliance and data sovereignty issues already covered for their customers.

“We hear a lot of things about the Internet being cheap, but if you partially adopt the Internet and you’ve got significant chunks of data, it gets very costly to shift those back and forth,” says Beattie.

A Cloud-first approach

Not moving to the Cloud is no longer a viable option long term, particularly as competitors make the transition and competition and disruption change the industry beyond recognition. Given the increasing cost and complexity involved in updating and linking legacy systems and expanding infrastructure to encompass new technology solutions, Cloud is the obvious choice for investment, thinks Beattie.

“If you’ve already built your on-premise infrastructure based on classic CPU-based processing, you’ve tied yourself in and you’re committed to whatever payback period you were expecting,” he says. “But predictive analytics and the infrastructure involved is moving too quickly to make that capital investment. So why would an insurer do that?
In many ways it just makes sense that insurers would move these services into the Cloud.

“State-of-the-art for machine learning processing 10 years ago was grids of generic CPUs,” he adds. “Five years ago, this was moving to GPU-based neural network analyses, and now we’ve got ‘AI chips’ coming to market. In an environment like that, the only option is to rent the infrastructure as it’s needed, lest we invest in something that becomes legacy in less time than it takes to install.”

Taking advantage of the power and scale of Cloud computing also advances the march toward real-time, big data analytics. Ricky Mahar, managing partner at VTX Partners, a division of Volante Global, added: “Cloud computing makes companies more agile and scalable, providing flexible resources for both power and space. It offers an environment critical to the ability of companies to fully utilize the data available and capitalize on real-time analytics. Running complex analytics using large data sets enhances both internal decision-making and profitability.”

As discussed, few (re)insurers have taken the plunge and moved their mission-critical business to a Cloud-based SaaS platform. But there are a handful. Among these first movers are some of the newer, less legacy-encumbered carriers, but also some of the industry’s more established players. The latter include U.S.-based life insurer MetLife, which announced last year that it was collaborating with IBM Cloud to build a platform designed specifically for insurers. Meanwhile, Munich Re America is offering a Cloud-hosted AI platform to its insurer clients.

“The ice is thawing and insurers and reinsurers are changing,” says Beattie. “Reinsurers [like Munich Re] are not just adopting Cloud but are launching new innovative products on the Cloud.”

What’s the danger of not adopting the Cloud?

“If your reasons for not adopting the Cloud are security-based, this reason really doesn’t hold up any more.
If it is about reliability and scalability, remember that the largest online enterprises, such as Amazon and Netflix, are all Cloud-based,” comments Farhana Alarakhiya. “The real worry is that there are so many exciting, groundbreaking innovations built in the Cloud for the (re)insurance industry, such as predictive analytics, which will transform the industry, that if you miss out on these because of outdated fears, you will damage your business. The industry is waiting for transformation, and it’s progressing fast in the Cloud.”