
RMS HWind Hurricane Forecasting and Response and ExposureIQ: Exposure Management Without the Grind

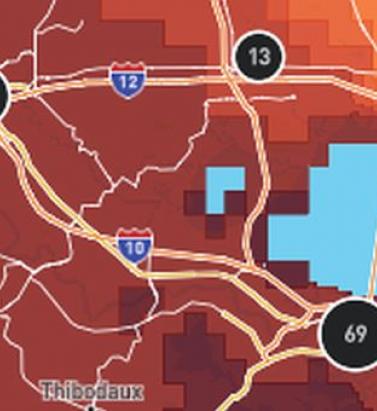

June 19, 2022

Accessing data in real time to assess and manage an insurance carrier’s potential liabilities from a loss event remains the holy grail for exposure management teams and is high on a business’ overall wish list. A 2021 PwC Pulse Survey of U.S. risk management leaders found that risk executives are increasingly taking advantage of “tech solutions for real-time and automated processes, including dynamic risk monitoring (30 percent), new risk management tech solutions (25 percent), data analytics (24 percent) [and] integrated risk management tools on a single platform (19 percent)”. PwC suggested that as part of an organization’s wider digital and business transformation process, risk management teams should therefore: “use technologies that work together, draw on common data sources, build enterprise-wide analytics and define common sets of metrics.” Separately, Deloitte’s 2021 third-party risk management (TPRM) survey found that 53 percent of respondents across a range of industry sectors wanted to improve real-time information, risk metrics, and reporting in their organizations. With the pandemic providing the unlikely backdrop for driving innovation across the business world, the Deloitte survey explained the statistic with the suggestion that one impact of COVID-19 “has been a greater need for real-time continuous assessment and alerts, rather than traditional point-in-time third-party assessment.”

Event Forecasting and Response with HWind and ExposureIQ

Natural catastrophe events are a risk analytics flash point. And while growing board-level awareness of the importance of real-time reporting might seem like a positive, unless the data is married with the right tools to gather and process it, together with a more integrated approach to risk management and modeling functions, the pain points for exposure managers on the event frontline are unlikely to be relieved.
RMS® ExposureIQ™ is an exposure management application available on the cloud-native RMS Intelligent Risk Platform™, which enables clients to centralize exposure data, process it, write direct reports and then run deterministic scenarios to quickly and accurately assess their exposure. When an event is threatening or impacts risks, an exposure management team needs to rapidly process the available data to work out their overall exposure and the likely effect on insured assets. The integration of event response data such as HWind into the ExposureIQ application is where the acquisition of this hazard data really starts to make a difference. The 2022 North Atlantic hurricane season, for example, is upon us, and access to regular, real-time data is relied upon as a crucial part of event response to tropical cyclones. With reliable event response analytics, updated in real-time, businesses can get fully prepared and ensure solvency through additional reinsurance cover, more accurately reserve funds, and confidently communicate risk to all stakeholders. The National Oceanic and Atmospheric Administration’s (NOAA) National Hurricane Center (NHC) has long been viewed as a valuable resource for forecasts on the expected track and severity of hurricanes. However, according to Callum Higgins, product manager, global climate, at RMS, “There are some limitations with what you get [from the NHC]. Forecasts lack detailed insights into the spatial variability of hazard severity and while uncertainty is accounted for, this is based on historical data rather than the forecast uncertainty specific to the storm. Hurricane Henri in 2021 was a good example of this. 
While the ultimate landfall location fell outside the NHC ‘cone of uncertainty’ four days in advance of landfall, given the large model uncertainty in the track for Henri, HWind forecasts were able to account for this possibility.”

Introducing HWind

RMS HWind provides observation-based tropical cyclone data for both real-time and historical events and was originally developed as a data service for the NHC by renowned meteorologist Dr. Mark Powell. It combines the widest set of observations for a particular storm in order to create the most accurate representation of its wind field. Since RMS acquired HWind in 2015, it has continually evolved as a solution that can be leveraged more easily by insurers to benefit individual use cases. HWind provides snapshots (instantaneous views of the storm’s wind field) and cumulative footprints (past swaths of the maximum wind speeds) every six hours. In addition, RMS delivers hurricane forecast data that includes a series of forecast scenarios of both the wind and surge hazard, enabling users to understand the potential severity of the event up to five days in advance of landfall. “Because HWind real-time products are released up to every six hours, you can adapt your response as forecasts shift. After an event has struck you very quickly get a good view of which areas have been impacted and to what level of severity,” explains Higgins.

The level of detail is another key differentiator. In contrast to the NHC forecasts, which do not include a detailed wind field, HWind provides much more data granularity, with forecast wind and surge scenarios developed by leveraging the RMS North Atlantic Hurricane Models. Snapshots and cumulative footprints, meanwhile, represent the wind field on a 1x1 kilometer grid. And while the NHC does provide uncertainty metrics in its forecasts, such as the “cone of uncertainty” around where the center of the storm will track, these are typically based on historical statistics.
“HWind accounts for the actual level of model convergence for a particular storm. That provides you with the insights you need to make decisions around how much confidence to place in each forecast, including whether a more conservative approach is required in cases of heightened uncertainty,” Higgins explains.

HWind’s observational approach and access to more than 30 data sources, some of which are exclusive to RMS, mean users are better able to capture a particular wind field and apply that data across a wide variety of use cases. Some HWind clients – most notably, Swiss Re – also use it as a trigger for parametric insurance policies. “That’s a critical component for some of our clients,” says Higgins. “For a parametric trigger, you want to make sure you have as accurate as possible a view of the wind speed experienced at underwritten locations when a hurricane strikes.”

Real-time data is only one part of the picture. The HWind Enhanced Archive is a catalog of data – including high-resolution images, snapshots, and footprints from historical hurricanes extending back almost 30 years – that can be used to validate historical claims and loss experience. “When we're creating forecasts in real-time, we only have the information of what has come before [in that particular storm],” says Higgins. “With the archive, we can take advantage of the data that comes in after we produce the snapshots and use all of that to produce an enhanced archive to improve what we do in real-time.”

Taking the Stress out of Event Response

“Event response is quite a stressful time for the insurance industry, because they've got to make business decisions based around what their losses could be,” Higgins adds.
“At the time of these live events, there's always increased scrutiny around their exposure and reporting.” HWind has plugged the gap in the market for a tool that can provide earlier, more frequent, and more detailed insights into the potential impact of a hurricane before, during, and following landfall. “The key reason for having HWind available with ExposureIQ is to have it all in one place,” explains Higgins. “There are many different sources of information out there, and during a live event the last thing you want to do is be scrambling across websites trying to see who's released what and then pull it across to your environment, so you can overlay it on your live portfolio of risks. As soon as we release the accumulation footprints, they are uploaded directly into the application, making it faster and more convenient for users to generate an understanding of potential loss for their specific portfolios." RMS applications such as ExposureIQ, and the modeling application Risk Modeler™, all use the same cloud-native Intelligent Risk Platform. This allows for a continuous workflow, allowing users to generate both accumulation analytics as well as modeled losses from the same set of exposure data. During an event, for example, with the seven hurricane scenarios that form part of the HWind data flow, the detailed wind fields and tracks (see Figure below) and the storm surge footprints for each scenario can be viewed on the ExposureIQ application for clients to run accumulations against. The application has a robust integrated mapping service that allows users to view their losses and hot spots on a map, and it also includes the functionality to switch to see the same data distributed in loss tables if that is preferred. “Now that we have both those on view in the cloud, you can overlay the footprint files on top of your exposures, and quickly see it before you even run [the accumulations],” says Higgins. 
Figure 1: RMS HWind forecast probability of peak gusts greater than 80 miles per hour from Hurricane Ida at 1200 UTC August 29, 2021, overlaid on exposure data within the RMS ExposureIQ application

One-Stop-Shop

This close interaction between HWind and the ExposureIQ application indicates another advantage of the RMS product suite – the use of consistent event response data across the platform so exposure mapping and modeling are all in one place. “The idea is that by having it on the cloud, it is much more performant; you can analyze online portfolios a lot more quickly, and you can get those reports to your board a lot faster than previously,” says Higgins.

In contrast to other solutions in the market, which typically use third-party hazard tools and modeling platforms, the RMS suite has a consistent model methodology flowing through the entire product chain. “That's really where the sweet spot of ExposureIQ is – this is all one connected ecosystem,” commented Higgins. “I get my data into ExposureIQ and it is in the same format as Risk Modeler, so I don't need to convert anything. Both products use a consistent financial model too – so you are confident the underlying policy and reinsurance terms are being applied in the same way.”

The modular nature of the RMS application ecosystem means that, in addition to hurricane risks, data on perils such as floods, earthquakes, and wildfires are also available – and then processed by the relevant risk modeling tool to give clients insights on their potential losses. “With that indication of where you might expect to experience claims, and how severe those claims might be, you can start to reach out to policyholders to understand if they've been affected.” At this point, clients are then in a good position to start building their claims deployment strategy, preparing claims adjusters to visit impacted sites and briefing reserving and other teams on when to start processing payments.
But even before a hurricane has made landfall, clients can make use of forecast wind fields to identify locations that might be affected in advance of the storm and warn policyholders to prepare accordingly. “That can not only help policyholders to protect their property but also mitigate insurance losses as well,” says Higgins. “Similarly, you can use it to apply an underwriting moratorium in advance of a storm. Identify areas that are likely to be impacted, and then feed that into underwriting processes to ensure that no one can write a policy in the region when a storm is approaching.”

The First Unified Risk Modeling Platform

Before moving to an integrated, cloud-based platform, these tools would likely have been hosted using on-premises servers with all the significant infrastructure costs that implies. Now, in addition to accessing a full suite of products via a single cloud-native platform, RMS clients can also leverage the company’s three decades of modeling expertise, benefiting from a strong foundation of trusted exposure data to help manage their exposures.

“A key goal for a lot of people responding to events is initially to understand what locations are affected, how severely they're affected, and what their total exposed limit is, and to inform things like deploying claims adjusters,” says Higgins. And beyond the exposure management function, argues Higgins, it’s about gearing up for the potential pain of those claims, the processes that go around that, and the communication to the board. “These catastrophic events can have a significant impact on a company’s revenue, and the full implications – and any potential mitigation – needs to be well understood.”

Find out more about the award-winning ExposureIQ.

Location, Location, Location: A New Era in Data Resolution

February 11, 2021

The insurance industry has reached a transformational point in its ability to accurately understand the details of exposure at risk. It is the point at which three fundamental components of exposure management are coming together to enable (re)insurers to systematically quantify risk at the location level: the availability of high-resolution location data, access to the technology to capture that data and advances in modeling capabilities to use that data.

Data resolution at the individual building level has increased considerably in recent years, including the use of detailed satellite imagery, while advances in data sourcing technology have provided companies with easier access to this more granular information. In parallel, the evolution of new innovations, such as RMS® High Definition Models™ and the transition to cloud-based technologies, has facilitated a massive leap forward in the ability of companies to absorb, analyze and apply this new data within their actuarial and underwriting ecosystems.

Quantifying Risk Uncertainty

“Risk has an inherent level of uncertainty,” explains Mohsen Rahnama, chief modeling officer at RMS. “The key is how you quantify that uncertainty. No matter what hazard you are modeling, whether it is earthquake, flood, wildfire or hurricane, there are assumptions being made. These catastrophic perils are low-probability, high-consequence events as evidenced, for example, by the 2017 and 2018 California wildfires or Hurricane Katrina in 2005 and Hurricane Harvey in 2017. For earthquake, examples include Tohoku in 2011, the New Zealand earthquakes in 2010 and 2011, and Northridge in 1994.
For this reason, risk estimation based on an actuarial approach cannot be carried out for these severe perils; physical models based upon scientific research and event characteristic data for estimating risk are needed.” A critical element in reducing uncertainty is a clear understanding of the sources of uncertainty from the hazard, vulnerability and exposure at risk. “Physical models, such as those using a high-definition approach, systematically address and quantify the uncertainties associated with the hazard and vulnerability components of the model,” adds Rahnama. “There are significant epistemic (also known as systematic) uncertainties in the loss results, which users should consider in their decision-making process. This epistemic uncertainty is associated with a lack of knowledge. It can be subjective and is reducible with additional information.” What are the sources of this uncertainty? For earthquake, there is uncertainty about the ground motion attenuation functions, soil and geotechnical data, the size of the events, or unknown faults. Rahnama explains: “Addressing the modeling uncertainty is one side of the equation. Computational power enables millions of events and more than 50,000 years of simulation to be used, to accurately capture the hazard and reduce the epistemic uncertainty. Our findings show that in the case of earthquakes the main source of uncertainty for portfolio analysis is ground motion; however, vulnerability is the main driver of uncertainty for a single location.” The quality of the exposure data as the input to any mathematical models is essential to assess the risk accurately and reduce the loss uncertainty. However, exposure could represent the main source of loss uncertainty, especially when exposure data is provided in aggregate form. Assumptions can be made to disaggregate exposure using other sources of information, which helps to some degree reduce the associated uncertainty. 
Rahnama concludes, “Therefore, it is essential in order to minimize the uncertainty related to exposure to try to get location-level information about the exposure, in particular for the region with the potential of liquefaction for earthquake or for high-gradient hazard such as flood and wildfire.” A critical element in reducing that uncertainty, removing those assumptions and enhancing risk understanding is combining location-level data and hazard information. That combination provides the data basis for quantifying risk in a systematic way. Understanding the direct correlation between risk or hazard and exposure requires location-level data. The potential damage caused to a location by flood, earthquake or wind will be significantly influenced by factors such as first-floor elevation of a building, distance to fault lines or underlying soil conditions through to the quality of local building codes and structural resilience. And much of that granular data is now available and relatively easy to access. “The amount of location data that is available today is truly phenomenal,” believes Michael Young, vice president of product management at RMS, “and so much can be accessed through capabilities as widely available as Google Earth. Straightforward access to this highly detailed satellite imagery means that you can conduct desktop analysis of individual properties and get a pretty good understanding of many of the building and location characteristics that can influence exposure potential to perils such as wildfire.” Satellite imagery is already a core component of RMS model capabilities, and by applying machine learning and artificial intelligence (AI) technologies to such images, damage quantification and differentiation at the building level is becoming a much more efficient and faster undertaking — as demonstrated in the aftermath of Hurricanes Laura and Delta.
“Within two days of Hurricane Laura striking Louisiana at the end of August 2020,” says Rahnama, “we had been able to assess roof damage to over 180,000 properties by applying our machine-learning capabilities to satellite images of the affected areas. We have ‘trained’ our algorithms to understand damage degree variations and can then superimpose wind speed and event footprint specifics to group the damage degrees into different wind speed ranges. What that also meant was that when Hurricane Delta struck the same region weeks later, we were able to see where damage from these two events overlapped.”

The Data Intensity of Wildfire

Wildfire by its very nature is a data-intensive peril, and the risk has a steep gradient where houses in the same neighborhood can have drastically different risk profiles. The range of factors that can make the difference between total loss, partial loss and zero loss is considerable, and to fully grasp their influence on exposure potential requires location-level data. The demand for high-resolution data has increased exponentially in the aftermath of recent record-breaking wildfire events, such as the series of devastating seasons in California in 2017-18, and unparalleled bushfire losses in Australia in 2019-20. Such events have also highlighted myriad deficiencies in wildfire risk assessment including the failure to account for structural vulnerabilities, the inability to assess exposure to urban conflagrations, insufficient high-resolution data and the lack of a robust modeling solution to provide insight about fire potential given the many years of drought.

Wildfires in 2018 devastated the town of Paradise, California

In 2019, RMS released its U.S. Wildfire HD Model, built to capture the full impact of wildfire at high resolution, including the complex behaviors that characterize fire spread, ember accumulation and smoke dispersion.
Able to simulate over 72 million wildfires across the contiguous U.S., the model creates ultrarealistic fire footprints that encompass surface fuels, topography, weather conditions, moisture and fire suppression measures. “To understand the loss potential of this incredibly nuanced and multifactorial exposure,” explains Michael Young, “you not only need to understand the probability of a fire starting but also the probability of an individual building surviving.

“If you look at many wildfire footprints,” he continues, “you will see that sometimes up to 60 percent of buildings within that footprint survived, and the focus is then on what increases survivability — defensible space, building materials, vegetation management, etc. We were one of the first modelers to build mitigation factors into our model, such as those building and location attributes that can enhance building resilience.”

Moving the Differentiation Needle

In a recent study by RMS and the Center for Insurance Policy Research, the Insurance Institute for Business and Home Safety and the National Fire Protection Association, RMS applied its wildfire model to quantifying the benefits of two mitigation strategies — structural mitigation and vegetation management — assessing hypothetical loss reduction benefits in nine communities across California, Colorado and Oregon. Young says: “By knowing what the building characteristics and protection measures are within the first 5 feet and 30 feet at a given property, we were able to demonstrate that structural modifications can reduce wildfire risk up to 35 percent, while structural and vegetation modifications combined can reduce it by up to 75 percent.
This level of resolution can move the needle on the availability of wildfire insurance as it enables development of robust rating algorithms to differentiate specific locations — and means that entire neighborhoods don’t have to be non-renewed.”

While acknowledging that modeling mitigation measures at a 5-foot resolution requires an immense granularity of data, RMS has demonstrated that its wildfire model is responsive to data at that level. “The native resolution of our model is 50-meter cells, which is a considerable enhancement on the zip-code level underwriting grids employed by some insurers. That cell size in a typical suburban neighborhood encompasses approximately three-to-five buildings. By providing the model environment that can utilize information within the 5-to-30-foot range, we are enabling our clients to achieve the level of data fidelity to differentiate risks at that property level. That really is a potential market game changer.”

Evolving Insurance Pricing

It is not hyperbolic to suggest that being able to combine high-definition modeling with high-resolution data can be market changing. The evolution of risk-based pricing in New Zealand is a case in point. The series of catastrophic earthquakes in the Christchurch region of New Zealand in 2010 and 2011 provided a stark demonstration of how insufficient data meant that the insurance market was blindsided by the scale of liquefaction-related losses from those events.
“The earthquakes showed that the market needed to get a lot smarter in how it approached earthquake risk,” says Michael Drayton, consultant at RMS, “and invest much more in understanding how individual building characteristics and location data influenced exposure performance, particularly in relation to liquefaction.

“To get to grips with this component of the earthquake peril, you need location-level data,” he continues. “To understand what triggers liquefaction, you must analyze the soil profile, which is far from homogenous. Christchurch, for example, sits on an alluvial plain, which means there are multiple complex layers of silt, gravel and sand that can vary significantly from one location to the next. In fact, across a large commercial or industrial complex, the soil structure can change significantly from one side of the building footprint to the other.”

Extensive building damage in downtown Christchurch, New Zealand after 2011 earthquake

The aftermath of the earthquake series saw a surge in soil data as teams of geotech engineers conducted painstaking analysis of layer composition. With multiple event sets to use, it was possible to assess which areas suffered soil liquefaction and from which specific ground-shaking intensity. “Updating our model with this detailed location information brought about a step-change in assessing liquefaction exposures. Previously, insurers could only assess average liquefaction exposure levels, which was of little use where you have highly concentrated risks in specific areas.
Through our RMS® New Zealand Earthquake HD Model, which incorporates 100-meter grid resolution and the application of detailed ground data, it is now possible to assess liquefaction exposure potential at a much more localized level.”

This development represents a notable market shift from community to risk-based pricing in New Zealand. With insurers able to differentiate risks at the location level, this has enabled companies such as Tower Insurance to more accurately adjust premium levels to reflect risk to the individual property or area. In its annual report in November 2019, Tower stated: “Tower led the way 18 months ago with risk-based pricing and removing cross-subsidization between low- and high-risk customers. Risk-based pricing has resulted in the growth of Tower’s portfolio in Auckland while also reducing exposure to high-risk areas by 16 percent. Tower’s fairer approach to pricing has also allowed the company to grow exposure by 4 percent in the larger, low-risk areas like Auckland, Hamilton, and Taranaki.”

Creating the Right Ecosystem

The RMS commitment to enable companies to put high-resolution data to both underwriting and portfolio management use goes beyond the development of HD Models™ and the integration of multiple layers of location-level data. Through the launch of RMS Risk Intelligence™, its modular, unified risk analytics platform, and the Risk Modeler™ application, which enables users to access, evaluate, compare and deploy all RMS models, the company has created an ecosystem built to support these next-generation data capabilities.
Deployed within the Cloud, the ecosystem thrives on the computational power that this provides, enabling proprietary and tertiary data analytics to rapidly produce high-resolution risk insights. A network of applications — including the ExposureIQ™ and SiteIQ™ applications and Location Intelligence API — support enhanced access to data and provide a more modular framework to deliver that data in a much more customized way.

“Because we are maintaining this ecosystem in the Cloud,” explains Michael Young, “when a model update is released, we can instantly stand that model side-by-side with the previous version. As more data becomes available each season, we can upload that new information much faster into our model environment, which means our clients can capitalize on and apply that new insight straightaway.”

Michael Drayton adds: “We’re also offering access to our capabilities in a much more modular fashion, which means that individual teams can access the specific applications they need, while all operating in a data-consistent environment. And the fact that this can all be driven through APIs means that we are opening up many new lines of thought around how clients can use location data.”

Exploring What Is Possible

There is no doubt that the market is on the cusp of a new era of data resolution — capturing detailed hazard and exposure and using the power of analytics to quantify the risk and risk differentiation. Mohsen Rahnama believes the potential is huge. “I foresee a point in the future where virtually every building will essentially have its own social-security-like number,” he believes, “that enables you to access key data points for that particular property and the surrounding location. It will effectively be a risk score, including data on building characteristics, proximity to fault lines, level of elevation, previous loss history, etc.
Armed with that information — and superimposing other data sources such as hazard data, geological data and vegetation data — a company will be able to systematically price risk and assess exposure levels for every asset up to the portfolio level.”

Bringing the focus back to the here and now, he adds, the expanding impacts of climate change are making the need for this data transformation a market imperative. “If you look at how many properties around the globe are located just one meter above sea level, we are talking about trillions of dollars of exposure. The only way we can truly assess this rapidly changing risk is by being able to systematically evaluate exposure based on high-resolution data and advanced modeling techniques that incorporate building resilience and mitigation measures. How will our exposure landscape look in 2050? The only way we will know is by applying that data resolution underpinned by the latest model science to quantify this evolving risk.”

This Changes Everything

May 05, 2020

At Exceedance 2020, RMS explored the key forces currently disrupting the industry, from technology, data analytics and the cloud through to rising extremes of catastrophic events like the pandemic and climate change. This coupling of technological and environmental disruption represents a true inflection point for the industry. EXPOSURE asked six experts across RMS for their views on why they believe these forces will change everything.

Cloud Computing: Moe Khosravy, Executive Vice President, Software and Platforms

How are you seeing businesses transition their workloads over to the cloud?

I have to say it’s been remarkable. We’re way past basic conversations on the value proposition of the cloud to now having deep technical discussions that are truly transformative plays. Customers are looking for solutions that seamlessly scale with their business and platforms that lower their cost of ownership while delivering capabilities that can be consumed from anywhere in the world.

Why is the cloud so important or relevant now?

It is now hard for a business to beat the benefits that the cloud offers and getting harder to justify buying and supporting complex in-house IT infrastructure. There is also a mindset shift going on — why is an in-house IT team responsible for running and supporting another vendor’s software on their systems if the vendor itself can provide that solution? This burden can now be lifted using the cloud, letting the business concentrate on what it does best.

Has the pandemic affected views of being in the cloud?

I would say absolutely. We have always emphasized the importance of cloud and true SaaS architectures to enable business continuity — allowing you to do your work from anywhere, decoupled from your IT and physical footprint. Never has the importance of this been more clearly underlined than during the past few months.
Risk Analytics: Cihan Biyikoglu, Executive Vice President, Product

What are the specific industry challenges that risk analytics is solving or has the potential to solve?

Risk analytics really is a wide field, but in the immediate short term one of the focus areas for us is improving productivity around data. So much time is spent by businesses trying to manually process data (cleansing, completing and correcting data) and on conversion between incompatible datasets. This alone is a huge barrier just to get a single set of results. If we can take this burden away, give decision-makers the power to get results in real time with automated and efficient data handling, then with that I believe we will liberate them to use the latest insights to drive business results. Another important innovation here is the HD Models™. The power of the new engine with its improved accuracy I believe is a game changer that will give our customers a competitive edge.

How will risk analytics impact activities and capabilities within the market?

As seen in other industries, the more data you can combine, the better the analytics become; that’s the universal law of analytics. Getting all of this data on a unified platform and combining different datasets unearths new insights, which could produce opportunities to serve customers better and drive profit or growth.

What are the longer-term implications for risk analytics?

In my view, it’s about generating more effective risk insights from analytics, which results in better decision-making and the ability to explore new product areas with more confidence. It will spark a wave of innovation to profitably serve customers with exciting products and understand the risk and cost drivers more clearly.

How is RMS capitalizing on risk analytics?
At RMS, we have the pieces in place for clients to accelerate their risk analytics with the unified, open platform, Risk Intelligence™, which is built on a Risk Data Lake™ in the cloud and is ready to take all sources of data and unearth new insights. Applications such as Risk Modeler™ and ExposureIQ™ can quickly get decision-makers to the analytics they need to influence their business.

Open Standards: Dr. Paul Reed, Technical Program Manager, RDOS

Why are open standards so important and relevant now?

I think the challenges of risk data interoperability and supporting new lines of business have been recognized for many years, as companies have been forced to rework existing data standards to try to accommodate emerging risks and to squeeze more data into proprietary standards that can trace their origins to the 1990s. Today, however, with the availability of big data technology, cloud platforms such as RMS Risk Intelligence and standards such as the Risk Data Open Standard™ (RDOS) allow support for high-resolution risk modeling, new classes of risk, complex contract structures and simplified data exchange.

Are there specific industry challenges that open standards are solving or have the potential to solve?

I would say that open standards such as the RDOS are helping to solve risk data interoperability challenges, which have been hindering the industry, and provide support for new lines of business. In the case of the RDOS, it’s specifically designed for extensibility, to create a risk data exchange standard that is future-proof and can be readily modified and adapted to meet both current and future requirements. Open standards in other industries, such as Kubernetes, Hadoop and HTML, have proven to be catalysts for collaborative innovation, enabling accelerated development of new capabilities.

How is RMS responding to and capitalizing on this development?
RMS contributed the RDOS to the industry, and we are using it as the data framework for our platform called Risk Intelligence. The RDOS is free for anyone to use, and anyone can contribute updates that can expand the value and utility of the standard, so its development and direction are not dependent on a single vendor. We’ve put in place an independent steering committee to guide the development of the standard, currently made up of 15 companies. It provides benefits to RMS clients not only by enhancing the new RMS platform and applications, but also by enabling other industry users who create new and innovative products and address new and emerging risk classes.

Pandemic Risk: Dr. Gordon Woo, Catastrophist

How does pandemic risk affect the market?

There’s no doubt that the current pandemic represents a globally systemic risk across many market sectors, and insurers are working out both what the impact from claims will be and the impact on capital. For very good reasons, people are categorizing the COVID-19 disease as a game-changer. However, in my view, SARS [severe acute respiratory syndrome] in 2003, MERS [Middle East respiratory syndrome] in 2012 and Ebola in 2014 should also have been game-changers. Over the last decade alone, we have seen multiple near misses. It’s likely that suppression strategies to combat the coronavirus will continue in some form until a vaccine is developed, and governments must strike this uneasy balance between their economies and the opening of their populations to exposure from the virus.

What are the longer-term implications of this current pandemic for the industry?

It’s clear that the mitigation of pandemic risk will need to be prioritized and given far more urgency than before. There’s no doubt in my mind that events such as the 2014 Ebola crisis were a missed opportunity for new initiatives in pandemic risk mitigation.
Away from the life and health sector, all insurers will need to have a better grasp on future pandemics, after seeing the wide business impact of COVID-19. The market could look to bold initiatives with governments to examine how to cover future pandemics, similar to how terror attacks are covered as a pooled risk.

How is RMS helping its clients in relation to COVID-19?

Since early January when the first cases emerged from Wuhan, China, we’ve been supporting our clients and the wider market in gaining a better understanding of the diverse loss implications of COVID-19. Our LifeRisks® team has been actively assisting in pandemic risk management, with regular communications and briefings, and will incorporate new perspectives from COVID-19 into our infectious diseases modeling.

Climate Change: Ryan Ogaard, Senior Vice President, Model Product Management

Why is climate change so relevant to the market now?

There are many reasons. Insurers and their stakeholders are looking at the constant flow of catastrophes, from the U.S. hurricane season of 2017, wildfires in California and bushfires in Australia, to recent major typhoons, and wondering if climate change is driving extreme weather risk, and what it could do in the future. They’re asking whether the current extent of climate change risk is priced into their premiums. Regulators are also beginning to conduct stress tests on the potential impact of climate change in the future, and insurers must respond.

How will climate change impact how the market operates?

Similar to any risk, insurers need to understand and quantify how the physical risk of climate change will impact their portfolios and adjust their strategy accordingly. Also, over the coming years it appears likely that regulators will incorporate climate change reporting into their regimes. Once an insurer understands their exposure to climate change risk, they can then start to take action, which will impact how the market operates.
These actions could be in the form of premium changes, mitigating actions such as supporting physical defenses, diversifying the risk or taking on more capital.

How is RMS responding to market needs around climate change?

RMS is listening to the needs of clients to understand their pain points around climate change risk, what actions they are taking and how we can add value. We’re working with a number of clients on bespoke studies that modify the current view of risk to project into the future and/or test the sensitivity of current modeling assumptions. We’re also working to help clients understand the extent to which climate change is already built into risk models, to educate clients on emerging climate change science and to explain whether there is or isn’t a clear climate change signal for a particular peril.

Cyber: Dr. Christos Mitas, Vice President, Model Development

How is this change currently manifesting itself?

While cyber risk itself is not new, anyone involved in protecting or insuring organizations against cyberattacks will know that the nature of cyber risk is forever evolving. This could involve changes in those perpetrating the attacks, from lone wolf criminals to state-backed actors, or in the type of target, from an unpatched personal computer to a power-plant control system. If you take the current COVID-19 pandemic, this has seen cybercriminals look to take advantage of millions of employees working from home or vulnerable business IT infrastructure. Change to the threat landscape is a constant for cyber risk.

Why is cyber risk so important and relevant right now?

Simply because new cyber risks emerge, and insurers who are active in this area need to ensure they are ahead of the curve in terms of awareness and have the tools and knowledge to manage new risks.
There have been systemic ransomware attacks over the last few years, and criminals continue to look for potential weaknesses in networked systems, third-party software and supply chains, all requiring constant vigilance. It’s this continual threat of a systemic attack that requires insurers to use effective tools based on cutting-edge science, to capture the latest threats and identify potential risk aggregation.

How is RMS responding to market needs around cyber risk?

With our latest RMS Cyber Solutions, which is version 4.0, we’ve worked closely with clients and the market to really understand the pain points within their businesses, with a wealth of new data assets and modeling approaches. One area is the ability to know the potential cyber risk of the type of business you are looking to insure. In version 4.0, we have a database of over 13 million businesses that can help enrich the information you have about your portfolio and prospective clients, which then leads to more prudent and effective risk modeling.

A Time to Change

Our industry is undergoing a period of significant disruption on multiple fronts. From the rapidly evolving exposure landscape and the extraordinary changes brought about by the pandemic to step-change advances in technology and seismic shifts in data analytics capabilities, the market is undergoing an unparalleled transition period. As Exceedance 2020 demonstrated, this is no longer a time for business as usual. This is what defines leaders and culls the rest. This changes everything.