Tag: EXPOSURE MANAGEMENT

RMS HWind Hurricane Forecasting and Response and ExposureIQ: Exposure Management Without the Grind

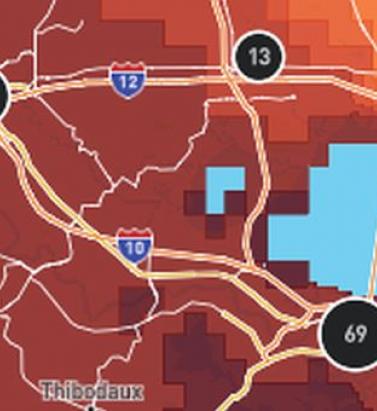

June 19, 2022

Accessing data in real-time to assess and manage an insurance carrier’s potential liabilities from a loss event remains the holy grail for exposure management teams and is high on a business’ overall wish list.

A 2021 PwC Pulse Survey of U.S. risk management leaders found that risk executives are increasingly taking advantage of “tech solutions for real-time and automated processes, including dynamic risk monitoring (30 percent), new risk management tech solutions (25 percent), data analytics (24 percent) [and] integrated risk management tools on a single platform (19 percent)”. PwC suggested that as part of an organization’s wider digital and business transformation process, risk management teams should therefore: “use technologies that work together, draw on common data sources, build enterprise-wide analytics and define common sets of metrics.”

Separately, Deloitte’s 2021 third-party risk management (TPRM) survey found that 53 percent of respondents across a range of industry sectors wanted to improve real-time information, risk metrics, and reporting in their organizations. With the pandemic providing the unlikely backdrop for driving innovation across the business world, the Deloitte survey explained the statistic with the suggestion that one impact of COVID-19 “has been a greater need for real-time continuous assessment and alerts, rather than traditional point-in-time third-party assessment.”

Event Forecasting and Response with HWind and ExposureIQ

Natural catastrophe events are a risk analytics flash point. And while growing board-level awareness of the importance of real-time reporting might seem like a positive, without marrying the data with the right tools to gather and process that data, together with a more integrated approach to risk management and modeling functions, the pain points for exposure managers on the event frontline are unlikely to be relieved.

RMS® ExposureIQ™ is an exposure management application available on the cloud-native RMS Intelligent Risk Platform™, which enables clients to centralize exposure data, process it, write direct reports and then run deterministic scenarios to quickly and accurately assess their exposure. When an event threatens or impacts risks, an exposure management team needs to rapidly process the available data to work out their overall exposure and the likely effect on insured assets. The integration of event response data such as HWind into the ExposureIQ application is where the acquisition of this hazard data really starts to make a difference.

The 2022 North Atlantic hurricane season, for example, is upon us, and access to regular, real-time data is relied upon as a crucial part of event response to tropical cyclones. With reliable event response analytics, updated in real-time, businesses can get fully prepared and ensure solvency through additional reinsurance cover, more accurately reserve funds, and confidently communicate risk to all stakeholders.

The National Oceanic and Atmospheric Administration’s (NOAA) National Hurricane Center (NHC) has long been viewed as a valuable resource for forecasts on the expected track and severity of hurricanes. However, according to Callum Higgins, product manager, global climate, at RMS, “There are some limitations with what you get [from the NHC]. Forecasts lack detailed insights into the spatial variability of hazard severity and while uncertainty is accounted for, this is based on historical data rather than the forecast uncertainty specific to the storm. Hurricane Henri in 2021 was a good example of this.
While the ultimate landfall location fell outside the NHC ‘cone of uncertainty’ four days in advance of landfall, given the large model uncertainty in the track for Henri, HWind forecasts were able to account for this possibility.”

Introducing HWind

RMS HWind provides observation-based tropical cyclone data for both real-time and historical events and was originally developed as a data service for the NHC by renowned meteorologist Dr. Mark Powell. It combines the widest set of observations for a particular storm in order to create the most accurate representation of its wind field. Since RMS acquired HWind in 2015, it has continually evolved as a solution that can be leveraged more easily by insurers to benefit individual use cases.

HWind provides snapshots (instantaneous views of the storm’s wind field) and cumulative footprints (past swaths of the maximum wind speeds) every six hours. In addition, RMS delivers hurricane forecast data that includes a series of forecast scenarios of both the wind and surge hazard, enabling users to understand the potential severity of the event up to five days in advance of landfall.

“Because HWind real-time products are released up to every six hours, you can adapt your response as forecasts shift. After an event has struck you very quickly get a good view of which areas have been impacted and to what level of severity,” explains Higgins.

The level of detail is another key differentiator. In contrast to the NHC forecasts, which do not include a detailed wind field, HWind provides much more data granularity, with forecast wind and surge scenarios developed by leveraging the RMS North Atlantic Hurricane Models. Snapshots and cumulative footprints, meanwhile, represent the wind field on a 1x1 kilometer grid. And while the NHC does provide uncertainty metrics in its forecasts, such as the “cone of uncertainty” around where the center of the storm will track, these are typically based on historical statistics.
“HWind accounts for the actual level of model convergence for a particular storm. That provides you with the insights you need to make decisions around how much confidence to place in each forecast, including whether a more conservative approach is required in cases of heightened uncertainty,” Higgins explains.

HWind’s observational approach and access to more than 30 data sources, some of which are exclusive to RMS, mean users are better able to capture a particular wind field and apply that data across a wide variety of use cases. Some HWind clients – most notably, Swiss Re – also use it as a trigger for parametric insurance policies.

“That’s a critical component for some of our clients,” says Higgins. “For a parametric trigger, you want to make sure you have as accurate as possible a view of the wind speed experienced at underwritten locations when a hurricane strikes.”

Real-time data is only one part of the picture. The HWind Enhanced Archive is a catalog of data – including high-resolution images, snapshots, and footprints from historical hurricanes extending back almost 30 years – that can be used to validate historical claims and loss experience.

“When we're creating forecasts in real-time, we only have the information of what has come before [in that particular storm],” says Higgins. “With the archive, we can take advantage of the data that comes in after we produce the snapshots and use all of that to produce an enhanced archive to improve what we do in real-time.”

Taking the Stress out of Event Response

“Event response is quite a stressful time for the insurance industry, because they've got to make business decisions based around what their losses could be,” Higgins adds.
“At the time of these live events, there's always increased scrutiny around their exposure and reporting.”

HWind has plugged the gap in the market for a tool that can provide earlier, more frequent, and more detailed insights into the potential impact of a hurricane before, during, and following landfall.

“The key reason for having HWind available with ExposureIQ is to have it all in one place,” explains Higgins. “There are many different sources of information out there, and during a live event the last thing you want to do is be scrambling across websites trying to see who's released what and then pull it across to your environment, so you can overlay it on your live portfolio of risks. As soon as we release the accumulation footprints, they are uploaded directly into the application, making it faster and more convenient for users to generate an understanding of potential loss for their specific portfolios.”

RMS applications such as ExposureIQ, and the modeling application Risk Modeler™, all use the same cloud-native Intelligent Risk Platform. This allows for a continuous workflow in which users can generate both accumulation analytics and modeled losses from the same set of exposure data.

During an event, for example, with the seven hurricane scenarios that form part of the HWind data flow, the detailed wind fields and tracks (see Figure 1) and the storm surge footprints for each scenario can be viewed on the ExposureIQ application for clients to run accumulations against. The application has a robust integrated mapping service that allows users to view their losses and hot spots on a map, and it also includes the functionality to switch to see the same data distributed in loss tables if that is preferred.

“Now that we have both those on view in the cloud, you can overlay the footprint files on top of your exposures, and quickly see it before you even run [the accumulations],” says Higgins.
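
To make the idea of running an accumulation against a footprint concrete, here is a minimal Python sketch. It is not the ExposureIQ implementation or any RMS API: the grid-cell lookup, the `Location` fields, the wind-speed thresholds and all the figures are hypothetical, chosen only to illustrate how exposed limit might be totaled by peak-gust band on a 1x1 kilometer grid.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Location:
    """One insured location (all fields are illustrative assumptions)."""
    name: str
    x_km: float           # easting in a local projected grid
    y_km: float           # northing in a local projected grid
    exposed_limit: float  # insured limit at this location


def cell_of(x_km, y_km, cell_km=1.0):
    """Map a coordinate to the index of the grid cell that contains it."""
    return (int(x_km // cell_km), int(y_km // cell_km))


def band_of(gust_mph, thresholds=(50, 80, 110)):
    """Label a gust with the highest threshold it meets or exceeds."""
    label = f"<{thresholds[0]} mph"
    for t in thresholds:
        if gust_mph >= t:
            label = f">={t} mph"
    return label


def accumulate(locations, footprint, cell_km=1.0):
    """Sum exposed limit per wind band for locations inside the footprint."""
    totals = defaultdict(float)
    for loc in locations:
        gust = footprint.get(cell_of(loc.x_km, loc.y_km, cell_km))
        if gust is None:
            continue  # location lies outside the footprint
        totals[band_of(gust)] += loc.exposed_limit
    return dict(totals)


# Hypothetical footprint: grid cell -> peak gust in mph.
footprint = {(0, 0): 95.0, (1, 0): 72.0, (2, 0): 45.0}
locations = [
    Location("A", 0.4, 0.7, 1_000_000.0),
    Location("B", 1.2, 0.1, 2_500_000.0),
    Location("C", 0.9, 0.2, 500_000.0),
    Location("D", 5.0, 5.0, 9_000_000.0),  # outside the footprint
]
totals = accumulate(locations, footprint)
print(totals)
```

In this toy portfolio, locations A and C fall in the strongest cell and B in the next band, while D is ignored because no footprint cell covers it. A production workflow would use geographic coordinates and the full policy terms rather than a flat limit.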

Figure 1: RMS HWind forecast probability of peak gusts greater than 80 miles per hour from Hurricane Ida at 1200UTC August 29, 2021, overlaid on exposure data within the RMS ExposureIQ application

One-Stop-Shop

This close interaction between HWind and the ExposureIQ application indicates another advantage of the RMS product suite – the use of consistent event response data across the platform so exposure mapping and modeling are all in one place.

“The idea is that by having it on the cloud, it is much more performant; you can analyze online portfolios a lot more quickly, and you can get those reports to your board a lot faster than previously,” says Higgins.

In contrast to other solutions in the market, which typically use third-party hazard tools and modeling platforms, the RMS suite has a consistent model methodology flowing through the entire product chain.

“That's really where the sweet spot of ExposureIQ is – this is all one connected ecosystem,” commented Higgins. “I get my data into ExposureIQ and it is in the same format as Risk Modeler, so I don't need to convert anything. Both products use a consistent financial model too – so you are confident the underlying policy and reinsurance terms are being applied in the same way.”

The modular nature of the RMS application ecosystem means that, in addition to hurricane risks, data on perils such as floods, earthquakes, and wildfires are also available – and then processed by the relevant risk modeling tool to give clients insights on their potential losses.

“With that indication of where you might expect to experience claims, and how severe those claims might be, you can start to reach out to policyholders to understand if they've been affected.”

At this point, clients are then in a good position to start building their claims deployment strategy, preparing claims adjusters to visit impacted sites and briefing reserving and other teams on when to start processing payments.

But even before a hurricane has made landfall, clients can make use of forecast wind fields to identify locations that might be affected in advance of the storm and warn policyholders to prepare accordingly.

“That can not only help policyholders to protect their property but also mitigate insurance losses as well,” says Higgins. “Similarly, you can use it to apply an underwriting moratorium in advance of a storm. Identify areas that are likely to be impacted, and then feed that into underwriting processes to ensure that no one can write a policy in the region when a storm is approaching.”

The First Unified Risk Modeling Platform

Before moving to an integrated, cloud-based platform, these tools would likely have been hosted using on-premises servers with all the significant infrastructure costs that implies. Now, in addition to accessing a full suite of products via a single cloud-native platform, RMS clients can also leverage the company’s three decades of modeling expertise, benefiting from a strong foundation of trusted exposure data to help manage their exposures.

“A key goal for a lot of people responding to events is initially to understand what locations are affected, how severely they're affected, and what their total exposed limit is, and to inform things like deploying claims adjusters,” says Higgins.

And beyond the exposure management function, argues Higgins, it’s about gearing up for the potential pain of those claims, the processes that go around that, and the communication to the board. “These catastrophic events can have a significant impact on a company’s revenue, and the full implications – and any potential mitigation – needs to be well understood.”

Find out more about the award-winning ExposureIQ.

The Peril of Ignoring The Tail

September 04, 2017

Drawing on several new data sources and gaining a number of new insights from recent earthquakes on how different fault segments might interact in future earthquakes, Version 17 of the RMS North America Earthquake Models sees the frequency of larger events increasing, making for a fatter tail. EXPOSURE asks what this means for (re)insurers from a pricing and exposure management perspective.

Recent major earthquakes, including the M9.0 Tohoku Earthquake in Japan in 2011 and the Canterbury Earthquake Sequence in New Zealand (2010-2011), have offered new insight into the complexities and interdependencies of losses that occur following major events. This insight, as well as other data sources, was incorporated into the latest seismic hazard maps released by the U.S. Geological Survey (USGS).

In addition to engaging with USGS on its 2014 update, RMS went on to invest more than 100 person-years of work in implementing the main findings of this update as well as comprehensively enhancing and updating all components in its North America Earthquake Models (NAEQ). The update reflects the deep complexities inherent in the USGS model and confirms the adage that “earthquake is the quintessential tail risk.” Among the changes to the RMS NAEQ models was the recognition that some faults can interconnect, creating correlations of risk that were not previously appreciated.

Lessons from Kaikoura

While there is still a lot of uncertainty surrounding tail risk, the new data sets provided by USGS and others have improved the understanding of events with a longer return period. “Global earthquakes are happening all of the time, not all large, not all in areas with high exposures,” explains Renee Lee, director, product management at RMS.
“Instrumentation has become more advanced and coverage has expanded such that scientists now know more about earthquakes than they did eight years ago when NAEQ was last released in Version 9.0.”

This includes understanding about how faults creep and release energy, how faults can interconnect, and how ground motions attenuate through soil layers and over large distances. “Soil plays a very important role in the earthquake risk modeling picture,” says Lee. “Soil deposits can amplify ground motions, which can potentially magnify the building’s response leading to severe damage.”

The 2016 M7.8 earthquake in Kaikoura, on New Zealand’s South Island, is a good example of a complex rupture where fault segments connected in more ways than had previously been realized. In Kaikoura, at least six fault segments were involved, where the rupture “jumped” from one fault segment to the next, producing a single larger earthquake.

“The Kaikoura quake was interesting in that we did have some complex energy release moving from fault to fault,” says Glenn Pomeroy, CEO of the California Earthquake Authority (CEA). “We can’t hide our heads in the sand and pretend that scientific awareness doesn’t exist. The probability has increased for a very large, but very infrequent, event, and we need to determine how to manage that risk.”

San Andreas Correlations

Looking at California, the updated models include events that extend from the north of San Francisco to the south of Palm Springs, correlating exposures along the length of the San Andreas Fault. While the prospect of a major earthquake impacting both northern and southern California is considered extremely remote, it will nevertheless affect how reinsurers seek to diversify different types of quake risk within their book of business.

“In the past, earthquake risk models have considered Los Angeles as being independent of San Francisco,” says Paul Nunn, head of catastrophe risk modeling at SCOR.
“Now we have to consider that these cities could have losses at the same time (following a full rupture of the San Andreas Fault).

“However, it doesn’t make that much difference in the sense that these events are so far out in the tail … and we’re not selling much coverage beyond the 1-in-500-year or 1-in-1,000-year return period. The programs we’ve sold will already have been exhausted long before you get to that level of severity.”

While the contribution of tail events to return period losses is significant, as Nunn explains, this could be more of an issue for insurance companies than (re)insurers, from a capitalization standpoint.

“From a primary insurance perspective, the bigger the magnitude and event footprint, the more separate claims you have to manage. So, part of the challenge is operational – in terms of mobilizing loss adjusters and claims handlers – but primary insurers also have the risk that losses from tail events could go beyond the (re)insurance program they have bought.

“It’s less of a challenge from the perspective of global (re)insurers, because most of the risk we take is on a loss limited basis – we sell layers of coverage,” he continues. “Saying that, pricing for the top layers should always reflect the prospect of major events in the tail and the uncertainty associated with that.”

He adds: “The magnitude of the Tohoku earthquake event is a good illustration of the inherent uncertainties in earthquake science and wasn’t represented in modeled scenarios at that time.”

While U.S. regulation stipulates that carriers writing quake business should capitalize to the 1-in-200-year event level, in Canada capital requirements are more conservative in an effort to better account for tail risk. “So, Canadian insurance companies should have less overhang out of the top of their (re)insurance programs,” says Nunn.
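
Nunn's distinction between selling layers and holding the loss above the top of a program can be illustrated with a short Python sketch. It uses the standard excess-of-loss arithmetic, in which a layer pays the part of the loss above its attachment point, capped at its limit. The two-layer program and the loss amounts are hypothetical figures in millions, not drawn from any carrier or model.

```python
def layer_recovery(gross_loss, attachment, limit):
    """Amount one excess-of-loss layer pays for a given gross loss."""
    return min(max(gross_loss - attachment, 0.0), limit)


def split_loss(gross_loss, layers):
    """Return per-layer recoveries and the loss left with the primary insurer.

    `layers` is a list of (attachment, limit) pairs.
    """
    recoveries = [layer_recovery(gross_loss, a, l) for a, l in layers]
    retained = gross_loss - sum(recoveries)
    return recoveries, retained


# Hypothetical program: 150 xs 100 and 250 xs 250, so cover tops out at 500.
program = [(100.0, 150.0), (250.0, 250.0)]

within_program = split_loss(400.0, program)
tail_event = split_loss(800.0, program)
print(within_program)  # loss stays inside the program
print(tail_event)      # loss exceeds the top of the program
```

For the 400 loss the insurer keeps only its 100 retention. For the 800 loss both layers pay in full and the insurer is left with 400: the retention plus the 300 "overhang" above the top of the program, while each reinsurer's payout is capped at its layer limit.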

Need for Post-Event Funding

For the CEA, the updated earthquake models could reinvigorate discussions around the need for a mechanism to raise additional claims-paying capacity following a major earthquake. Set up after the Northridge Earthquake in 1994, the CEA is a not-for-profit, publicly managed and privately funded earthquake pool.

“It is pretty challenging for a stand-alone entity to take on large tail risk all by itself,” says Pomeroy. “We have, from time to time, looked at the possibility of creating some sort of post-event risk-transfer mechanism.

“A few years ago, for instance, we had a proposal in front of the U.S. Congress that would have created the ability for the CEA to have done some post-event borrowing if we needed to pay for additional claims,” he continues. “It would have put the U.S. government in the position of guaranteeing our debt. The proposal didn’t get signed into law, but it is one example of how you could create an additional claim-paying capacity for that very large, very infrequent event.”

The CEA leverages both traditional and non-traditional risk-transfer mechanisms. “Risk transfer is important. No one entity can take it on alone,” says Pomeroy. “Through risk transfer from insurer to (re)insurer the risk is spread broadly through the entrance of the capital markets as another source for claim-paying capability and another way of diversifying the concentration of the risk.

“We manage our exposure very carefully by staying within our risk-transfer guidelines,” he continues. “When we look at spreading our risk, we look at spreading it through a large number of (re)insurance companies from 15 countries around the world.
And we know the (re)insurers have their own strict guidelines on how big their California quake exposure should be.”

The prospect of a higher frequency of larger events producing a “fatter” tail also raises the prospect of an overall reduction in average annual loss (AAL) for (re)insurance portfolios, a factor that is likely to add to pricing pressure as the industry approaches the key January 1 renewal date, predicts Nunn.

“The AAL for Los Angeles coming down in the models will impact the industry in the sense that it will affect pricing and how much probable maximum loss people think they’ve got. Most carriers are busy digesting the changes and carrying out due diligence on the new model updates.

“Although the eye-catching change is the possibility of the ‘big one,’ the bigger immediate impact on the industry is what’s happening at lower return periods where we’re selling a lot of coverage,” he says. “LA was a big driver of risk in the California quake portfolio and that’s coming down somewhat, while the risk in San Francisco is going up. So (re)insurers will be considering how to adjust the balance between the LA and San Francisco business they’re writing.”

Beware the Private Catastrophe

July 25, 2016

Having a poor handle on the exposure on their books can result in firms facing disproportionate losses relative to their peers following a catastrophic event, but is easily avoidable, says Shaheen Razzaq, senior director – product management, at RMS.

The explosions at Tianjin port, the floods in Thailand and, most recently, the Fort McMurray wildfires in Canada: what these major events have in common is the disproportionate impact of losses incurred by certain firms’ portfolios.

Take the Thai floods in 2011, an event which, at the time, was largely unmodeled. The floods that inundated several major industrial estates around Bangkok caused an accumulation of losses for some reinsurers, resulting in negative rating action, loss in share price and withdrawals from the market.

Last year’s Tianjin Port explosions in China also resulted in substantial insurance losses, which had an outsized impact on some firms, with significant concentrations of risk at the port or within impacted supply chains. The insured property loss from Asia’s most expensive human-caused catastrophe and the marine industry’s biggest loss since Superstorm Sandy is thought to be as high as US$3.5 billion, with significant “cost creep” as a result of losses from business interruption and contingent business interruption, clean-up and contamination expenses.

Some of the highest costs from Tianjin were suffered by European firms, with some firms experiencing losses reaching US$275 million.
The event highlighted the significant accumulation risk to non-modeled, man-made events in large transportation hubs such as ports, where much of the insurable content (cargo) is mobile and changeable and requires a deeper understanding of the exposures. Speaking about the firm’s experience in an interview with Bloomberg in early 2016, Zurich Insurance Group chairman and acting CEO Tom de Swaan noted how due to the accumulation of risk that had not been sufficiently detected, the firm was looking at ways to strengthen its exposure management to avoid such losses in the future.

There is a growing understanding that firms can avoid suffering disproportionate impacts from catastrophic events by taking a more analytical approach to mapping the aggregation risk within their portfolios. According to Validus chairman and CEO Ed Noonan, in statements following Tianjin last year, it is now “unacceptable” for the marine insurance industry not to seek to improve its modeling of risk in complex, ever-changing port environments.

Women carrying sandbags to protect ancient ruins in Ayutthaya, Thailand during the seasonal monsoon flooding.

While events such as the Tianjin port explosions, Thai floods and more recent Fort McMurray wildfires may have occurred in so-called industry “cold spots,” the impact of such events can be evaluated using deterministic scenarios to stress test a firm’s book of business. This can either provide a view of risk where there is a gap in probabilistic model coverage or supplement the view of risk from probabilistic models.

Although much has been written about Nassim Taleb’s highly improbable “black swan” events, in a global and interconnected world firms increasingly must contend with the reality of “grey swan” and “white swan” events.
According to risk consultant Geary Sikich in his article, “Are We Seeing the Emergence of More White Swan Events?” the definition of a grey swan is “a highly probable event with three principal characteristics: It is predictable; it carries an impact that can easily cascade…and, after the fact, we shift the focus to errors in judgment or some other human form of causation.” A white swan is a “highly certain event” with “an impact that can easily be estimated” where, once again, after the fact there is a shift to focus on “errors in judgment.”

“Addressing unpredictability requires that we change how Enterprise Risk Management programs operate,” states Sikich. “Forecasts are often based on a ‘static’ moment; frozen in time, so to speak…. Assumptions, on the other hand, depend on situational analysis and the ongoing tweaking via assessment of new information. An assumption can be changed and adjusted as new information becomes available.”

It is clear Sikich’s observations on unpredictability are becoming the new normal in the industry. Firms are investing to fully entrench strong exposure management practices across their entire enterprise to protect against private catastrophes. They are also reaping other benefits from this type of investment: Sophisticated exposure management tools are not just designed to help firms better manage their risks and exposures, but also to identify new areas of opportunity. By gaining a deeper understanding of their global portfolio across all regions and perils, firms are able to make more informed strategic decisions when looking to grow their business.

In specific regions for certain perils, firms can use exposure-based analytics to contextualize their modeled loss results.
This allows them to “what if” on the range of possible deterministic losses so they can stress test their portfolio against historical benchmarks, look for sensitivities and properly set expectations.

Exposure Management Analytics

Best-in-class exposure management analytics is all about challenging assumptions and using disaster scenarios to test how your portfolio would respond if a major event were to occur in a non-modeled peril region. Such analytics can identify the pinch points – potential accumulations both within and across classes of business – that may exist while also offering valuable information on where to grow your business.

Whether it is through M&A or organic growth, having a better grasp of exposure across your portfolio enables strategic decision-making and can add value to a book of business. The ability to analyze exposure across the entire organization and understand how it is likely to impact accumulations and loss potential is a powerful tool for today’s C-suite.

Exposure management tools enable firms to understand the risk in their business today but also how changes can impact their portfolio – whether acquiring a book, moving into new territories or divesting a nonperforming book of business.
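
As a rough illustration of the deterministic scenario testing described above, the following Python sketch applies concentric damage rings around a chosen point and totals the resulting loss by class of business. Everything in it is an assumption made for illustration: the coordinates, ring radii, damage ratios, class names and insured values are hypothetical, and a real disaster scenario would be defined with far more care.

```python
import math
from collections import defaultdict


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points in kilometers."""
    r = 6371.0  # mean Earth radius in km
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def run_scenario(exposures, centre, rings):
    """Apply the damage ratio of the innermost ring containing each exposure.

    `rings` is a list of (radius_km, damage_ratio) sorted by ascending radius.
    Returns scenario loss per class of business.
    """
    by_class = defaultdict(float)
    for e in exposures:
        d = haversine_km(e["lat"], e["lon"], centre[0], centre[1])
        for radius_km, ratio in rings:
            if d <= radius_km:
                by_class[e["line"]] += e["tiv"] * ratio
                break  # only the innermost matching ring applies
    return dict(by_class)


# Hypothetical scenario centred on an arbitrary point.
centre = (0.0, 0.0)
rings = [(1.0, 1.0), (3.0, 0.5)]  # total loss within 1 km, 50 percent out to 3 km
exposures = [
    {"line": "cargo", "lat": 0.005, "lon": 0.0, "tiv": 10_000_000.0},
    {"line": "property", "lat": 0.02, "lon": 0.0, "tiv": 4_000_000.0},
    {"line": "cargo", "lat": 0.0, "lon": 0.02, "tiv": 6_000_000.0},
    {"line": "property", "lat": 0.1, "lon": 0.0, "tiv": 8_000_000.0},  # outside all rings
]
losses = run_scenario(exposures, centre, rings)
total = sum(losses.values())
print(losses, total)
```

Grouping the result by class shows the accumulation within each line, while the overall total shows the accumulation across lines. Rerunning the same function with different centres or ring assumptions is one simple way to "what if" a portfolio in a region that has no probabilistic model.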