The Art of Empowerment

A new app, SiteIQ™ from RMS, intuitively synthesizes complex risk data for a single location, helping underwriters and coverholders to rate and select risks at the touch of a button.
The more holistic a view of risk a property underwriter can get, the better the decisions they are likely to make. In order to build up a detailed picture of risk at an individual location, underwriters or agents at coverholders have, until now, had to request exposure analytics on single risks from their portfolio managers and brokers. They have also had to gather supplementary risk data from a range of external resources, from Catastrophe Risk Evaluation and Standardizing Target Accumulations (CRESTA) zones to look-ups on Google Maps. This takes valuable time, requires multiple user licenses and can generate information that is inconsistent with the underlying modeling data at the portfolio level.
As the senior manager at one managing general agent (MGA) tells EXPOSURE, this misalignment of data means underwriting decisions are not always being made with confidence. This makes the buildup of unwanted risk aggregation in a particular area a very real possibility, invariably resulting in “senior management breathing down my neck.”
With underwriters in desperate need of better multi-peril data at the point of underwriting, RMS has developed an app, SiteIQ, that presents sophisticated modeling information, as well as a view of the portfolio of locations underwritten, in a form that is easily understood and quickly actionable at the point of underwriting. But it also goes further: SiteIQ can integrate with a host of data providers so users can enter any address into the app and quickly see a detailed breakdown of the natural and human-made hazards that may put the property at risk.
In addition to synthesized RMS data, users can also harness third-party risk data to overlay responsive map layers, such as arson, burglary and fire-protection insights, and other indicators that can help the underwriter better understand the characteristics of a building and assess whether it is well maintained or at greater risk.
The app allows the underwriter to generate detailed risk scores for each location in a matter of seconds. It also assigns a simple color coding for each hazard, in line with the insurer’s appetite, from green for acceptable levels of risk all the way to red for risks that require more complex analysis. Crucially, users can view individual locations in the context of the wider portfolio, helping them avoid unwanted risk aggregation and write more consistently to the correct risk appetite.
The app goes a level further by allowing clients to use a sophisticated rules engine that takes into account the client’s underwriting rules. This enables SiteIQ to recommend possible next steps for each location — whether that’s to accept the risk, refer it for further investigation or reject it based on breaching certain criteria.
“We decided to build an app exclusively for underwriters to help them make quick decisions when assessing risks,” explains Shaheen Razzaq, senior director at RMS. “SiteIQ provides a systematic method to identify locations that don’t meet your risk strategy so you can focus on finding the risks that do.
“People are moving toward simple digital tools that synthesize information quickly,” he adds.
“Underwriters tell us they want access to science without having to rely on others and the ability to screen and understand risks within seconds.” And as the underlying data behind the application is based on the same RMS modeling information used at the portfolio level, this guarantees data consistency at all points in the chain. “Deep RMS science, including data from all of our high-definition models, is now being delivered to people upstream, building consistency and understanding,” says Razzaq. SiteIQ has made it simple to build in the customer’s risk appetite and their view of risk. “One of the major advantages of the app is that it is completely configurable by the customer. This could be assigning red-amber-green to perils with certain scores, setting rules for when it should recommend rejecting a location, or integrating a customer’s proprietary data that may have been developed using their underwriting and claims experience — which is unique to each company.” Reporting to internal and external stakeholders is also managed by the app. And above all, says Razzaq, it is simple to use, priced at an accessible level and requires no technical skill, allowing underwriters to make quick, informed decisions from their desktops and tablet devices — and soon their smartphones. In complex cases where deeper analysis is required or when models should be run, working together with cat modelers will still be a necessity. But for most risks, underwriters will be able to quickly screen and filter risk factors, reducing the need to consult their portfolio managers or cat modeling teams. “With underwriting assistants a thing of the past, and the expertise the cat modelers offer being a valuable but finite resource, it’s our responsibility to understand risk at the point of underwriting,” one underwriter explains. 
“As a risk decision-maker, when I need to make an assessment on a particular location, I need access to insights in a timely and efficient manner, so that I can make the best possible decision based on my business,” another underwriter adds.
The app is not intended to replace the deep analysis that portfolio management teams do, but instead to reduce the number of times they are asked for information by their underwriters, giving them more time to focus on the job at hand — helping underwriters assess the most complex of risks.

Bringing Coverholders on Board

Similar efficiencies can be gained on coverholder/delegated-authority business. In the past, there have been issues with coverholders providing coverage that takes a completely different view of risk from that of the syndicate or managing agent providing the capacity. RMS has ensured SiteIQ works for coverholders, to give them access to shared analytics, managing agent rules and an enhanced view of hazards. It is hoped this will both improve underwriting decision-making by the coverholders and strengthen delegated-authority relationships.
Coverholder business continues to grow in the Lloyd’s and company markets, and delegating authorities often worry whether the risks underwritten on their behalf are written with the best possible information available. A better scenario is when the coverholder contacts the delegating authority to ask for advice on a particular location, but receiving multiple referral calls each day from coverholders seeking decisions on individual risks can be a drain on these growing businesses’ resources.
“Delegated authorities obviously want coverholders to write business doing the proper risk assessments, but on the other hand, if the coverholder is constantly pinging the managing agent for referrals, they aren’t a good partner,” says a senior manager at one MGA.
“We can increase profitability if we improve our current workflow, and that can only be done with smart tools that make risk management simpler,” he notes, adding that better risk information tools would allow his company to redeploy staff. A recent Lloyd’s survey found that 55 percent of managing agents are struggling with resources in their delegated-authority teams. And with the Lloyd’s Corporation also seeking to cleanse the market of sub-par performers after swinging to a loss in 2018, any solution that drives efficiency and enables coverholders to make more informed decisions can only help drive up standards. “It was actually an idea that stemmed from our clients’ underwriting coverholder business. If we can equip coverholders with these tools, managing agents will receive fewer phone calls while being confident that the coverholder is writing good business in line with the agreed rules,” says Razzaq. “Most coverholders lack the infrastructure, budget and human resources to run complex models. With SiteIQ, RMS can now offer them deeper analytics, by leveraging expansive model science, in a more accessible way and at a more affordable price.”
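The color coding and accept/refer/reject recommendations described in this article can be illustrated with a small sketch. It is not SiteIQ's actual rules engine or API: the 0-10 score scale, the thresholds, the peril names and the names `Appetite`, `rag_band` and `recommend` are all assumptions made for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Appetite:
    """Hypothetical per-peril thresholds on an assumed 0-10 score scale."""
    amber_from: float  # scores at or above this value are at least amber
    red_from: float    # scores at or above this value are red


def rag_band(score: float, appetite: Appetite) -> str:
    """Map a hazard score to a red/amber/green band."""
    if score >= appetite.red_from:
        return "red"
    if score >= appetite.amber_from:
        return "amber"
    return "green"


def recommend(scores: dict[str, float],
              appetites: dict[str, Appetite],
              max_reds: int = 1) -> tuple[str, dict[str, str]]:
    """Return (recommendation, band per peril).

    Illustrative rule set, not taken from the article: reject when more
    than `max_reds` perils are red, refer when any peril is red or amber,
    otherwise accept.
    """
    bands = {peril: rag_band(score, appetites[peril])
             for peril, score in scores.items()}
    reds = sum(1 for band in bands.values() if band == "red")
    if reds > max_reds:
        return "reject", bands
    if reds > 0 or "amber" in bands.values():
        return "refer", bands
    return "accept", bands


# Example: made-up thresholds and scores for a single location.
appetites = {
    "flood": Appetite(amber_from=4, red_from=7),
    "wind": Appetite(amber_from=5, red_from=8),
    "earthquake": Appetite(amber_from=3, red_from=6),
}
decision, bands = recommend(
    {"flood": 2.0, "wind": 5.5, "earthquake": 1.0}, appetites
)
print(decision, bands)
```

In this example the wind score falls in the amber band, so the location is referred rather than accepted. Keeping the thresholds in data rather than in code mirrors the configurability Razzaq describes, where each customer sets its own bands and rejection rules.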

Opening Pandora's Box

With each new stride in hazard research and science comes the ability to better calculate and differentiate risk.
Efforts by RMS scientists and engineers to better understand liquefaction vulnerability are shedding new light on the secondary earthquake hazard. However, this also makes it more likely that, unless they can charge for the risk, (re)insurance appetite will diminish for some locations while also increasing in other areas. A more differentiated approach to underwriting and pricing is an inevitable consequence of investment in academic research.
Once something has been learned, it cannot be unlearned, explains Robert Muir-Wood, chief research officer at RMS. “In the old days, everybody paid the same for insurance because no one had the means to actually determine how risk varied from location to location, but once you learn how to differentiate risk well, there’s just no going back. It’s like Pandora’s box has been opened.
“At RMS we are neutral on risk,” he adds. “It’s our job to work for all parties and provide the best neutral science-based perspective on risk, whether that’s around climate change in California or earthquake risk in New Zealand. And we and our clients believe that by having the best science-based assessment of risk they can make effective decisions about their risk management.”

Spotting a Gap in the Science

On September 28, 2018, a large and shallow M7.5 earthquake struck Central Sulawesi, Indonesia, triggering a tsunami over 2 meters in height. The shaking and tsunami caused widespread devastation in and around the provincial capital Palu, but according to a report published by the GEER Association, it was liquefaction and landslides that caused thousands of buildings to collapse in a catastrophe that claimed over 4,000 lives.
It was the latest example of a major earthquake that showed that liquefaction — where the ground moves and behaves as if it is a liquid — can be a much bigger driver of loss than previously thought. The Tōhoku Earthquake in Japan during 2011 and the New Zealand earthquakes in Christchurch in 2010 and 2011 were other high-profile examples. The earthquakes in New Zealand caused a combined insurance industry loss of US$22.8-US$26.2 billion, with widespread liquefaction undermining the structural integrity of hundreds of buildings. Liquefaction has been identified by a local engineer as causing 50 percent of the loss.
Now, research carried out by RMS scientists is helping insurers and other stakeholders to better understand the impact that liquefaction can have on earthquake-related losses. It is also helping to pinpoint other parts of the world that are highly vulnerable to liquefaction following an earthquake.
“Before Christchurch we had not appreciated that you could have a situation where a midrise building may be completely undamaged by the earthquake shaking, but the liquefaction means that the building has suffered differential settlement leaving the floors with a slight tilt, sufficient to be declared a 100 percent loss,” explains Muir-Wood.
“We realized for the first time that you actually have to model the damage separately,” he continues. “Liquefaction is completely separate to the damage caused by shaking. But in the past we treated them as much of the same. Separating out the hazards has big implications for how we go about modeling the risk, or identifying other situations where you are likely to have extreme liquefaction at some point in the future.”

The Missing Link

Tim Ancheta, a risk modeler for RMS based in Newark, California, is responsible for developing much of the understanding about the interaction between groundwater depth and liquefaction.
Using data from the 2011 earthquake in Christchurch and boring data from numerous sites across California to calculate groundwater depth, he has been able to identify sites that are particularly prone to liquefaction. “I was hired specifically for evaluating liquefaction and trying to develop a model,” he explains. “That was one of the key goals for my position. Before I joined RMS about seven years back, I was a post-doctoral researcher at PEER — the Pacific Earthquake Engineering Research Center at Berkeley — working on ground motion research. And my doctoral thesis was on the spatial variability of ground motions.”
Joining RMS soon after the earthquakes in Christchurch had occurred meant that Ancheta had access to a wealth of new data on the behavior of liquefaction. For the first time, it showed the significance of groundwater depth in determining where the hazard was likely to occur. Research, funded by the New Zealand government, included a survey of liquefaction observations, satellite imagery, a time series of groundwater levels as well as the building responses. It also included data collected from around 30,000 borings. “All that had never existed on such a scale before,” says Ancheta. “And the critical factor here was they investigated both liquefaction sites and non-liquefaction sites — prior surveys had only focused on the liquefaction sites.”
Whereas the influence of soil type on liquefaction had been reasonably well understood prior to his research, previous studies had not adequately incorporated groundwater depth. “The key finding was that if you don’t have a clear understanding of where the groundwater is shallow or where it is deep, or the transition — which is important — where you go from a shallow to deep groundwater depth, you can’t turn on and off the liquefaction properly when an earthquake happens,” reveals Ancheta.
Ancheta and his team have gone on to collect and digitize groundwater data, geology and boring data in California, Japan, Taiwan and India with a view to gaining a granular understanding of where liquefaction is most likely to occur. “Many researchers have said that liquefaction properties are not regionally dependent, so that if you know the geologic age or types of soils, then you know approximately how susceptible soils can be to liquefaction. So an important step for us is to validate that claim,” he explains. The ability to use groundwater depth has been one of the factors in predicting potential losses that has significantly reduced uncertainty within the RMS suite of earthquake models, concentrating the losses in smaller areas rather than spreading them over an entire region. This has clear implications for (re)insurers and policymakers, particularly as they seek to determine whether there are any “no-go” areas within cities. “There are two general types of liquefaction that are just so severe that no one should build on them,” says Ancheta. “One is lateral spreading where the extensional strains are just too much for buildings. In New Zealand, lateral spreading was observed at numerous locations along the Avon River, for instance.” California is altogether more challenging, he explains. “If you think about all the rivers that flow through Los Angeles or the San Francisco Bay Area, you can try and model them in the same way as we did with the Avon River in Christchurch. We discovered that not all rivers have a similar lateral spreading on either side of the riverbank. Where the river courses have been reworked with armored slopes or concrete linings — essentially reinforcement — it can actually mitigate liquefaction-related displacements.” The second type of severe liquefaction is called “flow slides” triggered by liquefaction, which is where the soil behaves almost like a landslide. 
This was the type of liquefaction that occurred in Central Sulawesi when the village of Balaroa was entirely destroyed by rivers of soil, claiming entire neighborhoods. “It’s a type of liquefaction that is extremely rare,” he adds, “but they can cause tens to hundreds of meters of displacement, which is why they are so devastating. But it’s much harder to predict the soils that are going to be susceptible to them as well as you can for other types of liquefaction surface expressions.”
Ancheta is cognizant of the fact that a no-build zone in a major urban area is likely to be highly contentious from the perspective of homeowners, insurers and policymakers, but insists that now the understanding is there, it should be acted upon. “The Pandora’s box for us in the Canterbury Earthquake Sequence was the fact that the research told us where the lateral spreading would occur,” he says. “We have five earthquakes that produced lateral spreading so we knew with some certainty where the lateral spreading would occur and where it wouldn’t occur. With severe lateral spreading you just have to demolish the buildings affected because they have been extended so much.”

Underwriting With 20:20 Vision

Risk data delivered to underwriting platforms via application programming interfaces (APIs) is bringing granular exposure information and model insights to high-volume risks.
The insurance industry boasts some of the most sophisticated modeling capabilities in the world. And yet the average property underwriter does not have access to the kind of predictive tools that carriers use at a portfolio level to manage risk aggregation, streamline reinsurance buying and optimize capitalization. Detailed probabilistic models are employed on large and complex corporate and industrial portfolios. But underwriters of high-volume business are usually left to rate risks with only a partial view of the risk characteristics at individual locations, and without the help of models and other tools.
“There is still an insufficient amount of data being gathered to enable the accurate assessment and pricing of risks [that] our industry has been covering for decades,” says Talbir Bains, founder and CEO of managing general agent (MGA) platform Volante Global.
Access to insights from models used at the portfolio level would help underwriters make decisions faster and more accurately, improving everything from risk screening and selection to technical pricing. However, accessing this intellectual property (IP) has previously been difficult for higher-volume risks, where to be competitive there simply isn’t the time available to liaise with cat modeling teams to configure full model runs and build a sophisticated profile of the risk. Many insurers invest in modeling post-bind in order to understand risk aggregation in their portfolios, but Ross Franklin, senior director of data product management at RMS, suggests this is too late.
“From an underwriting standpoint, that’s after the horse has bolted — that insight is needed upfront when you are deciding whether to write and at what price.” By not seeing the full picture, he explains, underwriters are often making decisions with a completely different view of risk from the portfolio managers in their own company. “Right now, there is a disconnect in the analytics used when risks are being underwritten and those used downstream as these same risks move through to the portfolio.”

Cut off From the Insight

Historically, underwriters have struggled to access complete information that would allow them to better understand the risk characteristics at individual locations. They must manually gather what risk information they can from various public- and private-sector sources. This helps them make broad assessments of catastrophe exposures, such as FEMA flood zone or distance to coast. These sources often deliver data via web portals or spreadsheets and reports — not into the underwriting systems they use every day. There has been little innovation to increase the breadth, and more importantly, the usability of data at the point of underwriting.
“We have used risk data tools but they are too broad at the hazard level to be competitive — we need more detail,” notes one senior property underwriter, while another simply states: “When it comes to flood, honestly, we’re gambling.” Misaligned and incomplete information prevents accurate risk selection and pricing, leaving the insurer open to negative surprises when underwritten risks make their way onto the balance sheet.
Yet very few data providers burrow down into granular detail on individual risks by identifying what material a property is made of, how many stories it is, when it was built and what it is used for, for instance, all of which can make a significant difference to the risk rating of that individual property. “Vulnerability is critical to accurate underwriting. Hazard alone is not enough. When you put building characteristics together with the hazard information, you form a deeper understanding of the vulnerability of a specific property to a particular hazard. For a given location, a five-story building built from reinforced concrete in the 1990s will naturally react very differently in a storm than a two-story wood-framed house built in 1964 — and yet current underwriting approaches often miss this distinction,” says Franklin. In response to demand for change, RMS developed a Location Intelligence application programming interface (API), which allows preformatted RMS risk information to be easily distributed from its cloud platform via the API into any third-party or in-house underwriting software. The technology gives underwriters access to key insights on their desktops, as well as informing fully automated risk screening and pricing algorithms. The API allows underwriters to systematically evaluate the profitability of submissions, triage referrals to cat modeling teams more efficiently and tailor decision-making based on individual property characteristics. It can also be overlaid with third-party risk information. “The emphasis of our latest product development has been to put rigorous cat peril risk analysis in the hands of users at the right points in the underwriting workflow,” says Franklin. “That’s a capability that doesn’t exist today on high-volume personal lines and SME business, for instance.” Historically, underwriters of high-volume business have relied on actuarial analysis to inform technical pricing and risk ratings. 
“This analysis is not usually backed up by probabilistic modeling of hazard or vulnerability and, for expediency, risks are grouped into broad classes. The result is a loss of risk specificity,” says Franklin. “As the data we are supplying derives from the same models that insurers use for their portfolio modeling, we are offering a fully connected-up, consistent view of risk across their property books, from inception through to reinsurance.”
With additional layers of information at their disposal, underwriters can develop a more comprehensive risk profile for individual locations than before. “In the traditional insurance model, the bad risks are subsidized by the good — but that does not have to be the case. We can now use data to get a lot more specific and generate much deeper insights,” says Franklin. And if poor risks are screened out early, insurers can be much more precise when it comes to taking on and pricing new business that fits their risk appetite. Once risks are accepted, there should be much greater clarity on expected costs should a loss occur. The implications for profitability are clear.

Harnessing Automation

While improved data resolution should drive better loss ratios and underwriting performance, automation can attack the expense ratio by stripping out manual processes, says Franklin. “Insurers want to focus their expensive, scarce underwriting resources on the things they do best — making qualitative expert judgments on more complex risks.” This requires them to shift more decision-making to straight-through processing using sophisticated underwriting guidelines, driven by predictive data insight. Straight-through processing is already commonplace in personal lines and is expected to play a growing role in commercial property lines too.
“Technology has a critical role to play in overcoming this data deficiency through greatly enhancing our ability to gather and analyze granular information, and then to feed that insight back into the underwriting process almost instantaneously to support better decision-making,” says Bains. “However, the infrastructure upon which much of the insurance model is built is in some instances decades old and making the fundamental changes required is a challenge.” Many insurers are already in the process of updating legacy IT systems, making it easier for underwriters to leverage information such as past policy information at the point of underwriting. But technology is only part of the solution. The quality and granularity of the data being input is also a critical factor. Are brokers collecting sufficient levels of data to help underwriters assess the risk effectively? That’s where Franklin hopes RMS can make a real difference. “For the cat element of risk, we have far more predictive, higher-quality data than most insurers use right now,” he says. “Insurers can now overlay that with other data they hold to give the underwriter a far more comprehensive view of the risk.” Bains thinks a cultural shift is needed across the entire insurance value chain when it comes to expectations of the quantity, quality and integrity of data. He calls on underwriters to demand more good quality data from their brokers, and for brokers to do the same of assureds. “Technology alone won’t enable that; the shift is reliant upon everyone in the chain recognizing what is required of them.”

A Model Operation

EXPOSURE explores the rationale, challenges and benefits of adopting an outsourced model function.
Business process outsourcing has become a mainstay of the operational structure of many organizations. In recent years, reflecting new technologies and changing market dynamics, the outsourced function has evolved significantly to fit seamlessly within existing infrastructure. On the modeling front, the exponential increase in data coupled with the drive to reduce expense ratios while enhancing performance levels is making the outsourced model proposition an increasingly attractive one.

The Business Rationale

The rationale for outsourcing modeling activities spans multiple possible origin points, according to Neetika Kapoor Sehdev, senior manager at RMS. “Drivers for adopting an outsourced modeling strategy vary significantly depending on the company itself and their specific ambitions. It may be a new startup that has no internal modeling capabilities, with outsourcing providing access to every component of the model function from day one.”
There is also the flexibility that such access provides, as Piyush Zutshi, director of RMS Analytical Services, points out. “In those initial years, companies often require the flexibility of an outsourced modeling capability, as there is a degree of uncertainty at that stage regarding potential growth rates and the possibility that they may change track and consider alternative lines of business or territories should other areas not prove as profitable as predicted.”
Another big outsourcing driver is the potential to free up valuable internal expertise, as Sehdev explains.
“Often, the daily churn of data processing consumes a huge amount of internal analytical resources,” she says, “and limits the opportunities for these highly skilled experts to devote sufficient time to analyzing the data output and supporting the decision-making process.” This all-too-common data stumbling block for many companies is one that not only affects their ability to capitalize fully on their data, but also to retain key analytical staff. “Companies hire highly skilled analysts to boost their data performance,” Zutshi says, “but most of their working day is taken up by data crunching. That makes it extremely challenging to retain that caliber of staff as they are massively overqualified for the role and also have limited potential for career growth.” Other reasons for outsourcing include new model testing. It provides organizations with a sandbox testing environment to assess the potential benefits and impact of a new model on their underwriting processes and portfolio management capabilities before committing to the license fee. The flexibility of outsourced model capabilities can also prove critical during renewal periods. These seasonal activity peaks can be factored into contracts to ensure that organizations are able to cope with the spike in data analysis required as they reanalyze portfolios, renew contracts, add new business and write off old business. “At RMS Analytical Services,” Zutshi explains, “we prepare for data surge points well in advance. 
We work with clients to understand the potential size of the analytical spike, and then we add a factor of 20 to 30 percent to that to ensure that we have the data processing power on hand should that surge prove greater than expected.”

Things to Consider

Integrating an outsourced function into existing modeling processes can prove a demanding undertaking, particularly in the early stages where companies will be required to commit time and resources to the knowledge transfer required to ensure a seamless integration. The structure of the existing infrastructure will, of course, be a major influencing factor in the ease of transition.
“There are those companies that over the years have invested heavily in their in-house capabilities and developed their own systems that are very tightly bound within their processes,” Sehdev points out, “which can mean decoupling certain aspects is more challenging. For those operations that run much leaner infrastructures, it can often be more straightforward to decouple particular components of the processing.”
RMS Analytical Services has, however, addressed this issue and now works increasingly within the systems of such clients, rather than operating as an external function. “We have the ability to work remotely, which means our teams operate fully within their existing framework. This removes the need to decouple any parts of the data chain, and we can fit seamlessly into their processes.” This also helps address any potential data transfer issues companies may have, particularly given increasingly stringent information management legislation and guidelines.
There are a number of factors that will influence the extent to which a company will outsource its modeling function. Unsurprisingly, smaller organizations and startup operations are more likely to take the fully outsourced option, while larger companies tend to use it as a means of augmenting internal teams — particularly around data engineering.
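The surge planning Zutshi describes, estimating the analytical spike and then adding 20 to 30 percent, is simple arithmetic. A minimal sketch follows, assuming the spike is measured in some workload unit such as accounts processed per week. The unit, the function name `surge_capacity_range` and the example figure of 1,000 are illustrative assumptions, not figures from RMS.

```python
def surge_capacity_range(expected_peak: float,
                         low_buffer: float = 0.20,
                         high_buffer: float = 0.30) -> tuple[float, float]:
    """Return the (low, high) capacity to provision for an expected peak.

    The default buffers reflect the 20 to 30 percent factor quoted in the
    article; the workload unit is whatever the caller uses for the peak.
    """
    if expected_peak < 0:
        raise ValueError("expected_peak must be non-negative")
    return expected_peak * (1 + low_buffer), expected_peak * (1 + high_buffer)


# Hypothetical example: a renewal-season peak of 1,000 accounts per week.
low, high = surge_capacity_range(1000)
print(round(low), round(high))  # 1200 1300
```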
RMS Analytical Services operates various engagement models. Managed services are based on annual contracts governed by volume for data engineering and risk analytics. On-demand services are available for one-off risk analytics projects, renewals support, bespoke analysis such as event response, and new IP adoption. “Modeler down the hall” is a third option that provides ad hoc work, while the firm also offers consulting services around areas such as process optimization, model assessment and transition support.

Making the Transition Work

Starstone Insurance, a global specialty insurer providing a diversified range of property, casualty and specialty insurance to customers worldwide, has been operating an outsourced modeling function for two and a half years.
“My predecessor was responsible for introducing the outsourced component of our modeling operations,” explains Judith Woo, head of exposure management at Starstone. “It was very much a cost-driven decision as outsourcing can provide a very cost-effective model.”
The company operates a hybrid model, with the outsourced team working on most of the pre- and post-bind data processing, while its internal modeling team focuses on the complex specialty risks that fall within its underwriting remit. “The volume of business has increased over the years as has the quality of data we receive,” she explains. “The amount of information we receive from our brokers has grown significantly. A lot of the data processing involved can be automated and that allows us to transfer much of this work to RMS Analytical Services.”
On a day-to-day basis, the process is straightforward, with the Starstone team uploading the data to be processed via the RMS data portal. The facility also acts as a messaging function with the two teams communicating directly.
“In fact,” Woo points out, “there are email conversations that take place directly between our underwriters and the RMS Analytical Service team that do not always require our modeling division’s input.” However, reaching this level of integration and trust has required a strong commitment from Starstone to making the relationship work. “You are starting to work with a third-party operation that does not understand your business or its data processes. You must invest time and energy to go through the various systems and processes in detail,” she adds, “and that can take months depending on the complexity of the business. “You are essentially building an extension of your team, and you have to commit to making that integration work. You can’t simply bring them in, give them a particular problem and expect them to solve it without there being the necessary knowledge transfer and sharing of information.” Her internal modeling team of six has access to an outsourced team of 26, she explains, which greatly enhances the firm’s data-handling capabilities. “With such a team, you can import fresh data into the modeling process on a much more frequent basis, for example. That creates a huge value-add in terms of our catastrophe response capabilities — knowing that we are able to report our latest position has made a big difference on this front.” Creating a Partnership As with any working partnership, the initial phases are critical as they set the tone for the ongoing relationship. “We have well-defined due diligence and transition methodologies,” Zutshi states. “During the initial phase, we work to understand and evaluate their processes. 
We then create a detailed transition methodology, in which we define specific data templates, establish monthly volume loads, lean periods and surge points, and put in place communication and reporting protocols.” At the end, both parties have a fully documented data dictionary with business rules governing how data will be managed, coupled with the option to choose from a repository of 1,000+ validation rules for data engineering. This is reviewed on a regular basis to ensure all processes remain aligned with the practices and direction of the organization.

“Often, the daily churn of data processing consumes a huge amount of internal analytical resources and limits the opportunities to devote sufficient time to analyzing the data output” (Neetika Kapoor Sehdev, RMS)

Service level agreements (SLAs) also form a central tenet of the relationship, as do stringent data compliance procedures. “Robust data security and storage is critical,” says Woo. “We have comprehensive NDAs [non-disclosure agreements] in place that are GDPR compliant to ensure that the integrity of our data is maintained throughout. We also have stringent SLAs in place to guarantee data processing turnaround times. Although, you need to agree on a reasonable time period reflecting the data complexity and also when it is delivered.” According to Sehdev, most SLAs that the analytical team operates require a 24-hour data turnaround, rising to 48-72 hours for more complex data requirements, but clients are able to set priorities as needed. “However, there is no point delivering on turnaround times,” she adds, “if the quality of the data supplied is not fit for purpose. That’s why we apply a number of data quality assurance processes, which means that our first-time accuracy level is over 98 percent.”

The Value-Add

Most clients of RMS Analytical Services have outsourced modeling functions to the division for over seven years, with a number having worked with the team since it launched in 2004. 
The decision to incorporate their services is not taken lightly given the nature of the information involved and the level of confidence required in their capabilities. “The majority of our large clients bring us on board initially in a data-engineering capacity,” explains Sehdev. “It’s the building of trust and confidence in our ability, however, that helps them move to the next tranche of services.” The team has worked to strengthen and mature these relationships, which has enabled them to increase both the size and scope of the engagements they undertake. “With a number of clients, our role has expanded to encompass account modeling, portfolio roll-up and related consulting services,” says Zutshi. “Central to this maturing process is that we are interacting with them daily and have a dedicated team that acts as the primary touch point. We’re also working directly with the underwriters, which helps boost comfort and confidence levels. “For an outsourced model function to become an integral part of the client’s team,” he concludes, “it must be a close, coordinated effort between the parties. That’s what helps us evolve from a standard vendor relationship to a trusted partner.”

Taking Cloud Adoption to the Core

Insurance and reinsurance companies have been more reticent than other business sectors in embracing Cloud technology. EXPOSURE explores why it is time to ditch “the comfort blanket.”

The main benefits of Cloud computing are well-established and include scale, efficiency and cost effectiveness. The Cloud also offers economical access to huge amounts of computing power, ideal to tackle the big data/big analytics challenge. And exciting innovations such as microservices, which allow access to prebuilt, Cloud-hosted algorithms, artificial intelligence (AI) and machine learning applications that can be assembled to build rapidly deployed new services, have the potential to transform the (re)insurance industry. And yet the industry has continued to demonstrate a reluctance in moving its core services onto a Cloud-based infrastructure. While a growing number of insurance and reinsurance companies are using Cloud services (such as those offered by Amazon Web Services, Microsoft Azure and Google Cloud) for nonessential office and support functions, most have been reluctant to consider Cloud for their mission-critical infrastructure. In its research of Cloud adoption rates in regulated industries, such as banking, insurance and health care, McKinsey found, “Many enterprises are stuck supporting both their inefficient traditional data-center environments and inadequately planned Cloud implementations that may not be as easy to manage or as affordable as they imagined.”

No Magic Bullet

It also found that “lift and shift,” where companies attempt to move existing, monolithic business applications to the Cloud expecting them to be “magically endowed with all the dynamic features,” is not enough. “We’ve come up against a lot of that when explaining the difference a Cloud-based risk platform offers,” says Farhana Alarakhiya, vice president of products at RMS. “Basically, what clients are showing us is their legacy offering placed on a new Cloud platform. 
It’s potentially a better user interface, but it’s not really transforming the process.” Now is the time for the market-leading (re)insurers to make that leap and really transform how they do business, she says. “It’s about embracing the new and different and taking comfort in what other industries have been able to do. A lot of Cloud providers are making it very easy to deliver analytics on the Cloud. So, you’ve got the story of agility, scalability, predictability, compliance and security on the Cloud and access to new analytics, new algorithms, use of microservices when it comes to delivering predictive analytics.” This ease to tap into highly advanced analytics and new applications, unburdened from legacy systems, makes the Cloud highly attractive. Hussein Hassanali, managing partner at VTX Partners, a division of Volante Global, commented: “Cloud can also enhance long-term pricing adequacy and profitability driven by improved data capture, historical data analytics and automated links to third-party market information. Further, the ‘plug-and-play’ aspect allows you to continuously innovate by connecting to best-in-class third-party applications.” While moving from a server-based platform to the Cloud can bring numerous advantages, there is a perceived unwillingness to put high-value data into the environment, with concerns over security and the regulatory implications that brings. This includes data protection rules governing whether or not data can be moved across borders. “There are some interesting dichotomies in terms of attitude and reality,” says Craig Beattie, analyst at Celent Consulting. “Cloud-hosting providers in western Europe and North America are more likely to have better security than (re)insurers do in their internal data centers, but the board will often not support a move to put that sort of data outside of the company’s infrastructure. 
“Today, most CIOs and executive boards have moved beyond the knee-jerk fears over security, and the challenges have become more practical,” he continues. “They will ask, ‘What can we put in the Cloud? What does it cost to move the data around and what does it cost to get the data back? What if it fails? What does that backup look like?’” With a hybrid Cloud solution, insurers that want to tap into the scalability and cost efficiencies of a software-as-a-service (SaaS) model, but are unwilling to relinquish their data sovereignty, can develop dedicated resources in which to place customer data alongside the Cloud infrastructure. But while private and hybrid solutions have been touted as a good compromise for insurers nervous about data security, they are also more costly options. The challenge is whether the end solution can match the big Cloud providers with global footprints that have compliance and data sovereignty issues already covered for their customers. “We hear a lot of things about the Internet being cheap, but if you partially adopt the Internet and you’ve got significant chunks of data, it gets very costly to shift those back and forth,” says Beattie.

A Cloud-first approach

Not moving to the Cloud is no longer a viable option long term, particularly as competitors make the transition and competition and disruption change the industry beyond recognition. Given the increasing cost and complexity involved in updating and linking legacy systems and expanding infrastructure to encompass new technology solutions, Cloud is the obvious choice for investment, thinks Beattie. “If you’ve already built your on-premise infrastructure based on classic CPU-based processing, you’ve tied yourself in and you’re committed to whatever payback period you were expecting,” he says. “But predictive analytics and the infrastructure involved is moving too quickly to make that capital investment. So why would an insurer do that? 
In many ways it just makes sense that insurers would move these services into the Cloud. “State-of-the-art for machine learning processing 10 years ago was grids of generic CPUs,” he adds. “Five years ago, this was moving to GPU-based neural network analyses, and now we’ve got ‘AI chips’ coming to market. In an environment like that, the only option is to rent the infrastructure as it’s needed, lest we invest in something that becomes legacy in less time than it takes to install.” Taking advantage of the power and scale of Cloud computing also advances the march toward real-time, big data analytics. Ricky Mahar, managing partner at VTX Partners, a division of Volante Global, added: “Cloud computing makes companies more agile and scalable, providing flexible resources for both power and space. It offers an environment critical to the ability of companies to fully utilize the data available and capitalize on real-time analytics. Running complex analytics using large data sets enhances both internal decision-making and profitability.” As discussed, few (re)insurers have taken the plunge and moved their mission-critical business to a Cloud-based SaaS platform. But there are a handful. Among these first movers are some of the newer, less legacy-encumbered carriers, but also some of the industry’s more established players. The latter includes U.S.-based life insurer MetLife, which announced it was collaborating with IBM Cloud last year to build a platform designed specifically for insurers. Meanwhile Munich Re America is offering a Cloud-hosted AI platform to its insurer clients. “The ice is thawing and insurers and reinsurers are changing,” says Beattie. “Reinsurers [like Munich Re] are not just adopting Cloud but are launching new innovative products on the Cloud.” What’s the danger of not adopting the Cloud? “If your reasons for not adopting the Cloud are security-based, this reason really doesn’t hold up any more. 
If it is about reliability, scalability, remember that the largest online enterprises such as Amazon, Netflix are all Cloud-based,” comments Farhana Alarakhiya. “The real worry is that there are so many exciting, groundbreaking innovations built in the Cloud for the (re)insurance industry, such as predictive analytics, which will transform the industry, that if you miss out on these because of outdated fears, you will damage your business. The industry is waiting for transformation, and it’s progressing fast in the Cloud.”

Pushing Back the Water

Flood Re has been tasked with creating a risk-reflective, affordable U.K. flood insurance market by 2039. Moving forward, data resolution that supports critical investment decisions will be key.

Millions of properties in the U.K. are exposed to some form of flood risk. While exposure levels vary massively across the country, coastal, fluvial and pluvial floods have the potential to impact most locations across the U.K. Recent flood events have dramatically demonstrated this, with properties in perceived low-risk areas being nevertheless severely affected. Before the launch of Flood Re, securing affordable household cover in high-risk areas had become more challenging and, for those impacted by flooding, almost impossible. To address this problem, Flood Re, a joint U.K. Government and insurance-industry initiative, was set up in April 2016 to help ensure available, affordable cover for exposed properties. The reinsurance scheme’s immediate aim was to establish a system whereby insurers could offer competitive premiums and lower excesses to highly exposed households. To date it has achieved considerable success on this front. Of the 350,000 properties deemed at high risk, over 150,000 policies have been ceded to Flood Re. Over 60 insurance brands representing 90 percent of the U.K. home insurance market are able to cede to the scheme. Premiums for households with prior flood claims fell by more than 50 percent in most instances, and an excess of £250 per claim (as opposed to thousands of pounds) was set. While there is still work to be done, Flood Re is now an effective, albeit temporary, barrier to flood risk becoming uninsurable in high-risk parts of the U.K. However, in some respects, this success could be considered low-hanging fruit.

A Temporary Solution

Flood Re is intended as a temporary solution, albeit one with a considerable lifespan. 
By 2039, when the initiative terminates, it must leave behind a flood insurance market based on risk-reflective pricing that is affordable to most households. To achieve this market nirvana, it is also tasked with working to manage flood risks. According to Gary McInally, chief actuary at Flood Re, the scheme must act as a catalyst for this process. “Flood Re has a very clear remit for the longer term,” he explains. “That is to reduce the risk of flooding over time, by helping reduce the frequency with which properties flood and the impact of flooding when it does occur. Properties ought to be presenting a level of risk that is insurable in the future. It is not about removing the risk, but rather promoting the transformation of previously uninsurable properties into insurable properties for the future.” To facilitate this transition to improved property-level resilience, Flood Re will need to adopt a multifaceted approach promoting research and development, consumer education and changes to market practices to recognize the benefit. Firstly, it must assess the potential to reduce exposure levels through implementing a range of resistance (the ability to prevent flooding) and resilience (the ability to recover from flooding) measures at the property level. Second, it must promote options for how the resulting risk reduction can be reflected in reduced flood cover prices and availability requiring less support from Flood Re. According to Andy Bord, CEO of Flood Re: “There is currently almost no link between the action of individuals in protecting their properties against floods and the insurance premium which they are charged by insurers. In principle, establishing such a positive link is an attractive approach, as it would provide a direct incentive for households to invest in property-level protection. “Flood Re is building a sound evidence base by working with academics and others to quantify the benefits of such mitigation measures. 
We are also investigating ways the scheme can recognize the adoption of resilience measures by householders and ways we can practically support a ‘build-back-better’ approach by insurers.”

Modeling Flood Resilience

Multiple studies and reports have been conducted in recent years into how to reduce flood exposure levels in the U.K. However, an extensive review commissioned by Flood Re, spanning over 2,000 studies and reports, found that while they help to clarify potentially appropriate measures, there is a clear lack of data on the suitability of any of these measures to support the needs of the insurance market. A 2014 report produced for the U.K. Environment Agency identified a series of possible packages of resistance and resilience measures. The study was based on the agency’s Long-Term Investment Scenario (LTIS) model and assessed the potential benefit of the various packages to U.K. properties at risk of flooding. The 2014 study is currently being updated by the Environment Agency, with the new study examining specific subsets based on the levels of benefit delivered. Packages considered will encompass resistance and resilience measures spanning both active and passive components. These include waterproof external walls, flood-resistant doors, sump pumps and concrete flooring. The effectiveness of each is being assessed at various levels of flood severity to generate depth damage curves. While the data generated will have a foundational role in helping support outcomes around flood-related investments, it is imperative that the findings of the study undergo rigorous testing, as McInally explains. “We want to promote the use of the best-available data when making decisions,” he says. “That’s why it was important to independently verify the findings of the Environment Agency study. 
If the findings differ from studies conducted by the insurance industry, then we should work together to understand why.” To assess the results of key elements of the study, Flood Re called upon the flood modeling capabilities of RMS, and its Europe Inland Flood High-Definition (HD) Models, which provide the most comprehensive and granular view of flood risk currently available in Europe, covering 15 countries including the U.K. The models enable the assessment of flood risk and the uncertainties associated with that risk right down to the individual property and coverage level. In addition, it provides a much longer simulation timeline, capitalizing on advances in computational power through Cloud-based computing to span 50,000 years of possible flood events across Europe, generating over 200,000 possible flood scenarios for the U.K. alone. The model also enables a much more accurate and transparent means of assessing the impact of permanent and temporary flood defenses and their role to protect against both fluvial and pluvial flood events. Putting Data to the Test “The recent advances in HD modeling have provided greater transparency and so allow us to better understand the behavior of the model in more detail than was possible previously,” McInally believes. “That is enabling us to pose much more refined questions that previously we could not address.” While the Environment Agency study provided significant data insights, the LTIS model does not incorporate the capability to model pluvial and fluvial flooding at the individual property level, he explains. RMS used its U.K. Flood HD model to conduct the same analysis recently carried out by the Environment Agency, benefiting from its comprehensive set of flood events together with the vulnerability, uncertainty and loss modeling framework. This meant that RMS could model the vulnerability of each resistance/resilience package for a particular building at a much more granular level. 
RMS took the same vulnerability data used by the Environment Agency, which is relatively similar to the one used within the model, and ran this through the flood model, to assess the impact of each of the resistance and resilience packages against a vulnerability baseline to establish their overall effectiveness. The results revealed a significant difference between the model numbers generated by the LTIS model and those produced by the RMS Europe Inland Flood HD Models. Since hazard data used by the Environment Agency did not include pluvial flood risk, combined with general lower resolution layers than used in the RMS model, the LTIS study presented an overconcentration and hence overestimation of flood depths at the property level. As a result, the perceived benefits of the various resilience and resistance measures were underestimated — the potential benefits attributed to each package in some instances were almost double those of the original study. The findings can show how using a particular package across a subset of about 500,000 households in certain specific locations, could achieve a potential reduction in annual average losses from flood events of up to 40 percent at a country level. This could help Flood Re understand how to allocate resources to generate the greatest potential and achieve the most significant benefit. A Return on Investment? There is still much work to be done to establish an evidence base for the specific value of property-level resilience and resistance measures of sufficient granularity to better inform flood-related investment decisions. “The initial indications from the ongoing Flood Re cost-benefit analysis work are that resistance measures, because they are cheaper to implement, will prove a more cost-effective approach across a wider group of properties in flood-exposed areas,” McInally indicates. 
“However, in a post-repair scenario, the cost-benefit results for resilience measures are also favorable.” However, he is wary about making any definitive statements at this early stage based on the research to date. “Flood by its very nature includes significant potential ‘hit-and-miss factors’,” he points out. “You could, for example, make cities such as Hull or Carlisle highly flood resistant and resilient, and yet neither location might experience a major flood event in the next 30 years while the Lake District and West Midlands might experience multiple floods. So the actual impact on reducing the cost of flooding from any program of investment will, in practice, be very different from a simple modeled long-term average benefit. Insurance industry modeling approaches used by Flood Re, which includes the use of the RMS Europe Inland Flood HD Models, could help improve understanding of the range of investment benefit that might actually be achieved in practice.”
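
The gap McInally describes, between a modeled long-term average benefit and what any single 30-year period might deliver, can be illustrated with a toy simulation. The sketch below is not the RMS Europe Inland Flood HD Models or the Flood Re cost-benefit analysis. The 2 percent annual flood probability, the exponential loss distribution, the £1 million mean loss and the flat 40 percent mitigation factor are all assumptions chosen purely for illustration.

```python
import random
import statistics

def simulate_annual_losses(years, flood_prob, mean_loss, seed):
    """Return one loss per simulated year: zero in dry years, an
    exponentially distributed loss in flood years (toy assumption)."""
    rng = random.Random(seed)
    losses = []
    for _ in range(years):
        if rng.random() < flood_prob:
            losses.append(rng.expovariate(1.0 / mean_loss))
        else:
            losses.append(0.0)
    return losses

def window_averages(losses, window):
    """Average annual loss for each consecutive, non-overlapping window."""
    return [
        statistics.fmean(losses[i:i + window])
        for i in range(0, len(losses) - window + 1, window)
    ]

# Hypothetical inputs: 50,000 simulated years for a single location.
losses = simulate_annual_losses(
    years=50_000, flood_prob=0.02, mean_loss=1_000_000.0, seed=1
)
# Assumed mitigation effect: every flood loss is reduced by 40 percent.
mitigated = [0.6 * loss for loss in losses]

# Long-term view: average annual loss (AAL) with and without mitigation.
aal = statistics.fmean(losses)
aal_mitigated = statistics.fmean(mitigated)
long_term_benefit = aal - aal_mitigated

# Practical view: the benefit realized in each separate 30-year period.
benefit_by_window = [
    before - after
    for before, after in zip(
        window_averages(losses, 30), window_averages(mitigated, 30)
    )
]

print(f"Long-term average annual benefit: {long_term_benefit:,.0f}")
print(f"Lowest 30-year average benefit:   {min(benefit_by_window):,.0f}")
print(f"Highest 30-year average benefit:  {max(benefit_by_window):,.0f}")
```

Under these assumptions, many 30-year windows contain no flood at all and so realize no benefit, while others realize several times the long-term average. That spread is the “hit-and-miss” effect the quote refers to.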

Bringing Clarity to Slab Claims

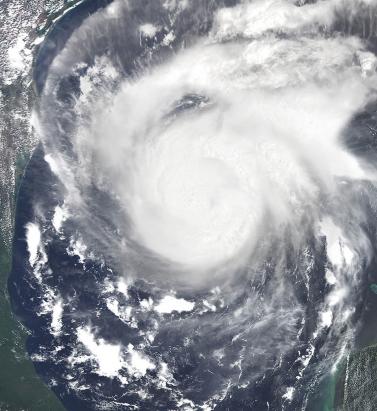

How will a new collaboration between a major Texas insurer, RMS, Accenture and Texas Tech University provide the ability to determine with accuracy the source of slab claim loss? The litigation surrounding “slab claims” in the U.S. in the aftermath of a major hurricane has long been an issue within the insurance industry. When nothing is left of a coastal property but the concrete slab on which it was built, how do claims handlers determine whether the damage was predominantly caused by water or wind? The decision that many insurers take can spark protracted litigation, as was the case following Hurricane Ike, a powerful storm that caused widespread damage across the state after it made landfall over Galveston in September 2008. The storm had a very large footprint for a Category 2 hurricane, with sustained wind speeds of 110 mph and a 22-foot storm surge. Five years on, litigation surrounding how slab claim damage had been wrought rumbled on in the courts. Recognizing the extent of the issue, major coastal insurers knew they needed to improve their methodologies. It sparked a new collaboration between RMS, a major Texas insurer, Accenture and Texas Tech University (TTU). And from this year, the insurer will be able to utilize RMS data, hurricane modeling methodologies, and software analyses to track the likelihood of slab claims before a tropical cyclone makes landfall and document the post-landfall wind, storm surge and wave impacts over time. The approach will help determine the source of the property damage with greater accuracy and clarity, reducing the need for litigation post-loss, thus improving the overall claims experience for both the policyholder and insurer. To provide super accurate wind field data, RMS has signed a contract with TTU to expand a network of mobile meteorological stations that are ultimately positioned in areas predicted to experience landfall during a real-time event. 
“Our contract is focused on Texas, but they could also be deployed anywhere in the southern and eastern U.S.,” says Michael Young, senior director of product management at RMS. “The rapidly deployable weather stations collect peak and mean wind speed characteristics and transmit via the cell network the wind speeds for inclusion into our tropical cyclone data set. This is in addition to a wide range of other data sources, which this year includes 5,000 new data stations from our partner Earth Networks.” The storm surge component of this project utilizes the same hydrodynamic storm surge model methodologies embedded within the RMS North Atlantic Hurricane Models to develop an accurate view of the timing, extent and severity of storm surge and wave-driven hazards post-landfall. Similar to the wind field modeling process, this approach will also be informed by ground-truth terrain and observational data, such as high-resolution bathymetry data, tide and stream gauge sensors and high-water marks. “The whole purpose of our involvement in this project is to help the insurer get those insights into what’s causing the damage,” adds Jeff Waters, senior product manager at RMS. “The first eight hours of the time series at a particular location might involve mostly damaging surge, followed by eight hours of damaging wind and surge. So, we’ll know, for instance, that a lot of that damage that occurred in the first eight hours was probably caused by surge. It’s a very exciting and pretty unique project to be part of.”
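
The time-series reasoning Waters describes can be sketched in a few lines. This is a minimal illustration, not the RMS methodology: the `HourlyHazard` record, the damage thresholds and the sample values are all hypothetical, and a real analysis would draw on modeled wind fields, surge depths and building vulnerability.

```python
from dataclasses import dataclass

@dataclass
class HourlyHazard:
    hour: int          # hours since the start of the time series
    wind_mph: float    # peak wind speed at the location
    surge_ft: float    # surge depth at the location

# Hypothetical thresholds above which a hazard is treated as damaging.
WIND_DAMAGE_MPH = 74.0
SURGE_DAMAGE_FT = 3.0

def classify(obs):
    """Label one hour by which hazards exceeded their damage threshold."""
    wind = obs.wind_mph >= WIND_DAMAGE_MPH
    surge = obs.surge_ft >= SURGE_DAMAGE_FT
    if wind and surge:
        return "wind+surge"
    if surge:
        return "surge"
    if wind:
        return "wind"
    return "none"

def summarize(series):
    """Count the hours that fall under each hazard label."""
    counts = {}
    for obs in series:
        label = classify(obs)
        counts[label] = counts.get(label, 0) + 1
    return counts

# Illustrative series: eight hours of damaging surge only, followed by
# eight hours of damaging wind and surge together.
series = (
    [HourlyHazard(h, wind_mph=50.0, surge_ft=6.0) for h in range(8)]
    + [HourlyHazard(h, wind_mph=95.0, surge_ft=8.0) for h in range(8, 16)]
)
summary = summarize(series)
print(summary)
```

With a record like this for each location, damage known to have occurred in the first eight hours could plausibly be attributed to surge, since wind had not yet reached the assumed damaging threshold.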