The Future of Risk Management

(Re)insuring new and emerging risks requires data and, ideally, a historical loss record upon which to manage an exposure. But what does the future of risk management look like when so many of these exposures are intangible or unexpected?

Sudden and dramatic breakdowns become more likely in a highly interconnected and increasingly polarized world, warns the “Global Risks Report 2019” from the World Economic Forum (WEF). “Firms should focus as much on risk response as on risk mitigation,” advises John Drzik, president of global risk and digital at Marsh, one of the report sponsors. “There’s an inevitability to having a certain number of shock events, and firms should focus on how to respond to fast-moving events with a high degree of uncertainty.”

Macrotrends such as climate change, urbanization and digitization are combining in ways that make major claims more impactful when things go wrong.

But are all low-probability/high-consequence events truly beyond our ability to identify and manage? Dr. Gordon Woo, catastrophist at RMS, believes that in an age of big data and advanced analytics, information is available that can help corporates, insurers and reinsurers understand the plethora of new and emerging risks they face.

“The sources of emerging risk insight are out there,” says Woo. “The challenge is understanding the significance of the information available and ensuring it is used to inform decision-makers.”

However, it is not always possible to gain access to the insight needed. “Some of the near-miss data regarding new software and designs may be available online,” says Woo. “For example, with the Boeing 737 Max 8, there were postings by pilots where control problems were discussed prior to the Lion Air disaster of October 2018. Equally, intelligence information on terrorist plots may be available from online terrorist chatter. But typically, it is much harder for anyone other than security agencies to access this information.
“Peter Drucker [consultant and author] was right when he said: ‘If you can’t measure it, you can’t improve it,’” he adds. “And this is the issue for (re)insurers when it comes to emerging risks. There is currently not a lot of standardization between risk compliance systems and the way the information is gathered, and corporations are still very reluctant to give information away to insurers.”

The Intangibles Protection Gap

While traditional physical risks, such as fire and flood, are well understood, well modeled and widely insured, new and emerging risks facing businesses and communities are increasingly intangible, and risk transfer solutions for them are less widely available.

While there is an important upside to many technological innovations, for example, there are also downsides that are not yet fully understood or even recognized, thinks Robert Muir-Wood, chief research officer of science and technology at RMS.

“Last year’s Typhoon Jebi caused coastal flooding in the Kansai region of Japan,” he says. “There were a lot of cars on the quayside close to where the storm made landfall and many of these just caught on fire. It burnt out a large number of cars that were heading for export.

“The reason for the fires was the improved capability of batteries in cars,” he explains. “And when these batteries are immersed in water they burst into flames. So, with this technology you’ve created a whole new peril.

“As new technology emerges, new risks emerge,” he concludes. “And it’s not as though the old risks go away. They sort of morph and they always will.
Clearly the more that software becomes a critical part of how things function, then there is more of an opportunity for things to go wrong.”

From nonphysical-damage business interruption and reputational harm to the theft of intellectual property and cyber data breaches, the ability of underwriters to get a handle on these risks and potential losses is one of the industry’s biggest modern-day challenges.

The dearth of products and services for esoteric commercial risks is known as the “intangibles protection gap,” explains Muir-Wood. “There is this question within the whole span of risk management of organizations, of which an increasing amount is intangible, whether they will be able to buy insurance for those elements of their risk that they feel they do not have control over.”

While the (re)insurance industry is responding with new products and services geared toward emerging risks, such as cyber, some organizational perils, such as reputational risk, are best addressed by instilling the right risk management culture and setting the tone from the top, thinks Wayne Ratcliffe, head of risk management at SCOR.

“Enterprise risk management is about taking a holistic view of the company and having multidisciplinary teams brainstorming together,” he says. “It’s a tendency of human nature to work in silos in which everyone has their own domain to protect and to work on, but working across an organization is the only way to carry out proper risk management.

“There are many causes and consequences of reputational risk, for instance,” he continues. “When I think of past examples where things have gone horribly wrong (and there are so many of them, from Deepwater Horizon to Enron), in certain cases there were questionable ethics and a failure in risk management culture. Companies have to set the tone at the top and then ensure it has spread across the whole organization.
This requires constant checking and vigilance.”

The best way of checking that risk management procedures are being adhered to is by being really close to the ground, thinks Ratcliffe. “We’re moving too far into a world of emails and communication by Skype. What people need to be doing is talking to each other in person and cross-checking facts. Human contact is essential to understanding the risk.”

Spotting the Next “Black Swan”

What of future black swans? Like Donald Rumsfeld’s “unknown unknowns,” so-called black swan events are typically those that come from left field. They take everyone by surprise (although they are often explained away in hindsight) and have an impact that cascades through economic, political and social systems in ways that were previously unimagined, with severe and widespread consequences.

“As (re)insurers we can look at past data, but you have to be aware of the trends and forces at play,” says Ratcliffe. “You have to be aware of the source of the risk. In ‘The Big Short’ by Michael Lewis, the only person who really understood the impending subprime collapse was the one who went house-to-house asking people if they were having trouble paying their mortgages, which they were.

“Sometimes you need to go out of the bounds of data analytics into a more intuition-based way of picking up signals where there is no data,” he continues. “You need imagination and to come up with scenarios that can happen based on a group of experts talking together and debating how exposures can connect and interconnect.

“It’s a little dangerous to base everything on big data measurement and statistics, and at SCOR we talk about the ‘art and science of risk,’” he adds. “And science is more than statistics. We often need hard science behind what we are measuring.
A single-point estimate of the measure is not sufficient. We also need confidence intervals corresponding to a range of probabilities.”

In its “Global Risks Report 2019,” the WEF examines a series of “what-if” future shocks and asks whether its scenarios, while not predictions, are at least “a reminder of the need to think creatively about risk and to expect the unexpected.” The WEF believes future shocks could come about as a result of advances in technology, the depletion of global resources and other major macrotrends clashing in new and extreme ways.

“The world is becoming hyperconnected,” says Ratcliffe. “People are becoming more dependent on social media, which is even shaping political decisions, and organizations are increasingly connected via technology and the internet of things. New technologies are creating more opportunities but they’re also making society more vulnerable to sophisticated cyberattacks. We have to think about the systemic nature of it all.”

As governments are pressured to manage the effects of climate change, for instance, will the use of weather manipulation tools, such as cloud seeding to induce or suppress rainfall, result in geopolitical conflict? Could biometrics and AI that recognize and respond to emotions be used to further polarize and/or control society? And will quantum computing render digital cryptography obsolete, leaving sensitive data exposed?

The risk of cyberattack was the No. 1 risk identified by business leaders in virtually all advanced economies in the WEF’s “Global Risks Report 2019,” with concern about both data breaches and direct attacks on company infrastructure causing business interruption. The report found that cyberattacks continue to pose a risk to critical infrastructure, noting the attack in July 2018 that compromised many U.S. power suppliers. In the attack, state-backed Russian hackers gained remote access to utility-company control rooms in order to carry out reconnaissance.
However, according to the Department of Homeland Security, the attackers were in a position to go further and trigger widespread blackouts across the U.S.

Woo points to the cyberattack on Norsk Hydro, the company responsible for a massive bauxite spill at an aluminum plant in Brazil last year, which was carried out with a targeted strain of ransomware known as “LockerGoga.” Apparently seeking revenge for the environmental damage caused, the hackers gained access to the company’s IT infrastructure, including the control systems at its aluminum smelting plants. He thinks a similar attack by state-sponsored actors could cause significantly greater disruption if the attackers’ motivation were simply to damage industrial control systems.

Woo thinks cyber risk has significant potential to cause a major global shock due to the interconnected nature of global IT systems. “WannaCry was probably the closest we’ve come to a cyber 9/11,” he explains. “If the malware had been released earlier, say in January 2017 before the vulnerability was patched, losses would have been an order of magnitude higher, as the malware would have spread like measles with no herd immunity. The release of a really dangerous cyber weapon with the right timing could be extremely powerful.”

In Total Harmony

Karen White joined RMS as CEO in March 2018, followed closely by Moe Khosravy, general manager of software and platform activities. EXPOSURE talks to both, along with Mohsen Rahnama, chief risk modeling officer and one of the firm’s longest-standing team members, about their collective vision for the company, innovation, transformation and technology in risk management.

Karen and Moe, what was it that sparked your interest in joining RMS?

Karen: What initially got me excited was the strength of the hand we have to play here and the fact that the insurance sector is at a very interesting time in its evolution. The team is fantastic: one of the most extraordinary groups of talent I have come across. At our core, we have hundreds of Ph.D.s, superb modelers and scientists, surrounded by top engineers and computer and data scientists. I firmly believe no other modeling firm holds a candle to the quality of leadership and the depth and breadth of intellectual property at RMS. We are years ahead of our competitors in terms of the products we deliver.

Moe: For me, what can I say? When Karen calls with an idea it’s very hard to say no! However, when she called about the RMS opportunity, I had never considered working in the insurance sector. My eureka moment came when I looked at the industry’s challenges and the technology available to tackle them. I realized that this wasn’t simply a cat modeling property insurance play, but was much more expansive. If you generalize the notion of risk and loss, the potential of what we are working on and the value to the insurance sector become much greater. I thought about the technologies entering the sector and how new developments on the AI [artificial intelligence] and machine learning front could vastly expand current analytical capabilities. I also began to consider how such technologies could transform the sector’s cost base. In the end, the decision to join RMS was pretty straightforward.
Karen: The industry itself is reaching a eureka moment, which is precisely where I love to be. It is at a transformational tipping point: the technology is available to enable this transformation and the industry is compelled to undertake it. I’ve always sought to enter markets at this critical point. When I joined Oracle in the 1990s, the business world was at a transformational point, moving from client-server computing to Internet computing. That shift brought about many of the huge changes we have seen in business infrastructure since, so I had a bird’s-eye view of a truly extraordinary market shift coupled with a technology shift. That experience made me realize how an architectural shift coupled with a market shift can create immense forward momentum. If the technology can’t support the vision, or if the challenges or opportunities aren’t compelling enough, then you won’t see that level of change occur.

Do (re)insurers recognize the need to change and are they willing to make the digital transition required?

Karen: I absolutely think so. There are incredible market pressures to become more efficient, assess risks more effectively, improve loss ratios, achieve better business outcomes and introduce more beneficial ways of capitalizing risk. You also have numerous new opportunities emerging: new perils, new products and new ways of delivering those products, all with huge potential to fuel growth. These can be accelerated not just by market dynamics but also by a smart embrace of new technologies and digital transformation.

Mohsen: Twenty-five years ago, when we began building models at RMS, practitioners simply had no effective means of assessing risk. So, the adoption of model technology was a relatively simple step.
Today, the extreme levels of competition are making the ability to differentiate risk at a much more granular level a critical factor, and our model advances are enabling that. In tandem, many Silicon Valley technologies have the potential to greatly enhance efficiency, improve processing power, minimize cost, boost speed to market, enable the development of new products and positively impact every part of the insurance workflow. Data is the primary asset of our industry: it is the source of every risk decision, and every risk is itself an opportunity. The amount of data is increasing exponentially, and we can now capture more information much faster than ever before and analyze it with much greater accuracy to enable better decisions. It is clear that the potential is there to change our industry in a positive way.

The industry is renowned for being risk averse. Is it ready to adopt the new technologies that this transformation requires?

Karen: The risk of doing nothing, given current market and technology developments, is far greater than that of embracing emerging tech to enable new opportunities and improve cost structures, even though there are bound to be some bumps in the road. I understand the change management can be daunting. But many of the technologies RMS is leveraging to help clients improve price performance and model execution are not new. AI, the Cloud and machine learning are already tried and trusted, and the insurance market will benefit from the lessons other industries have learned as it integrates these technologies.

Moe: Making the necessary changes will challenge the perceived risk-averse nature of the insurance market, as it will require new ground to be broken.
However, if we can clearly show how these capabilities can help companies be measurably more productive and achieve demonstrable business gains, then the market will be more receptive to new user experiences.

Mohsen: The performance gains that technology is introducing are immense. A few years ago, we were using computational fluid dynamics to model storm surge. We were conducting the analysis on CPU [central processing unit] microprocessors, which took weeks. With the advent of GPU [graphics processing unit] microprocessors, we can carry out the same level of analysis in hours. Add the supercomputing capabilities possible in the Cloud, which have enabled us to deliver HD-resolution models to our clients (in particular for flood, which requires a high-gradient hazard model to differentiate risk effectively), and both productivity and price performance have improved significantly.

Is an industry used to incremental change able to accept the stepwise change technology can introduce?

Karen: Radical change often happens in increments. The change from client-server to Internet computing did not happen overnight; it was an incremental change that came in waves and enabled powerful market shifts. Amazon is a good example of market leadership born of digital transformation. It launched in 1994 as an online bookstore in a mature, relatively sleepy industry. It evolved into broad e-commerce, and evolved again with the introduction of Cloud services when it launched AWS [Amazon Web Services] 12 years ago. AWS is now a US$17 billion business that has disrupted the computer industry and accounts for a huge portion of Amazon’s profit. Having disrupted the retail sector, Amazon has grown from nothing to total revenue of US$178 billion over 25 years. Retail consumption has changed dramatically, but I can still go shopping on London’s Oxford Street, and about 90 percent of retail is still offline.
My point is, things do change incrementally, but standing still is not a great option when technology-fueled market dynamics are underway. Getting out in front can be enormously rewarding and create new leadership. However, we must recognize that the way we introduce technology must be driven by the challenges it is meant to address. I am already hearing people talk about developments such as AI, machine learning and neural networks as if they are fairy dust to sprinkle on the industry’s problems. That is not how this transformation process works.

How are you approaching the challenges that this transformation poses?

Karen: At RMS, we start by understanding the challenges and opportunities from our customers’ perspectives and then look at what value we can bring that we have not brought before. Only then can we look at how we deliver the required solution.

Moe: It’s about having an “outward-in” perspective. We have amazing technology expertise across modeling, computer science and data science, but to deploy that effectively we must listen to what the market wants. We know that many companies are operating multiple disparate systems within their networks that have simply been built upon again and again. So, we must look at harnessing technology to change that, because where you have islands of data, applications and analysis, you lose fidelity, time and insight, and costs rise.

Moe: While there is a commonality of purpose spanning insurers, reinsurers and brokers, every organization is different. At RMS, we must incorporate that into our software and our platforms. There is no one-size-fits-all, and we can’t force everyone to go down the same analytical path. That’s why we are adopting a more modular approach to our software. Whether the focus is portfolio management or underwriting decision-making, it’s about choosing those modules that best meet your needs.
Mohsen: When constructing models, we focus on how we can bring the right technology to solve the specific problems our clients have. This requires a huge amount of critical thinking to bring the best solution to market.

How strong is the talent base that is helping to deliver this level of capability?

Mohsen: RMS is extremely fortunate to have such a fantastic array of talent. This caliber of expertise is what helps set us apart from competitors, enabling us to push boundaries and advance our modeling capabilities at the speed we are. Recently, we have set up teams of modelers and data and computer scientists tasked with developing a range of innovations. It’s fantastic having this depth of talent, and when you create an environment in which innovative minds can thrive you quickly reap the rewards, and that is what we are seeing. In fact, I have seen more innovation at RMS in the last six months than over the past several years.

Moe: I would add, though, that the sector is not yet attracting the kind of talent seen at firms such as Google, Microsoft or Amazon, and it needs to. These companies are either large-scale customer-service providers capitalizing on big data platforms and leading-edge machine-learning techniques to achieve the scale, simplicity and flexibility their customers demand, or enterprises actually building these core platforms themselves. When you bring new blood into an organization or industry, you generate new ideas that challenge current thinking and practices, from the user interface to the underlying platform or the cost of performance. We need to do a better PR job as a technology sector. The best and brightest people in most cases just want the greatest problems to tackle, and we have a ton of those in our industry.
Karen: The critical component of any successful team is a balance of complementary skills and capabilities focused on having a high impact on an interesting set of challenges. If you get that dynamic right, then that combination of different lenses, correctly aligned, brings real clarity to what you are trying to achieve and how to achieve it. I firmly believe at RMS we have that balance. If you look at the skills, experience and backgrounds of Moe, Mohsen and myself, for example, they couldn’t be more different. Bringing Moe and Mohsen together, however, has quickly sparked great and different thinking. They work incredibly well together despite their vastly different technical focus and career paths. In fact, we refer to them as the “Moe-Moes” and recently presented them with matching inscribed giant chain necklaces at an all-hands meeting.

Moe: Some of the ideas we generate during our discussions and with other members of the modeling team are incredibly powerful. What’s possible here at RMS we would never have been able to even consider before we started working together.

Mohsen: Moe’s vast experience of building platforms at companies such as HP, Intel and Microsoft is a great addition to our capabilities. Karen brings a history of innovation and building market platforms with the discipline and the focus we need to deliver on the vision we are creating. The huge amount we have been able to achieve in the months that she has been at RMS is a testament to the clear direction we now have.

Karen: While we do come from very different backgrounds, we share a very well-defined culture. We care deeply about our clients and their needs. We challenge ourselves every day to innovate to meet those needs, while at the same time maintaining a hell-bent pragmatism to ensure we deliver.

Mohsen: To achieve what we have set out to achieve requires harmony.
It requires a clear vision, the scientific know-how, the drive to learn more, the ability to innovate and the technology to deliver, all working in harmony.

Career Highlights

Karen White is an accomplished leader in the technology industry, with a 25-year track record of leading, innovating and scaling global technology businesses. She started her career in Silicon Valley in 1993 as a senior executive at Oracle. Most recently, Karen was president and COO at Addepar, a leading fintech company serving the investment management industry with data and analytics solutions.

Moe Khosravy has over 20 years of software innovation experience delivering enterprise-grade products and platforms differentiated by data science, powerful analytics and applied machine learning to help transform industries. Most recently he was vice president of software at HP Inc., supporting hundreds of millions of connected devices and clients.

Mohsen Rahnama leads a global team of accomplished scientists, engineers and product managers responsible for the development and delivery of all RMS catastrophe models and data. During his 20 years at RMS, he has been a dedicated, hands-on leader of the largest team of catastrophe modeling professionals in the industry.

A Risk-Driven Business

Following Tower Insurance’s switch to risk-based pricing in New Zealand, EXPOSURE examines how recent market developments may herald a more fundamental industry shift.

The ramifications of the Christchurch earthquakes of 2010-11 continue to reverberate through the New Zealand insurance market. The country’s Earthquake Commission (EQC), which provides government-backed natural disaster insurance, is forecast to have paid around NZ$11 billion (US$7.3 billion) by the time it settles its final claim.

The devastating losses exposed significant shortfalls in the country’s insurance market. These included major deficiencies in insurer data, gaps in portfolio management and expansive policy wordings that left carriers exposed to numerous unexpected losses.

Since then, much has changed. Policy terms have been tightened, restrictions have been introduced on coverage and concerted efforts have been made to bolster databases. On July 1, 2019, the EQC increased the cap on the government-mandated residential cover it provides to all householders from NZ$100,000 (US$66,000), a figure set in 1993, to NZ$150,000. This is a significant increase, but it remains well below both the average New Zealand house price, which stood at NZ$669,565 as of December 2017, and the average rebuild cost of NZ$350,000. The EQC has also removed contents coverage.

However, another development has the potential to have a much more profound impact on the market.

Risk-Based Pricing

In March 2018, New Zealand insurer Tower Insurance announced a move to risk-based pricing for home insurance. The aim is to ensure premium levels are commensurate with individual property risk profiles, with those in highly exposed areas facing a price rise on the earthquake component of their coverage.
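The redistribution that risk-based pricing implies can be illustrated with a toy pool. This is a purely hypothetical sketch, not Tower’s or RMS’s method: the pool, the expected-loss figures and the names `Home`, `flat_rate` and `risk_based_changes` are invented for illustration, and it assumes the risk-based earthquake premium is simply each home’s modeled expected annual loss, with no expense, reinsurance or profit loading. Under a flat (cross-subsidized) rate every home pays the pool average; under risk-based pricing each pays its own expected loss.

```python
from dataclasses import dataclass


@dataclass
class Home:
    label: str
    expected_quake_loss: float  # hypothetical modeled annual earthquake loss, NZ$


def flat_rate(pool: list[Home]) -> float:
    """Flat-rated (cross-subsidized) pricing: every home pays the pool average."""
    return sum(h.expected_quake_loss for h in pool) / len(pool)


def risk_based_changes(pool: list[Home]) -> dict[str, tuple[float, float]]:
    """Change per label when moving from the flat rate to risk-based pricing.

    Assumes each home's risk-based premium equals its own expected loss
    (no loadings). Returns {label: (change in NZ$, change as % of flat rate)}.
    """
    flat = flat_rate(pool)
    changes = {}
    for h in pool:
        delta = h.expected_quake_loss - flat
        changes[h.label] = (delta, 100.0 * delta / flat)
    return changes


# Hypothetical pool: 1 highly exposed home and 49 homes in a quieter region.
pool = [Home("high hazard", 600.0)] + [Home("low hazard", 100.0) for _ in range(49)]

print(f"flat earthquake premium: NZ${flat_rate(pool):.2f}")
for label, (delta, pct) in risk_based_changes(pool).items():
    print(f"{label}: {delta:+.2f} NZ$ ({pct:+.1f}%)")
```

With these invented numbers, the flat premium is NZ$110; the exposed home’s earthquake premium rises by NZ$490 (about +445 percent) while each of the other 49 falls by NZ$10 (about -9 percent). Both schemes collect the same NZ$5,500 in total, so a large percentage rise for a few is funded by a small decline for many.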
Describing the shift as a “fairer and more equitable way of pricing risk,” Tower CEO Richard Harding says this was the “right thing to do” both for the “long-term benefit of New Zealand” and for customers, with risk-based pricing “the fairest way to distribute the costs we face as an insurer.”

The move has generated much media coverage, with stories highlighting instances of triple-digit percentage hikes in earthquake-prone regions such as Wellington. Far fewer column inches, however, have been devoted to the marginal declines available to the vast majority of households in less seismically active regions, as the high-risk earthquake burden on their premiums is reduced.

A key factor in Tower’s decision was the increasing quality and granularity of the underwriting data at its disposal. “Tower has always focused on the quality of its data and has invested heavily in ensuring it has the highest-resolution information available,” says Michael Drayton, senior risk modeler for RMS, based in New Zealand.

In fact, because of the caliber of Tower’s data, RMS worked with the insurer in the aftermath of the Christchurch earthquakes as RMS rebuilt its New Zealand High-Definition (HD) Earthquake Model. Prior to the earthquakes, claims data was in very short supply, given that there had been few previous events with large-scale impacts on highly built-up areas.

“On the vulnerability side,” Drayton explains, “we had virtually no local claims data to build our damage functions. Our previous model had used comparisons of building performance in other earthquake-exposed regions. After Christchurch, we suddenly had access to billions of dollars of claims information.”

RMS sourced data from numerous parties, including EQC and Tower, as well as the geoscience research institute GNS Science, as it reconstructed the model from this swell of data.
“RMS had a model that had served the market well for many years,” he explains. “On the hazard side, the fundamentals remained the same: the highest hazard is along the plate boundary, which runs offshore along the east coast of North Island and traverses over to the western edge of South Island. But we had now gathered new information on fault lines, activity rates, magnitudes and subduction zones. We also updated our ground motion prediction equations.”

One of the most high-profile model developments was the advanced liquefaction module. “The 2010-11 earthquakes generated probably the most extensive liquefaction in a built-up area seen in a developed country. With the new information, we were now able to capture the risk at much higher gradients and in much greater resolution,” says Drayton.

This data surge enabled RMS to construct its New Zealand Earthquake HD Model on a variable-resolution grid set at a far more localized level. In turn, this has helped give Tower sufficient confidence in the granularity and accuracy of its data at the property level to adopt risk-based pricing.

The Ripple Effects

As homeowners received their renewal notices, the reality of risk-based pricing started to sink in. Tower is the third-largest insurer for domestic household, contents and private motor cover in New Zealand and faces stiff competition. Over 70 percent of the market is in the hands of two players, with IAG holding around 47 percent and Suncorp approximately 25 percent.

News reports also suggested movement from the larger players. AMI and State, both owned by IAG, announced that three-quarters of their policyholders (those at heightened risk of earthquake, landslide or flood) will see an average annual premium increase of NZ$91 (US$60), while the remaining quarter at lower risk will see decreases averaging NZ$54 per year. A handful of households could see increases or decreases of up to NZ$1,000.
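The AMI and State averages can be combined into a rough figure across all of their policyholders. This is a back-of-the-envelope sketch, not IAG’s own calculation: it assumes each group’s reported average applies uniformly to that group, and it ignores the spread of individual changes (up to NZ$1,000 either way) and any differences in policy mix.

```python
# Reported AMI/State averages: (share of policyholders, average change in NZ$/year).
groups = {
    "heightened risk": (0.75, 91.0),
    "lower risk": (0.25, -54.0),
}

# Weighted mean change, assuming each group's average applies to the whole group.
mean_change = sum(share * change for share, change in groups.values())
print(f"average change across all policyholders: NZ${mean_change:.2f} per year")
```

On these figures alone, and under that simplifying assumption, the changes are not revenue-neutral: 0.75 × 91 − 0.25 × 54 = 54.75, so the average policy would pay roughly NZ$55 more a year.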
According to the news website Stuff, IAG has not changed premiums for its NZI policyholders; NZI sells house insurance policies through brokers.

“One interesting dynamic is that a small number of start-ups are now entering the market with the same risk-based pricing stance taken by Tower,” Drayton points out. “These are companies with new purpose-built IT systems that are small and nimble and able to target niche sectors.”

“It’s certainly a development to watch closely,” he continues, “as it raises the potential for larger players, if they are not able to respond effectively, being selected against. It will be interesting to see if the rate of these new entrants increases.”

The move from IAG suggests risk-based pricing will extend beyond the earthquake component of cover to flood-related elements. “Flood is not a reinsurance peril for New Zealand, but it is an attritional one,” says Drayton. “Then there is the issue of rising sea levels and the potential for coastal flooding, which is a major cause for concern. So, the risk-based pricing shift is feeding into climate change discussions too.”

A Fundamental Shift

Policyholders in risk-exposed areas such as Wellington, largely shielded from the reality of earthquake risk in recent years, were almost totally unaware of how much higher their premiums should be, given their property exposure. The move to risk-based pricing will change that.

Drayton agrees that recent developments are opening the eyes of homeowners. “There is a growing realization that New Zealand’s insurance market has operated very differently from other insurance markets and that that is now changing.”

One major marketwide development in recent years has been the move from full replacement cover to fixed sums insured in household policies. “This has a lot of people worried they might not be covered,” he explains.
“Whereas before, people simply assumed that in the event of a big loss the insurer would cover it all, now they’re slowly realizing it no longer works like that. This will require a lot of policyholder education and will take time.”

At a more fundamental level, current market dynamics also raise questions about the role of insurance itself, exposing the insurer’s conflicting roles as both a facilitator of risk pooling and a profit-making enterprise. When investment returns outweighed underwriting profit, cross-subsidization did not appear to be a big issue. However, current dynamics have pushed the operating model to focus squarely on underwriting returns, which favors risk-based pricing.

Cross-subsidization is the basis on which EQC is built, but is it fair? For every NZ$100 (US$66) of home or contents fire insurance cover, up to a maximum of NZ$100,000 insured, twenty cents is passed on to the EQC. While the government’s response to risk-based pricing has so far been limited, it is monitoring the situation closely given the broader implications.

Looking globally, in an RMS blog, chief research officer Robert Muir-Wood also raises the question of whether “flat-rated” schemes, like the French cat nat scheme, will survive now that it has become clear how to use risk models to calculate the wide differentials in the underlying cost of the risk. He asks whether “such schemes are established in the name of ‘solidarity’ or ignorance?”

While there is no evidence of it yet, current developments raise the possibility that certain risks could become uninsurable. Increasingly granular data combined with the drive for greater profitability may cause a downward spiral in a market built on a shared burden.

Drayton adds: “Potential uninsurability has more to do with land-use planning and building consent regimes, and insurers shouldn’t be paying the price for poor planning decisions.
Ironically, earthquake loading codes are very sophisticated and have evolved to recognize the fine gradations in earthquake risk provided by localized data. In fact, they are so refined that structural engineers remark that they are too nuanced and need to be simpler. But if you are building in a high-risk area, it’s not just designing for the hazard, it is also managing the potential financial risk.”

He concludes: “The market shifts we are seeing today pose a multitude of questions and few clear answers. However, the only constant running through all these discussions is that they are all data driven.”

Making the Move

Key to understanding the rationale behind the shift to risk-based pricing is understanding the broader economic context of New Zealand, says Tower CEO Richard Harding. “The New Zealand economy is comparatively small,” he explains, “and we face a range of unique climatic and geological risks. If we don’t plan for and mitigate these risks, there is a chance that reinsurers will charge insurers more or restrict cover.

“Before this happens, we need to educate the community, government, councils and regulators, and by moving toward risk-based pricing, we’re putting a signal into the market to drive social change through these organizations.

“These signals will help demonstrate to councils and government that more needs to be done to plan for and mitigate natural disasters and climate change.”

Harding feels that this risk-based pricing shift is a natural market evolution. “When you look at global trends, this is happening around the world. So, given that we face a number of large risks here in New Zealand, in some respects, it’s surprising it hasn’t happened sooner,” he says.

While some parties have raised concerns that there may be a fall in insurance uptake in highly exposed regions, Harding does not believe this will be the case. “For the average home, insurance may be more expensive than it currently is, but it won’t be unattainable,” he states.
Moving forward, he says that Tower is working to extend its risk-based pricing approach beyond the earthquake component of its cover, stating that the firm “is actively pursuing risk-based pricing for flood and other natural perils, and over the long term we would expect other insurers to follow in our footsteps.” In terms of the potential wider implications if this occurs, Harding says that such a development would compel government, councils and other organizations to change how they view risk in their planning processes. “I think it will start to drive customers to consider risk more holistically and take this into account when they build and buy homes,” he concludes.
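Drayton’s warning that larger players risk being ‘selected against’ by risk-based start-ups, and the ‘downward spiral’ feared for a market built on a shared burden, can be illustrated with a deliberately simplified Python sketch. It assumes (none of this comes from the article) that each home’s expected annual loss is known, that a risk-based competitor prices every home at exactly that expected loss, that a flat-rated insurer charges every remaining customer the average expected loss of its book, and that customers simply buy the cheaper policy. The loss figures and the `simulate_selection` helper are hypothetical.

```python
# Deliberately simplified sketch of adverse selection against a flat-rated insurer.
# Hypothetical assumptions: every home's expected annual loss is known, a
# risk-based competitor charges exactly that amount, the flat-rated insurer
# charges the average expected loss of the homes it still insures, and each
# customer buys whichever policy is cheaper (ties stay with the incumbent).

def simulate_selection(expected_losses, max_rounds=10):
    """Return (flat_rate, homes_insured) for each repricing of the flat-rated book."""
    book = list(expected_losses)
    history = []
    for _ in range(max_rounds):
        if not book:
            break
        flat_rate = sum(book) / len(book)
        history.append((round(flat_rate, 2), len(book)))
        # Homes whose risk-based price is below the flat rate switch away.
        remaining = [loss for loss in book if loss >= flat_rate]
        if len(remaining) == len(book):
            break  # nobody left this round, so the book has stabilized
        book = remaining
    return history


homes = [100, 150, 200, 300, 500, 900, 1500]  # hypothetical NZ$ expected losses
for rate, size in simulate_selection(homes):
    print(f"flat rate NZ${rate:>8.2f} charged across {size} homes")
```

With these made-up figures, the flat rate climbs from about NZ$521 across seven homes to NZ$1,500 for the single highest-risk home that remains. Real markets have switching costs, brand loyalty and imperfect risk information, so any real spiral would be slower and less complete than this toy version.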

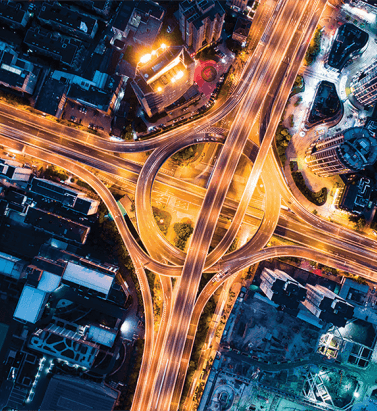

Data Flow in a Digital Ecosystem

There has been much industry focus on the value of digitization at the customer interface, but what is its role in risk management and portfolio optimization?

In recent years, the perceived value of digitization to the insurance industry has been increasingly refined on many fronts. It now serves a clear function in areas such as policy administration, customer interaction, policy distribution and claims processing, delivering tangible, measurable benefits.

However, the potential role of digitization in supporting underwriting, enhancing the risk management process and facilitating portfolio optimization is sometimes less clear. This perhaps reflects the fact that risk assessment is by its very nature a more nebulous task, confined to relatively few employees, which makes the direct benefits of digitization harder to pin down.

To grasp the potential of digitization, we must first acknowledge the limitations of existing platforms and processes, and in particular the lack of joined-up data in a consistent format. But connecting data sets and being able to process analytics is just the start. There also needs to be clarity about the analytics an underwriter requires, including building or extending core business workflows to deliver insights at the point of impact.

Data Limitation

For Louise Day, director of operations at the International Underwriting Association (IUA), a major issue is that much of the data generated across the industry is held remotely from the underwriter. “You have data being keyed in at numerous points and from multiple parties in the underwriting process. However, rather than being stored in a format accessible to the underwriter, it is simply transferred to a repository where it becomes part of a huge data lake with limited ability to stream that data back out.”

That data enters the “lake” via many different systems and in different formats.
These amorphous pools severely limit the potential to extract information in a defined, risk-specific manner, to conduct impactful analytics and to do so in a timeframe relevant to the underwriting decision-making process.

“The underwriter is often disconnected from critical risk data,” says Shaheen Razzaq, senior product director at RMS. “This creates significant challenges when trying to accurately represent coverage, generate or access meaningful analysis of metrics and grasp the marginal impacts of any underwriting decisions on overall portfolio performance.

“Success lies not just in attempting to connect the different data sources together, but to do it in such a way that can generate the right insight within the right context and get this to the underwriter to make smarter decisions.”

Without the digital capabilities to connect the various data sets and deliver information to underwriters in a digestible format, their view of risk can be severely restricted, particularly as server storage limits often mean their data access extends only to current information. Many businesses find themselves suffering from DRIP (being data rich but information poor), unable to transform their data into valuable insight.

“You need to be able to understand risk in its fullest context,” Razzaq says. “What is the precise location of the risk? What policy history information do we have? How has the risk performed? How have the modeled numbers changed? What other data sources can I tap? What are the wider portfolio implications of binding it? How will it impact my concentration risk? How can I test different contract structures to ensure the client has adequate cover but is still profitable business for me?
These are all questions they need answers to in real time at the decision-making point, but often that’s simply not possible.”

When this lack of data granularity is extrapolated up to the portfolio level and beyond, the consequences of poor risk management at the point of underwriting can be extreme. With a high-resolution peril such as U.S. flood, where two properties a few meters apart can have very different risk profiles, the lack of granular data at the point of impact restricts the ability to make accurate risk decisions. Roll that degree of inaccuracy up to the line-of-business and portfolio levels, and the ramifications are significant.

Looking beyond the organization to the wider flow of data through the underwriting ecosystem, the lack of format consistency is creating a major data blockage, according to Jamie Garratt, head of innovation at Talbot. “You are talking about trying to transfer data which is often not in any consistent format along a value chain that contains a huge number of different systems and counterparties,” he explains. “And the inability to quickly and inexpensively convert that data into a format that enables that flow is prohibitive to progress.

“You are looking at the formatting of policies, schedules and risk information, which is being passed through a number of counterparties all operating different systems. It then needs to integrate into pricing models, policy administration systems, exposure management systems, payment systems, et cetera. And when you consider this process replicated across a subscription market, the inefficiencies are extensive.”

A Functioning Ecosystem

There are numerous examples of sectors that have successfully transitioned to a digitized data ecosystem and from which the insurance industry can learn. One such industry is health care, which over the last decade has successfully adopted digital processes across the value chain and overcome the data formatting challenge.
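Garratt’s point about converting data into a consistent format, and the way health care is said to have overcome the data formatting challenge, can be sketched as a simple mapping layer. In this hypothetical Python example, two counterparties send the same kind of location record with different field names and units, and each is converted into one shared `RiskRecord` format before it moves downstream. The field names, source names and target schema are illustrative assumptions, not an industry standard.

```python
# Minimal sketch of mapping risk records from different counterparties onto one
# agreed format before they flow into pricing or exposure systems.
# All field names, source names and the target schema are hypothetical.

from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class RiskRecord:
    """Hypothetical common format that every counterparty's data is converted into."""
    location_id: str
    latitude: float
    longitude: float
    sum_insured: float  # in units of `currency`
    currency: str


# Per-source field mappings: common field name -> source field name.
FIELD_MAPS = {
    "broker_a": {"location_id": "LocRef", "latitude": "Lat", "longitude": "Lon",
                 "sum_insured": "TIV", "currency": "Ccy"},
    "broker_b": {"location_id": "site", "latitude": "y", "longitude": "x",
                 "sum_insured": "tiv_000s", "currency": "curr"},
}
# broker_b reports insured values in thousands.
VALUE_MULTIPLIERS = {"broker_a": 1.0, "broker_b": 1000.0}


def normalize(source: str, raw: dict) -> RiskRecord:
    """Convert one raw record from `source` into the common RiskRecord format."""
    fields = FIELD_MAPS[source]
    return RiskRecord(
        location_id=str(raw[fields["location_id"]]),
        latitude=float(raw[fields["latitude"]]),
        longitude=float(raw[fields["longitude"]]),
        sum_insured=float(raw[fields["sum_insured"]]) * VALUE_MULTIPLIERS[source],
        currency=str(raw[fields["currency"]]).upper(),
    )


a = normalize("broker_a", {"LocRef": "A-17", "Lat": "29.76", "Lon": "-95.37",
                           "TIV": "2500000", "Ccy": "usd"})
b = normalize("broker_b", {"site": 904, "y": 29.761, "x": -95.369,
                           "tiv_000s": 1800, "curr": "USD"})
print(asdict(a))
print(asdict(b))
```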
It can be argued that health care has a value chain similar to that of the insurance industry. Data is shared among various stakeholders, including competitors, to create the analytical backbone the sector needs to function effectively. Data is retained and shared at the level of the individual patient, combining multiple health perspectives to build a holistic view of that patient. The sector has also overcome the data-consistency hurdle by collectively agreeing on a data standard, enabling the effective flow of information across all parties in the chain, from health care facilities through to the services companies that support them.

Garratt draws attention to the way the broader financial markets function. “There are numerous parallels that can be drawn between the financial and the insurance markets, and much that we can learn from how that industry has evolved over the last 10 to 20 years.”

“As the capital markets become an increasingly prevalent part of the insurance sector,” he continues, “this will inevitably have a bearing on how we approach data and the need for greater digitization. If you look, for example, at the advances that have been made in how risk is transferred on the insurance-linked securities (ILS) front, what we now have is a fairly homogenous financial product where the potential for data exchange is more straightforward and transaction costs and speed have been greatly reduced.

“It is true that pure reinsurance transactions are more complex given the nature of the market, but there are lessons that can be learned to improve transaction execution and the binding of risks.”

For Razzaq, it’s also about rebalancing the data extraction versus data analysis equation. “By removing data silos and creating straight-through access to detailed, relevant, real-time data, you shift this equation on its axis.
At present, some 70 to 80 percent of analysts’ time is spent sourcing data and converting it into a consistent format, with only 20 to 30 percent spent on the critical data analysis. An effective digital infrastructure can switch that equation around, greatly reducing the steps involved and re-establishing analytics as the core function of the analytics team.”

The Analytical Backbone

So how does this concept of a functioning digital ecosystem map to the (re)insurance environment? The challenge, of course, is not only to create joined-up, real-time data processes at the organizational level, but also to look at how that unified infrastructure can extend outward to support improved data interaction at the industry level.

An ideal digital scenario from a risk management perspective is one in which all parties operate on a single analytical framework or backbone built on the same rules, with the same data and using the same financial calculation engines, ensuring an ‘apples-to-apples’ comparison on all risk fronts. That consistent approach would need to extend from the individual risk decision, to the portfolio, to the line of business, right up to the enterprise-wide level.

In the underwriting trenches, it is about improving the decision-making process and understanding the portfolio-level implications of those decisions. “A modern pricing and portfolio risk evaluation framework can reduce assessment times, providing direct access to relevant internal and external data in almost real time,” says Ben Canagaretna, managing director at Barbican Insurance Group.
“Creating a data flow, designed specifically to support agile decision-making, allows underwriters to price complex business in a much shorter time period.”

“The feedback loop around decisions surrounding overall reinsurance costs and investor capital exposure is paramount in order to maximize returns on capital for shareholders that are commensurate with risk appetite. At the heart of this is the portfolio marginal impact analysis: the ability to assess the impact of each risk on the overall portfolio in terms of exceedance probability curves, realistic disaster scenarios and regional exposures. Integrated historical loss information is a must in order to quickly assess the profitability of relevant brokers, trade groups and specific policies.”

There is, of course, the risk of data overload in such an environment, with multiple information streams threatening to swamp the process if not channeled effectively. “It’s about giving the underwriter much better visibility of the risk,” says Garratt, “but to do that the information must be filtered precisely to ensure that the most relevant data is prioritized, so it can then inform underwriters about a specific risk or feed directly into pricing models.”

Making the Transition

Every organization in today’s (re)insurance market can perceive at least a marginal benefit from integrating digital capabilities into its current underwriting processes. And for those that have already started down that route, tangible benefits are emerging. Yet making the transition, particularly given the clear scale of the challenge, is daunting.

“You can’t simply unplug all of your legacy systems and reconnect a new digital infrastructure,” says IUA’s Day. “You have to find a way of integrating current processes into a data ecosystem in a manageable and controlled manner.
From a data-gathering perspective, that process could start with adopting a standard electronic template to collect quote data and storing that data in a way that can be easily accessed and transferred.”

“There are tangible short-term benefits of making the transition,” adds Razzaq. “Starting small and focusing on certain entities within the group. Only transferring certain use cases and not all at once. Taking a steady step approach rather than simply acknowledging the benefits but being overwhelmed by the potential scale of the challenge.”

There is no doubt, however, that the task is significant, particularly when it comes to integrating multiple data types into a single format. “We recognize that companies have source-data repositories and legacy systems,” Razzaq says, “and the initial aim is not to ‘rip and replace’ those, but rather to create a path to a system that allows all of these data sets to move. For RMS, we have the ability to connect these various data hubs via open APIs to our Risk Intelligence platform to create that information superhighway, with an analytics layer that can turn this data into actionable insights.”

Talbot has ventured further down this path than many other organizations, and its pioneering spirit is already bearing fruit. “We have looked at those areas,” explains Garratt, “where we believe it is more likely we can secure short-term benefits that demonstrate the value of our longer-term strategy. For example, we recently conducted a proof of concept using quite powerful natural-language processing supported by machine-learning capabilities to extract and then analyze historic data in the marine space, and already we are generating some really valuable insights.

“I don’t think the transition is reliant on having a clear idea of what the end state is going to look like, but rather taking those initial steps that start moving you in a particular direction.
There also has to be an acceptance of the need to fail early and learn fast, which is hard to grasp in a risk-averse industry. Some initiatives will fail — you have to recognize that and be ready to pivot and move in a different direction if they do.”
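The portfolio marginal impact analysis Canagaretna describes, assessing each risk against the portfolio’s exceedance probability curve, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular platform does it: losses are represented as hypothetical year loss tables (one simulated annual loss per year, on the same simulated years for the existing portfolio and the candidate risk), the metric is the empirical aggregate loss at a chosen return period, and all figures are random placeholders. Realistic disaster scenarios and regional exposure checks are left out.

```python
# Minimal sketch of portfolio marginal impact on an aggregate exceedance
# probability (EP) curve. Hypothetical assumptions: year loss tables with one
# simulated annual loss per year, aligned across portfolio and candidate, and
# randomly generated placeholder losses.

import random


def loss_at_return_period(annual_losses, return_period):
    """Empirical annual loss exceeded on average once every `return_period` years."""
    ranked = sorted(annual_losses, reverse=True)
    k = len(ranked) // return_period  # years expected at or above this level
    if k < 1:
        raise ValueError("not enough simulated years for this return period")
    return ranked[k - 1]


def marginal_impact(portfolio, candidate, return_period):
    """Change in the return-period loss if the candidate risk is bound."""
    if len(portfolio) != len(candidate):
        raise ValueError("year loss tables must cover the same simulated years")
    combined = [p + c for p, c in zip(portfolio, candidate)]
    return (loss_at_return_period(combined, return_period)
            - loss_at_return_period(portfolio, return_period))


rng = random.Random(42)
years = 10_000
# A shared "severity" driver correlates the candidate with the existing book.
severity = [rng.paretovariate(2.5) for _ in range(years)]
portfolio = [s * 1e6 * rng.uniform(0.8, 1.2) for s in severity]
candidate = [s * 5e4 * rng.uniform(0.5, 1.5) for s in severity]

for rp in (10, 100, 250):
    impact = marginal_impact(portfolio, candidate, rp)
    print(f"1-in-{rp}-year marginal impact: {impact:,.0f}")
```

Because the candidate shares a common severity driver with the existing book here, its contribution tends to grow at longer return periods; swapping in an independent loss series is a quick way to see how correlation changes the answer.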

The Future of (Re)Insurance: Evolution of the Insurer DNA

The (re)insurance industry is at a tipping point. Rapid technological change, disruption through new, more efficient forms of capital and an evolving risk landscape are challenging industry incumbents like never before. Inevitably, as EXPOSURE reports, the winners will be those who find ways to harmonize analytics, technology, industry innovation and modeling.

There is much talk of disruptive innovation in the insurance industry. In personal lines insurance, disintermediation, the rise of aggregator websites and the Internet of Things (IoT), such as connected car, home and wearable devices, promise to transform traditional products and services. In the commercial insurance and reinsurance space, disruptive technological change has been less obvious, but behind the scenes the industry is undergoing some fundamental changes.

The Tipping Point

The ‘Uber’ moment has yet to arrive in reinsurance, according to Michael Steel, global head of business development at RMS. “The change we’re seeing in the industry is constant. We’re seeing disruption throughout the entire insurance journey. It’s not the case that the industry is suffering from a short-term correction and then the market will go back to the way it has done business previously. The industry is under huge competitive pressures and the change we’re seeing is permanent and it will be continuous over time.”

Experts feel the industry is now at a tipping point. Huge competitive pressures, rising expense ratios, an evolving risk landscape and rapid technological advances are forcing change upon an industry that has traditionally been considered something of a laggard. And the revolution, when it comes, will be a quick one, thinks Rupert Swallow, co-founder and CEO of Capsicum Re.

Other sectors have plenty of cautionary tales about what happens when businesses fail to adapt to a changing world, he explains.
“Kodak was a business that in 1998 had 120,000 employees and printed 95 percent of the world’s photographs. Two years later, that company was bankrupt as digital cameras built their presence in the marketplace. When the tipping point is reached, the change is radical and fast and fundamental.”

While it is impossible to predict exactly how the industry will evolve, it is clear that tomorrow’s leading (re)insurance companies will share certain attributes. These include a strong appetite to harness data and invest in new technology and analytics capabilities, the drive to differentiate and design new products and services, and the ability to collaborate.

In particular, the goal of an analytics-driven organization is to leverage the right technologies to bring data, workflow and business analytics together to continuously drive more informed, timely and collaborative decision-making across the enterprise.

And while the rise of insurtech firms offers many choices, history shows that success is achieved only when proper due diligence is done to understand and assess how these technologies enable the longer-term business strategy, goals and objectives. One of the most important ingredients of success is the ability to assemble the right blend of technologists, data scientists and domain experts who can work together to understand and deliver on these key objectives.

The most successful companies will also look to attract and retain the best talent, with succession planning that puts a strong emphasis on bringing Millennials up through the ranks. “There is a huge difference between the way Millennials look at the workplace and live their lives, versus industry professionals born in the 1960s or 1970s – the two generations are completely different,” says Swallow.
“Those guys [Millennials] would no sooner write a cheque to pay for something than fly to the moon.”

Case for Collaboration

If (re)insurers drag their heels in embracing and investing in new technology and analytics capabilities, disruption could well come from outside the industry. Back in 2015, Lloyd’s CEO Inga Beale warned that insurers were in danger of being “Uber-ized” as technology allowed companies from Google to Walmart to undermine the sector’s role in managing risk.

Her concerns are well founded, with Google launching a price comparison site in the U.S., and Rakuten and Alibaba, Japan’s and China’s answers to Amazon respectively, selling a range of insurance products on their platforms.

“No area of the market is off-limits to well-organized technology companies that are increasingly encroaching everywhere,” says Rob Procter, CEO of Securis Investment Partners. “Why wouldn’t Google write insurance… particularly given what they are doing with autonomous vehicles? They may not be insurance experts but these technology firms are driving the advances in terms of volumes of data, data manipulation, and speed of data processing.”

Procter makes the point that the reinsurance industry has already been disrupted by the influx of third-party capital into the ILS space over the past 10 to 15 years. Collateralized products such as catastrophe bonds, sidecars and non-traditional reinsurance have fundamentally altered the reinsurance cycle and exposed the industry’s inefficiencies like never before.

“We’ve been innovators in this industry because we came in ten or 15 years ago, and we’ve changed the way the industry is structured and is capitalized and how the capital connects with the customer,” he says. “But more change is required to bring down expenses and to take out what are massive friction costs, which in turn will allow reinsurance solutions to be priced competitively in situations where they are not currently.
“It’s astounding that 70 percent of the world’s catastrophe losses are still uninsured,” he adds. “That statistic has remained unchanged for the last 20 years. If this industry was more efficient it would be able to deliver solutions that work to close that gap.”

Collaboration is the key to leveraging technology (or insurtech) expertise and getting closer to the original risk. There are numerous examples of tie-ups between (re)insurance industry incumbents and tech firms. Other incumbents have set up innovation garages or bought their way into innovation, acquiring or backing niche start-up firms. Silicon Valley, Israel’s Silicon Wadi, India’s tech capital Bangalore and Shanghai in China are now among the favored destinations for scouting visits by insurance chief innovation officers.

One example of a strategic collaboration is the MGA Attune, set up last year by AIG, Hamilton Insurance Group and affiliates of Two Sigma Investments. Through the partnership, AIG gained access to Two Sigma’s vast technology and data-science capabilities to grow its market share in the U.S. small- to mid-sized commercial insurance space.

“The challenge for the industry is to remain relevant to our customers,” says Steel. “Those that fail to adapt will get left behind. To succeed you’re going to need greater information about the underlying risk, the ability to package the risk in a different way, to select the appropriate risks, differentiate more, and construct better portfolios.”

Investment in technology is not in and of itself the solution, believes Swallow, who thinks there has been too much focus on process and not enough on product design. “Insurtech is an amazing opportunity but a lot of people seem to spend time looking at the fulfilment of the product – what ‘Chily’ [Swallow’s business partner and industry guru Grahame Chilton] would call ‘plumbing’.
“In our industry, there is still so much attention on the ‘plumbing’ and the fact that the plumbing doesn’t work, that insurtech isn’t yet really focused on compliance, regulation of product, which is where all the real gains can be found, just as they have been in the capital markets,” adds Swallow.

Taking out the Friction

Blockchain, however, is “plumbing on steroids,” says Swallow. “Blockchain is nothing but pure, unadulterated disintermediation. My understanding is that if certain events happen at the beginning of the chain, then there is a defined outcome that actually happens without any human intervention at the other end of the chain.”

In January, Aegon, Allianz, Munich Re, Swiss Re and Zurich launched the Blockchain Insurance Industry Initiative, a “US$5 billion opportunity” according to PwC. The initiative’s feasibility study will explore the potential of distributed ledger technologies to better serve clients through faster, more convenient and secure services.

Blockchain offers huge potential to reduce some of the significant administrative burdens in the industry, believes Kurt Karl, chief economist at Swiss Re. “Blockchain for the reinsurance space is an efficiency tool. And if we all get more efficient, you are able to increase insurability because your prices come down, and you can have more affordable reinsurance and therefore more affordable insurance. So I think we all win if it’s a cost saving for the industry.”

Collaboration will enable those with scale to behave like nimble start-ups, explains Karl. “We like scale. We’re large. I’ll be blunt about that,” he says. “For the reinsurance space, what we do is to leverage our size to differentiate ourselves.
With size, we’re able to invest in all these new technologies and then understand them well enough to have a dialogue with our clients. The nimbleness doesn’t come from small insurers; the nimbleness comes from insurance tech start-ups.”

He gives the example of Lemonade, the peer-to-peer start-up insurer that launched in 2016, selling discounted homeowners’ insurance in New York. Working on the premise that insurance customers lack trust in the industry, Lemonade has built its business model around returning premium to customers when claims are not made. In its second round of capital raising, Lemonade secured funding from XL Group’s venture fund; XL is also one of the start-up’s reinsurance partners. The firm is also able to offer faster, more efficient claims processing.

“Lemonade’s [business model] is all about efficiency and the cost saving,” says Karl. “But it’s also clearly of benefit to the client, which is a lot more appealing than a long, drawn-out claims process.”

Tearing up the Rule Book

By collecting and utilizing data from customers and third parties, personal lines insurers are now able to offer more customized products and, in many circumstances, improve the underlying risk. Customers can win discounts for protecting their homes and other assets, maintaining a healthy lifestyle and driving safely. In a world where products are increasingly designed with the digital native in mind, drivers can pay as they go and property owners can access cheaper home insurance via peer-to-peer models.

Reinsurers may be one step removed from this seismic shift in how the original risk is perceived and underwritten, but just as personal lines insurers are tearing up the rule book, so too are their risk partners.

It is over 300 years since the first marine and fire insurance policies were written. In that time (re)insurance has expanded significantly with a range of property, casualty and specialty products.
However, the wordings contained in standard (re)insurance policies, the involvement of a broker in placing the business and the face-to-face transactional nature of the business (particularly within the London market) have not altered significantly over the past three centuries.

Some are questioning whether these traditional indemnity products are the right solution for all classes of risk. “We think people are often insuring cyber against the wrong things,” says Dane Douetil, group CEO of Minova Insurance. “They probably buy too much cover in some places and not nearly enough in areas where they don’t really understand they’ve got a risk. So we’re starting from the other way around, which is actually providing analysis about where their risks are and then creating the policy to cover it.”

“There has been more innovation in intangible type risks, far more in the last five to ten years than probably people give credit for. Whether you’re talking about cyber, product recall, new forms of business interruption, intellectual property or the huge growth in mergers and acquisition coverages against warranty and indemnity claims – there’s been a lot of development in all of those areas and none of that existed ten years ago.”

Closing the Gap

Access to new data sources, along with the ability to interpret and utilize that information, will be key to improving the speed of settlement and offering products that are fit for purpose and reflect today’s risk landscape.

“We’ve been working on a product that just takes all the information available from airlines, about delays and how often they happen,” says Karl.
“And of course you can price off that; you don’t need the loss history, all you need is the probability of the loss, how often does the plane have a five-hour delay?”

“All the travel underwriters then need to do is price it ‘X’, and have a little margin built-in, and then they’re able to offer a nice new product to consumers who get some compensation for the frustration of sitting there on the tarmac.”

With more esoteric lines of business such as cyber, parametric products could be one solution to providing meaningful coverage for a rapidly evolving corporate risk. “The corporates of course want indemnity protection, but that’s extremely difficult to do,” says Karl. “I think there will be some of that but also some parametric, because it’s often a fixed payout that’s capped and is dependent upon the metric, as opposed to indemnity, which could well end up being the full value of the company. Because you can potentially have a company destroyed by a cyber-attack at this point.”

One issue to overcome with parametric products is basis risk: the risk that an insured suffers a significant loss of income but its cover is not triggered. However, as data and risk management improve, concerns about basis risk should diminish.

Improving the Underlying Risk

The evolution of the cyber (re)insurance market also points to a new opportunity in a data-rich age: pre-loss services. By tapping into a wealth of claims and third-party data sources, successful (re)insurers of the future will be in an even stronger position to help their insureds become resilient and incident-ready. In cyber, these services are already part of the package and include security consultancy, breach-response services and simulated cyber-attacks to test the fortitude of corporate networks and raise awareness among staff.
IoT is not just an instrument for personal lines. Insurance companies are utilizing data collected from connected devices to analyze individual risks and feed information back to improve the risk. In the same way, (re)insurers also have an opportunity to utilize third-party data. “GPS sensors on containers can allow insurers to monitor cargo as it flows around the world; there is a use for this technology to help mitigate and manage the risk on the front end of the business,” states Steel.

Information is only powerful if it is analyzed effectively and available in real time as transactional and pricing decisions are made, thinks RMS’ Steel. “The industry is getting better at using analytics and ensuring the output of analytics is fed directly into the hands of key business decision-makers.”

“It’s about using things like portfolio optimization, which even ten years ago would have been difficult,” he adds. “As you’re using the technologies that are available now you’re creating more efficient capital structures and better, more efficient business models.”

Minova’s Douetil thinks the industry is stepping up to the plate. “Insurance is effectively the oil that lubricates the economy,” he says. “Without insurance, as we saw with the World Trade Center disaster and other catastrophes, the whole economy could come to a grinding halt pretty quickly if you take the ‘oil’ away.”

“That oil has to continually adapt and be innovative in terms of being able to serve the wider economy,” he continues. “But I think we do a disservice to our industry by saying that we’re not innovators, that we’re stuck in the past. I just think about how much this business has changed over the years.”

“It can change more, without a doubt, and there is no doubt that the communication capabilities that we have now mean there will be a shortening of the distribution chain,” he adds. “That’s already happening quite dramatically and in the personal lines market, obviously even more rapidly.”