Tag: Issue 5

In Total Harmony

September 05, 2018

Karen White joined RMS as CEO in March 2018, followed closely by Moe Khosravy, general manager of software and platform activities. EXPOSURE talks to both, along with Mohsen Rahnama, chief risk modeling officer and one of the firm’s most long-standing team members, about their collective vision for the company, innovation, transformation and technology in risk management.

Karen and Moe, what was it that sparked your interest in joining RMS?

Karen: What initially got me excited was the strength of the hand we have to play here and the fact that the insurance sector is at a very interesting time in its evolution. The team is fantastic — one of the most extraordinary groups of talent I have come across. At our core, we have hundreds of Ph.D.s, superb modelers and scientists, surrounded by top engineers, and computer and data scientists. I firmly believe no other modeling firm holds a candle to the quality of leadership and depth and breadth of intellectual property at RMS. We are years ahead of our competitors in terms of the products we deliver.

Moe: For me, what can I say? When Karen calls with an idea it’s very hard to say no! However, when she called about the RMS opportunity, I hadn’t ever considered working in the insurance sector. My eureka moment came when I looked at the industry’s challenges and the technology available to tackle them. I realized that this wasn’t simply a cat modeling property insurance play, but was much more expansive. If you generalize the notion of risk and loss, the potential of what we are working on and the value to the insurance sector becomes much greater. I thought about the technologies entering the sector and how new developments on the AI [artificial intelligence] and machine learning front could vastly expand current analytical capabilities. I also began to consider how such technologies could transform the sector’s cost base. In the end, the decision to join RMS was pretty straightforward.
“Developments such as AI and machine learning are not fairy dust to sprinkle on the industry’s problems”
Karen White, CEO, RMS

Karen: The industry itself is reaching a eureka moment, which is precisely where I love to be. It is at a transformational tipping point — the technology is available to enable this transformation and the industry is compelled to undertake it. I’ve always sought to enter markets at this critical point. When I joined Oracle in the 1990s, the business world was at a transformational point — moving from client-server computing to Internet computing. This has brought about many of the huge changes we have seen in business infrastructure since, so I had a bird’s-eye view of what was a truly extraordinary market shift coupled with a technology shift. That experience made me realize how an architectural shift coupled with a market shift can create immense forward momentum. If the technology can’t support the vision, or if the challenges or opportunities aren’t compelling enough, then you won’t see that level of change occur.

Do (re)insurers recognize the need to change and are they willing to make the digital transition required?

Karen: I absolutely think so. There are incredible market pressures to become more efficient, assess risks more effectively, improve loss ratios, achieve better business outcomes and introduce more beneficial ways of capitalizing risk. You also have numerous new opportunities emerging. New perils, new products and new ways of delivering those products that have huge potential to fuel growth. These can be accelerated not just by market dynamics but also by a smart embrace of new technologies and digital transformation.

Mohsen: Twenty-five years ago when we began building models at RMS, practitioners simply had no effective means of assessing risk. So, the adoption of model technology was a relatively simple step.
Today, the extreme levels of competition are making the ability to differentiate risk at a much more granular level a critical factor, and our model advances are enabling that. In tandem, many of the Silicon Valley technologies have the potential to greatly enhance efficiency, improve processing power, minimize cost, boost speed to market, enable the development of new products, and positively impact every part of the insurance workflow.

Data is the primary asset of our industry — it is the source of every risk decision, and every risk is itself an opportunity. The amount of data is increasing exponentially, and we can now capture more information much faster than ever before, and analyze it with much greater accuracy to enable better decisions. It is clear that the potential is there to change our industry in a positive way.

The industry is renowned for being risk averse. Is it ready to adopt the new technologies that this transformation requires?

Karen: The risk of doing nothing given current market and technology developments is far greater than that of embracing emerging tech to enable new opportunities and improve cost structures, even though there are bound to be some bumps in the road. I understand the change management can be daunting. But many of the technologies RMS is leveraging to help clients improve price performance and model execution are not new. AI, the Cloud and machine learning are already tried and trusted, and the insurance market will benefit from the lessons other industries have learned as it integrates these technologies.

“The sector is not yet attracting the kind of talent that is attracted to firms such as Google, Microsoft or Amazon — and it needs to”
Moe Khosravy, EVP, Software and Platform, RMS

Moe: Making the necessary changes will challenge the perceived risk-averse nature of the insurance market as it will require new ground to be broken.
However, if we can clearly show how these capabilities can help companies be measurably more productive and achieve demonstrable business gains, then the market will be more receptive to new user experiences.

Mohsen: The performance gains that technology is introducing are immense. A few years ago, we were using computational fluid dynamics to model storm surge. We were conducting the analysis through CPU [central processing unit] microprocessors, which was taking weeks. With the advent of GPU [graphics processing unit] microprocessors, we can carry out the same level of analysis in hours. When you add the supercomputing capabilities possible in the Cloud, which has enabled us to deliver HD-resolution models to our clients — in particular for flood, which requires a high-gradient hazard model to differentiate risk effectively — it has enhanced productivity significantly and in tandem price performance.

Is an industry used to incremental change able to accept the stepwise change technology can introduce?

Karen: Radical change often happens in increments. The change from client-server to Internet computing did not happen overnight, but was an incremental change that came in waves and enabled powerful market shifts.

Amazon is a good example of market leadership out of digital transformation. It launched in 1994 as an online bookstore in a mature, relatively sleepy industry. It evolved into broad e-commerce and again with the introduction of Cloud services when it launched AWS [Amazon Web Services] 12 years ago — now a US$17 billion business that has disrupted the computer industry and is a huge portion of its profit. Amazon has total revenue of US$178 billion from nothing over 25 years, having disrupted the retail sector. Retail consumption has changed dramatically, but I can still go shopping on London’s Oxford Street and about 90 percent of retail is still offline.
My point is, things do change incrementally but standing still is not a great option when technology-fueled market dynamics are underway. Getting out in front can be enormously rewarding and create new leadership. However, we must recognize that how we introduce technology must be driven by the challenges it is being introduced to address. I am already hearing people talk about developments such as AI, machine learning and neural networks as if they are fairy dust to sprinkle on the industry’s problems. That is not how this transformation process works.

How are you approaching the challenges that this transformation poses?

Karen: At RMS, we start by understanding the challenges and opportunities from our customers’ perspectives and then look at what value we can bring that we have not brought before. Only then can we look at how we deliver the required solution.

Moe: It’s about having an “outward-in” perspective. We have amazing technology expertise across modeling, computer science and data science, but to deploy that effectively we must listen to what the market wants. We know that many companies are operating multiple disparate systems within their networks that have simply been built upon again and again. So, we must look at harnessing technology to change that, because where you have islands of data, applications and analysis, you lose fidelity, time and insight and costs rise.

Moe: While there is a commonality of purpose spanning insurers, reinsurers and brokers, every organization is different. At RMS, we must incorporate that into our software and our platforms. There is no one-size-fits-all and we can’t force everyone to go down the same analytical path. That’s why we are adopting a more modular approach in terms of our software. Whether the focus is portfolio management or underwriting decision-making, it’s about choosing those modules that best meet your needs.
“Data is the primary asset of our industry — it is the source of every risk decision, and every risk is itself an opportunity”
Mohsen Rahnama, PhD, Chief Risk Modeling Officer, RMS

Mohsen: When constructing models, we focus on how we can bring the right technology to solve the specific problems our clients have. This requires a huge amount of critical thinking to bring the best solution to market.

How strong is the talent base that is helping to deliver this level of capability?

Mohsen: RMS is extremely fortunate to have such a fantastic array of talent. This caliber of expertise is what helps set us apart from competitors, enabling us to push boundaries and advance our modeling capabilities at the speed we are. Recently, we have set up teams of modelers and data and computer scientists tasked with developing a range of innovations. It’s fantastic having this depth of talent, and when you create an environment in which innovative minds can thrive you quickly reap the rewards — and that is what we are seeing. In fact, I have seen more innovation at RMS in the last six months than over the past several years.

Moe: I would add though that the sector is not yet attracting the kind of talent seen at firms such as Google, Microsoft or Amazon, and it needs to. These companies are either large-scale customer-service providers capitalizing on big data platforms and leading-edge machine-learning techniques to achieve the scale, simplicity and flexibility their customers demand, or enterprises actually building these core platforms themselves. When you bring new blood into an organization or industry, you generate new ideas that challenge current thinking and practices, from the user interface to the underlying platform or the cost of performance. We need to do a better PR job as a technology sector. The best and brightest people in most cases just want the greatest problems to tackle — and we have a ton of those in our industry.
Karen: The critical component of any successful team is a balance of complementary skills and capabilities focused on having a high impact on an interesting set of challenges. If you get that dynamic right, then that combination of different lenses correctly aligned brings real clarity to what you are trying to achieve and how to achieve it. I firmly believe at RMS we have that balance. If you look at the skills, experience and backgrounds of Moe, Mohsen and myself, for example, they couldn’t be more different. Bringing Moe and Mohsen together, however, has quickly sparked great and different thinking. They work incredibly well together despite their vastly different technical focus and career paths. In fact, we refer to them as the “Moe-Moes” and made them matching inscribed giant chain necklaces and presented them at an all-hands meeting recently.

Moe: Some of the ideas we generate during our discussions and with other members of the modeling team are incredibly powerful. What’s possible here at RMS we would never have been able to even consider before we started working together.

Mohsen: Moe’s vast experience of building platforms at companies such as HP, Intel and Microsoft is a great addition to our capabilities. Karen brings a history of innovation and building market platforms with the discipline and the focus we need to deliver on the vision we are creating. If you look at the huge amount we have been able to achieve in the months that she has been at RMS, that is a testament to the clear direction we now have.

Karen: While we do come from very different backgrounds, we share a very well-defined culture. We care deeply about our clients and their needs. We challenge ourselves every day to innovate to meet those needs, while at the same time maintaining a hell-bent pragmatism to ensure we deliver.

Mohsen: To achieve what we have set out to achieve requires harmony.
It requires a clear vision, the scientific know-how, the drive to learn more, the ability to innovate and the technology to deliver — all working in harmony.

Career Highlights

Karen White is an accomplished leader in the technology industry, with a 25-year track record of leading, innovating and scaling global technology businesses. She started her career in Silicon Valley in 1993 as a senior executive at Oracle. Most recently, Karen was president and COO at Addepar, a leading fintech company serving the investment management industry with data and analytics solutions.

Moe Khosravy has over 20 years of software innovation experience delivering enterprise-grade products and platforms differentiated by data science, powerful analytics and applied machine learning to help transform industries. Most recently he was vice president of software at HP Inc., supporting hundreds of millions of connected devices and clients.

Mohsen Rahnama leads a global team of accomplished scientists, engineers and product managers responsible for the development and delivery of all RMS catastrophe models and data. During his 20 years at RMS, he has been a dedicated, hands-on leader of the largest team of catastrophe modeling professionals in the industry.

IFRS17: Under the Microscope

September 05, 2018

How new accounting standards could reduce demand for reinsurance as cedants are forced to look more closely at underperforming books of business

They may not be coming into effect until January 1, 2021, but the new IFRS 17 accounting standards are already shaking up the insurance industry. And they are expected to have an impact on the January 1, 2019, renewals as insurers ready themselves for the new regime.

Crucially, IFRS 17 will require insurers to recognize immediately the full loss on any unprofitable insurance business. “The standard states that reinsurance contracts must now be valued and accounted for separate to the underlying contracts, meaning that traditional ‘netting down’ (gross less reinsured) and approximate methods used for these calculations may no longer be valid,” explained PwC partner Alex Bertolotti in a blog post.

“Even an individual reinsurance contract could be material in the context of the overall balance sheet, and so have the potential to create a significant mismatch between the value placed on reinsurance and the value placed on the underlying risks,” he continued.

“This problem is not just an accounting issue, and could have significant strategic and operational implications as well as an impact on the transfer of risk, on tax, on capital and on Solvency II for European operations.”

In fact, the requirements under IFRS 17 could lead to a drop in reinsurance purchasing, according to consultancy firm Hymans Robertson, as cedants are forced to question why they are deriving value from reinsurance rather than the underlying business on unprofitable accounts. “This may dampen demand for reinsurance that is used to manage the impact of loss making business,” it warned in a white paper.

Cost of Compliance

The new accounting standards will also be a costly compliance burden for many insurance companies.
Ernst & Young estimates that firms with over US$25 billion in Gross Written Premium (GWP) could be spending over US$150 million preparing for IFRS 17.

Under the new regime, insurers will need to account for their business performance at a more granular level. In order to achieve this, it is important to capture more detailed information on the underlying business at the point of underwriting, explained Corina Sutter, director of government and regulatory affairs at RMS.

This can be achieved by deploying systems and tools that allow insurers to capture, manage and analyze such granular data in increasingly high volumes, she said. “It is key for those systems or tools to be well-integrated into any other critical data repositories, analytics systems and reporting tools.

“From a modeling perspective, analyzing performance at contract level means precisely understanding the risk that is being taken on by insurance firms for each individual account,” continued Sutter. “So, for P&C lines, catastrophe risk modeling may be required at account level. Many firms already do this today in order to better inform their pricing decisions. IFRS 17 is a further push to do so.

“It is key to use tools that not only allow the capture of the present risk, but also the risk associated with the future expected value of a contract,” she added. “Probabilistic modeling provides this capability as it evaluates risk over time.”
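
To make the idea of account-level probabilistic modeling a little more concrete, here is a minimal Python sketch of one common building block: an expected (average) annual loss computed from an event loss table as the sum of event rate times event loss. It is an illustration under stated assumptions, not a description of any RMS product or of IFRS 17 measurement itself; the `EventLoss` fields, the function names and every figure are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EventLoss:
    """One row of a hypothetical event loss table for a single account."""
    annual_rate: float  # assumed expected occurrences of the event per year
    mean_loss: float    # assumed mean loss to the account if the event occurs


def average_annual_loss(events: list[EventLoss]) -> float:
    """Expected loss per year: sum of (annual rate x mean loss) over all events."""
    return sum(e.annual_rate * e.mean_loss for e in events)


def expected_margin(annual_premium: float, events: list[EventLoss]) -> float:
    """Premium minus expected annual loss (ignores expenses and discounting)."""
    return annual_premium - average_annual_loss(events)


# Illustrative numbers only, not taken from the article.
account_events = [
    EventLoss(annual_rate=0.01, mean_loss=2_000_000.0),
    EventLoss(annual_rate=0.002, mean_loss=10_000_000.0),
    EventLoss(annual_rate=0.05, mean_loss=100_000.0),
]

aal = average_annual_loss(account_events)
margin = expected_margin(60_000.0, account_events)
print(f"Expected annual loss: {aal:,.0f}")
print(f"Expected margin on a 60,000 premium: {margin:,.0f}")
```

A negative margin from a calculation of this kind would be one early signal that an account deserves a closer look, though a real assessment would also bring in expenses, discounting and the uncertainty around the expected value.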

A Model Operation

September 05, 2018

EXPOSURE explores the rationale, challenges and benefits of adopting an outsourced model function

Business process outsourcing has become a mainstay of the operational structure of many organizations. In recent years, reflecting new technologies and changing market dynamics, the outsourced function has evolved significantly to fit seamlessly within existing infrastructure.

On the modeling front, the exponential increase in data coupled with the drive to reduce expense ratios while enhancing performance levels is making the outsourced model proposition an increasingly attractive one.

The Business Rationale

The rationale for outsourcing modeling activities spans multiple possible origin points, according to Neetika Kapoor Sehdev, senior manager at RMS.

“Drivers for adopting an outsourced modeling strategy vary significantly depending on the company itself and their specific ambitions. It may be a new startup that has no internal modeling capabilities, with outsourcing providing access to every component of the model function from day one.”

There is also the flexibility that such access provides, as Piyush Zutshi, director of RMS Analytical Services, points out.

“In those initial years, companies often require the flexibility of an outsourced modeling capability, as there is a degree of uncertainty at that stage regarding potential growth rates and the possibility that they may change track and consider alternative lines of business or territories should other areas not prove as profitable as predicted.”

“That creates a huge value-add in terms of our catastrophe response capabilities — knowing that we are able to report our latest position has made a big difference on this front”
Judith Woo, Starstone

Another big outsourcing driver is the potential to free up valuable internal expertise, as Sehdev explains.
“Often, the daily churn of data processing consumes a huge amount of internal analytical resources,” she says, “and limits the opportunities for these highly skilled experts to devote sufficient time to analyzing the data output and supporting the decision-making process.”

This all-too-common data stumbling block affects not only a company’s ability to capitalize fully on its data, but also its ability to retain key analytical staff. “Companies hire highly skilled analysts to boost their data performance,” Zutshi says, “but most of their working day is taken up by data crunching. That makes it extremely challenging to retain that caliber of staff as they are massively overqualified for the role and also have limited potential for career growth.”

Other reasons for outsourcing include new model testing. It provides organizations with a sandbox testing environment to assess the potential benefits and impact of a new model on their underwriting processes and portfolio management capabilities before committing to the license fee.

The flexibility of outsourced model capabilities can also prove critical during renewal periods. These seasonal activity peaks can be factored into contracts to ensure that organizations are able to cope with the spike in data analysis required as they reanalyze portfolios, renew contracts, add new business and write off old business.

“At RMS Analytical Services,” Zutshi explains, “we prepare for data surge points well in advance.
We work with clients to understand the potential size of the analytical spike, and then we add a factor of 20 to 30 percent to that to ensure that we have the data processing power on hand should that surge prove greater than expected.”

Things to Consider

Integrating an outsourced function into existing modeling processes can prove a demanding undertaking, particularly in the early stages where companies will be required to commit time and resources to the knowledge transfer required to ensure a seamless integration. The structure of the existing infrastructure will, of course, be a major influencing factor in the ease of transition.

“There are those companies that over the years have invested heavily in their in-house capabilities and developed their own systems that are very tightly bound within their processes,” Sehdev points out, “which can mean decoupling certain aspects is more challenging. For those operations that run much leaner infrastructures, it can often be more straightforward to decouple particular components of the processing.”

RMS Analytical Services has, however, addressed this issue and now works increasingly within the systems of such clients, rather than operating as an external function. “We have the ability to work remotely, which means our teams operate fully within their existing framework. This removes the need to decouple any parts of the data chain, and we can fit seamlessly into their processes.”

This also helps address any potential data transfer issues companies may have, particularly given increasingly stringent information management legislation and guidelines.

There are a number of factors that will influence the extent to which a company will outsource its modeling function. Unsurprisingly, smaller organizations and startup operations are more likely to take the fully outsourced option, while larger companies tend to use it as a means of augmenting internal teams — particularly around data engineering.
RMS Analytical Services operates several engagement models. Managed services are based on annual contracts governed by volume for data engineering and risk analytics. On-demand services are available for one-off risk analytics projects, renewals support, bespoke analysis such as event response, and new IP adoption. “Modeler down the hall” is a third option that provides ad hoc work, while the firm also offers consulting services around areas such as process optimization, model assessment and transition support.

Making the Transition Work

Starstone Insurance, a global specialty insurer providing a diversified range of property, casualty and specialty insurance to customers worldwide, has been operating an outsourced modeling function for two and a half years.

“My predecessor was responsible for introducing the outsourced component of our modeling operations,” explains Judith Woo, head of exposure management at Starstone. “It was very much a cost-driven decision as outsourcing can provide a very cost-effective model.”

The company operates a hybrid model, with the outsourced team working on most of the pre- and post-bind data processing, while its internal modeling team focuses on the complex specialty risks that fall within its underwriting remit.

“The volume of business has increased over the years as has the quality of data we receive,” she explains. “The amount of information we receive from our brokers has grown significantly. A lot of the data processing involved can be automated and that allows us to transfer much of this work to RMS Analytical Services.”

On a day-to-day basis, the process is straightforward, with the Starstone team uploading the data to be processed via the RMS data portal. The facility also acts as a messaging function with the two teams communicating directly.
“In fact,” Woo points out, “there are email conversations that take place directly between our underwriters and the RMS Analytical Services team that do not always require our modeling division’s input.”

However, reaching this level of integration and trust has required a strong commitment from Starstone to making the relationship work.

“You are starting to work with a third-party operation that does not understand your business or its data processes. You must invest time and energy to go through the various systems and processes in detail,” she adds, “and that can take months depending on the complexity of the business.

“You are essentially building an extension of your team, and you have to commit to making that integration work. You can’t simply bring them in, give them a particular problem and expect them to solve it without there being the necessary knowledge transfer and sharing of information.”

Her internal modeling team of six has access to an outsourced team of 26, she explains, which greatly enhances the firm’s data-handling capabilities.

“With such a team, you can import fresh data into the modeling process on a much more frequent basis, for example. That creates a huge value-add in terms of our catastrophe response capabilities — knowing that we are able to report our latest position has made a big difference on this front.”

Creating a Partnership

As with any working partnership, the initial phases are critical as they set the tone for the ongoing relationship.

“We have well-defined due diligence and transition methodologies,” Zutshi states. “During the initial phase, we work to understand and evaluate their processes.
We then create a detailed transition methodology, in which we define specific data templates, establish monthly volume loads, lean periods and surge points, and put in place communication and reporting protocols.”

At the end, both parties have a fully documented data dictionary with business rules governing how data will be managed, coupled with the option to choose from a repository of 1,000+ validation rules for data engineering. This is reviewed on a regular basis to ensure all processes remain aligned with the practices and direction of the organization.

“Often, the daily churn of data processing consumes a huge amount of internal analytical resources and limits the opportunities to devote sufficient time to analyzing the data output”
— Neetika Kapoor Sehdev, RMS

Service level agreements (SLAs) also form a central tenet of the relationship, as do stringent data compliance procedures.

“Robust data security and storage is critical,” says Woo. “We have comprehensive NDAs [non-disclosure agreements] in place that are GDPR compliant to ensure that the integrity of our data is maintained throughout. We also have stringent SLAs in place to guarantee data processing turnaround times, although you need to agree on a reasonable time period reflecting the data complexity and also when it is delivered.”

According to Sehdev, most SLAs that the analytical team operates require a 24-hour data turnaround, rising to 48-72 hours for more complex data requirements, but clients are able to set priorities as needed.

“However, there is no point delivering on turnaround times,” she adds, “if the quality of the data supplied is not fit for purpose. That’s why we apply a number of data quality assurance processes, which means that our first-time accuracy level is over 98 percent.”

The Value-Add

Most clients of RMS Analytical Services have outsourced modeling functions to the division for over seven years, with a number having worked with the team since it launched in 2004.
The decision to incorporate their services is not taken lightly given the nature of the information involved and the level of confidence required in their capabilities.

“The majority of our large clients bring us on board initially in a data-engineering capacity,” explains Sehdev. “It’s the building of trust and confidence in our ability, however, that helps them move to the next tranche of services.”

The team has worked to strengthen and mature these relationships, which has enabled them to increase both the size and scope of the engagements they undertake.

“With a number of clients, our role has expanded to encompass account modeling, portfolio roll-up and related consulting services,” says Zutshi. “Central to this maturing process is that we are interacting with them daily and have a dedicated team that acts as the primary touch point. We’re also working directly with the underwriters, which helps boost comfort and confidence levels.

“For an outsourced model function to become an integral part of the client’s team,” he concludes, “it must be a close, coordinated effort between the parties. That’s what helps us evolve from a standard vendor relationship to a trusted partner.”
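
The surge planning Zutshi describes earlier, sizing capacity to the expected analytical spike plus a further 20 to 30 percent, comes down to simple arithmetic. The Python sketch below is an illustration only: the function name, the unit (accounts per week) and the sample volume are assumptions, not RMS figures or tooling.

```python
def surge_capacity_range(expected_peak: float,
                         low_buffer: float = 0.20,
                         high_buffer: float = 0.30) -> tuple[float, float]:
    """Return (low, high) capacity targets for an expected processing peak.

    expected_peak is the anticipated spike in workload, in whatever unit the
    client and provider agree on (here assumed to be accounts per week).
    The default buffers mirror the 20 to 30 percent range quoted in the article.
    """
    if expected_peak < 0:
        raise ValueError("expected_peak must be non-negative")
    if not 0 <= low_buffer <= high_buffer:
        raise ValueError("buffers must satisfy 0 <= low_buffer <= high_buffer")
    return expected_peak * (1 + low_buffer), expected_peak * (1 + high_buffer)


# Hypothetical renewal-season peak of 1,000 accounts per week.
low, high = surge_capacity_range(1_000)
print(f"Plan for between {low:,.0f} and {high:,.0f} accounts per week")
```

Returning a range rather than a single number reflects the way the buffer is quoted in the article; a provider could equally settle on one agreed percentage per client.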

Taking Cloud Adoption to the Core

September 05, 2018

Insurance and reinsurance companies have been more reticent than other business sectors in embracing Cloud technology. EXPOSURE explores why it is time to ditch “the comfort blanket”

The main benefits of Cloud computing are well-established and include scale, efficiency and cost effectiveness. The Cloud also offers economical access to huge amounts of computing power, ideal to tackle the big data/big analytics challenge. And exciting innovations such as microservices — allowing access to prebuilt, Cloud-hosted algorithms, artificial intelligence (AI) and machine learning applications, which can be assembled to build rapidly deployed new services — have the potential to transform the (re)insurance industry.

And yet the industry has continued to demonstrate a reluctance to move its core services onto a Cloud-based infrastructure. While a growing number of insurance and reinsurance companies are using Cloud services (such as those offered by Amazon Web Services, Microsoft Azure and Google Cloud) for nonessential office and support functions, most have been reluctant to consider Cloud for their mission-critical infrastructure.

In its research of Cloud adoption rates in regulated industries, such as banking, insurance and health care, McKinsey found, “Many enterprises are stuck supporting both their inefficient traditional data-center environments and inadequately planned Cloud implementations that may not be as easy to manage or as affordable as they imagined.”

No Magic Bullet

It also found that “lift and shift” is not enough, where companies attempt to move existing, monolithic business applications to the Cloud, expecting them to be “magically endowed with all the dynamic features.”

“We’ve come up against a lot of that when explaining the difference a Cloud-based risk platform offers,” says Farhana Alarakhiya, vice president of products at RMS. “Basically, what clients are showing us is their legacy offering placed on a new Cloud platform.
It’s potentially a better user interface, but it’s not really transforming the process.” Now is the time for the market-leading (re)insurers to make that leap and really transform how they do business, she says. “It’s about embracing the new and different and taking comfort in what other industries have been able to do. A lot of Cloud providers are making it very easy to deliver analytics on the Cloud. So, you’ve got the story of agility, scalability, predictability, compliance and security on the Cloud and access to new analytics, new algorithms, use of microservices when it comes to delivering predictive analytics.” This ease to tap into highly advanced analytics and new applications, unburdened from legacy systems, makes the Cloud highly attractive. Hussein Hassanali, managing partner at VTX Partners, a division of Volante Global, commented: “Cloud can also enhance long-term pricing adequacy and profitability driven by improved data capture, historical data analytics and automated links to third-party market information. Further, the ‘plug-and-play’ aspect allows you to continuously innovate by connecting to best-in-class third-party applications.” While moving from a server-based platform to the Cloud can bring numerous advantages, there is a perceived unwillingness to put high-value data into the environment, with concerns over security and the regulatory implications that brings. This includes data protection rules governing whether or not data can be moved across borders. “There are some interesting dichotomies in terms of attitude and reality,” says Craig Beattie, analyst at Celent Consulting. “Cloud-hosting providers in western Europe and North America are more likely to have better security than (re)insurers do in their internal data centers, but the board will often not support a move to put that sort of data outside of the company’s infrastructure. 
“Today, most CIOs and executive boards have moved beyond the knee-jerk fears over security, and the challenges have become more practical,” he continues. “They will ask, ‘What can we put in the Cloud? What does it cost to move the data around and what does it cost to get the data back? What if it fails? What does that backup look like?’”

With a hybrid Cloud solution, insurers wanting the ability to tap into the scalability and cost efficiencies of a software-as-a-service (SaaS) model, but unwilling to relinquish their data sovereignty, can have dedicated resources developed in which to place customer data alongside the Cloud infrastructure. But while a private or hybrid solution was touted as a good compromise for insurers nervous about data security, these are also more costly options. The challenge is whether the end solution can match the big Cloud providers with global footprints that have compliance and data sovereignty issues already covered for their customers.

“We hear a lot of things about the Internet being cheap, but if you partially adopt the Internet and you’ve got significant chunks of data, it gets very costly to shift those back and forth,” says Beattie.

A Cloud-First Approach

Not moving to the Cloud is no longer a viable option long term, particularly as competitors make the transition and competition and disruption change the industry beyond recognition. Given the increasing cost and complexity involved in updating and linking legacy systems and expanding infrastructure to encompass new technology solutions, Cloud is the obvious choice for investment, thinks Beattie.

“If you’ve already built your on-premise infrastructure based on classic CPU-based processing, you’ve tied yourself in and you’re committed to whatever payback period you were expecting,” he says. “But predictive analytics and the infrastructure involved is moving too quickly to make that capital investment. So why would an insurer do that?
In many ways it just makes sense that insurers would move these services into the Cloud. “State-of-the-art for machine learning processing 10 years ago was grids of generic CPUs,” he adds. “Five years ago, this was moving to GPU-based neural network analyses, and now we’ve got ‘AI chips’ coming to market. In an environment like that, the only option is to rent the infrastructure as it’s needed, lest we invest in something that becomes legacy in less time than it takes to install.” Taking advantage of the power and scale of Cloud computing also advances the march toward real-time, big data analytics. Ricky Mahar, managing partner at VTX Partners, a division of Volante Global, added: “Cloud computing makes companies more agile and scalable, providing flexible resources for both power and space. It offers an environment critical to the ability of companies to fully utilize the data available and capitalize on real-time analytics. Running complex analytics using large data sets enhances both internal decision-making and profitability.” As discussed, few (re)insurers have taken the plunge and moved their mission-critical business to a Cloud-based SaaS platform. But there are a handful. Among these first movers are some of the newer, less legacy-encumbered carriers, but also some of the industry’s more established players. The latter includes U.S.-based life insurer MetLife, which announced it was collaborating with IBM Cloud last year to build a platform designed specifically for insurers. Meanwhile Munich Re America is offering a Cloud-hosted AI platform to its insurer clients. “The ice is thawing and insurers and reinsurers are changing,” says Beattie. “Reinsurers [like Munich Re] are not just adopting Cloud but are launching new innovative products on the Cloud.” What’s the danger of not adopting the Cloud? “If your reasons for not adopting the Cloud are security-based, this reason really doesn’t hold up any more. 
If it is about reliability, scalability, remember that the largest online enterprises such as Amazon, Netflix are all Cloud-based,” comments Farhana Alarakhiya. “The real worry is that there are so many exciting, groundbreaking innovations built in the Cloud for the (re)insurance industry, such as predictive analytics, which will transform the industry, that if you miss out on these because of outdated fears, you will damage your business. The industry is waiting for transformation, and it’s progressing fast in the Cloud.”

Pushing Back the Water

September 05, 2018

Flood Re has been tasked with creating a risk-reflective, affordable U.K. flood insurance market by 2039. Moving forward, data resolution that supports critical investment decisions will be key

Millions of properties in the U.K. are exposed to some form of flood risk. While exposure levels vary massively across the country, coastal, fluvial and pluvial floods have the potential to impact most locations across the U.K. Recent flood events have dramatically demonstrated this, with properties in perceived low-risk areas being nevertheless severely affected.

Before the launch of Flood Re, securing affordable household cover in high-risk areas had become more challenging and, for those impacted by flooding, almost impossible. To address this problem, Flood Re, a joint U.K. Government and insurance-industry initiative, was set up in April 2016 to help ensure available, affordable cover for exposed properties.

The reinsurance scheme’s immediate aim was to establish a system whereby insurers could offer competitive premiums and lower excesses to highly exposed households. To date it has achieved considerable success on this front. Of the 350,000 properties deemed at high risk, over 150,000 policies have been ceded to Flood Re. Over 60 insurance brands representing 90 percent of the U.K. home insurance market are able to cede to the scheme. Premiums for households with prior flood claims fell by more than 50 percent in most instances, and an excess of £250 per claim (as opposed to thousands of pounds) was set.

While there is still work to be done, Flood Re is now an effective, albeit temporary, barrier to flood risk becoming uninsurable in high-risk parts of the U.K. However, in some respects, this success could be considered low-hanging fruit.

A Temporary Solution

Flood Re is intended as a temporary solution, albeit one with a considerable lifespan.
By 2039, when the initiative terminates, it must leave behind a flood insurance market based on risk-reflective pricing that is affordable to most households. To achieve this market nirvana, it is also tasked with working to manage flood risks. According to Gary McInally, chief actuary at Flood Re, the scheme must act as a catalyst for this process. “Flood Re has a very clear remit for the longer term,” he explains. “That is to reduce the risk of flooding over time, by helping reduce the frequency with which properties flood and the impact of flooding when it does occur. Properties ought to be presenting a level of risk that is insurable in the future. It is not about removing the risk, but rather promoting the transformation of previously uninsurable properties into insurable properties for the future.” To facilitate this transition to improved property-level resilience, Flood Re will need to adopt a multifaceted approach promoting research and development, consumer education and changes to market practices to recognize the benefit. Firstly, it must assess the potential to reduce exposure levels through implementing a range of resistance (the ability to prevent flooding) and resilience (the ability to recover from flooding) measures at the property level. Second, it must promote options for how the resulting risk reduction can be reflected in reduced flood cover prices and availability requiring less support from Flood Re. According to Andy Bord, CEO of Flood Re: “There is currently almost no link between the action of individuals in protecting their properties against floods and the insurance premium which they are charged by insurers. In principle, establishing such a positive link is an attractive approach, as it would provide a direct incentive for households to invest in property-level protection. “Flood Re is building a sound evidence base by working with academics and others to quantify the benefits of such mitigation measures. 
We are also investigating ways the scheme can recognize the adoption of resilience measures by householders and ways we can practically support a ‘build-back-better’ approach by insurers.”

Modeling Flood Resilience

Multiple studies and reports have been conducted in recent years into how to reduce flood exposure levels in the U.K. However, an extensive review commissioned by Flood Re spanning over 2,000 studies and reports found that, while these help to clarify potentially appropriate measures, there is a clear lack of data on the suitability of any of these measures to support the needs of the insurance market.

A 2014 report produced for the U.K. Environment Agency identified a series of possible packages of resistance and resilience measures. The study was based on the agency’s Long-Term Investment Scenario (LTIS) model and assessed the potential benefit of the various packages to U.K. properties at risk of flooding. The 2014 study is currently being updated by the Environment Agency, with the new study examining specific subsets based on the levels of benefit delivered.

Packages considered will encompass resistance and resilience measures spanning both active and passive components. These include waterproof external walls, flood-resistant doors, sump pumps and concrete flooring. The effectiveness of each is being assessed at various levels of flood severity to generate depth damage curves.

While the data generated will have a foundational role in helping support outcomes around flood-related investments, it is imperative that the findings of the study undergo rigorous testing, as McInally explains. “We want to promote the use of the best-available data when making decisions,” he says. “That’s why it was important to independently verify the findings of the Environment Agency study.
If the findings differ from studies conducted by the insurance industry, then we should work together to understand why.”

To assess the results of key elements of the study, Flood Re called upon the flood modeling capabilities of RMS and its Europe Inland Flood High-Definition (HD) Models, which provide the most comprehensive and granular view of flood risk currently available in Europe, covering 15 countries including the U.K. The models enable the assessment of flood risk and the uncertainties associated with that risk right down to the individual property and coverage level. In addition, they provide a much longer simulation timeline, capitalizing on advances in computational power through Cloud-based computing to span 50,000 years of possible flood events across Europe, generating over 200,000 possible flood scenarios for the U.K. alone. The models also enable a much more accurate and transparent means of assessing the impact of permanent and temporary flood defenses and their role in protecting against both fluvial and pluvial flood events.

Putting Data to the Test

“The recent advances in HD modeling have provided greater transparency and so allow us to better understand the behavior of the model in more detail than was possible previously,” McInally believes. “That is enabling us to pose much more refined questions that previously we could not address.”

While the Environment Agency study provided significant data insights, the LTIS model does not incorporate the capability to model pluvial and fluvial flooding at the individual property level, he explains. RMS used its U.K. Flood HD model to conduct the same analysis recently carried out by the Environment Agency, benefiting from its comprehensive set of flood events together with the vulnerability, uncertainty and loss modeling framework. This meant that RMS could model the vulnerability of each resistance/resilience package for a particular building at a much more granular level.
RMS took the same vulnerability data used by the Environment Agency, which is relatively similar to that used within the RMS model, and ran this through the flood model to assess the impact of each of the resistance and resilience packages against a vulnerability baseline and so establish their overall effectiveness.

The results revealed a significant difference between the numbers generated by the LTIS model and those produced by the RMS Europe Inland Flood HD Models. Because the hazard data used by the Environment Agency did not include pluvial flood risk and relied on generally lower-resolution layers than those used in the RMS model, the LTIS study presented an overconcentration and hence overestimation of flood depths at the property level. As a result, the perceived benefits of the various resilience and resistance measures were underestimated: in the RMS analysis, the potential benefits attributed to each package were in some instances almost double those of the original study.

The findings can show how using a particular package across a subset of about 500,000 households in certain specific locations could achieve a potential reduction in annual average losses from flood events of up to 40 percent at a country level. This could help Flood Re understand how to allocate resources to generate the greatest potential and achieve the most significant benefit.

A Return on Investment?

There is still much work to be done to establish an evidence base for the specific value of property-level resilience and resistance measures of sufficient granularity to better inform flood-related investment decisions.

“The initial indications from the ongoing Flood Re cost-benefit analysis work are that resistance measures, because they are cheaper to implement, will prove a more cost-effective approach across a wider group of properties in flood-exposed areas,” McInally indicates.
“However, in a post-repair scenario, the cost-benefit results for resilience measures are also favorable.”

He is wary, though, about making any definitive statements at this early stage based on the research to date.

“Flood by its very nature includes significant potential ‘hit-and-miss factors’,” he points out. “You could, for example, make cities such as Hull or Carlisle highly flood resistant and resilient, and yet neither location might experience a major flood event in the next 30 years while the Lake District and West Midlands might experience multiple floods. So the actual impact on reducing the cost of flooding from any program of investment will, in practice, be very different from a simple modeled long-term average benefit. Insurance industry modeling approaches used by Flood Re, which include the use of the RMS Europe Inland Flood HD Models, could help improve understanding of the range of investment benefit that might actually be achieved in practice.”
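
McInally's distinction between a modeled long-term average benefit and what a 30-year period might actually deliver can be illustrated with a toy simulation. This is only a sketch under invented assumptions, not the RMS model or Flood Re's method: the 3 percent annual flood probability, the lognormal loss sizes and the function names are hypothetical, and the 40 percent reduction simply echoes the upper figure quoted earlier in the article.

```python
import random
import statistics

def simulate_annual_losses(years, flood_probability, rng):
    """Toy loss history: at most one flood per year, lognormal loss size.

    Both the frequency and the severity distribution are assumptions
    made for illustration only.
    """
    losses = []
    for _ in range(years):
        if rng.random() < flood_probability:
            losses.append(rng.lognormvariate(0.0, 1.0))
        else:
            losses.append(0.0)
    return losses

def window_benefits(losses, reduction, window):
    """Loss avoided in each non-overlapping window of `window` years."""
    benefits = []
    for start in range(0, len(losses) - window + 1, window):
        benefits.append(reduction * sum(losses[start:start + window]))
    return benefits

rng = random.Random(7)                      # fixed seed for repeatability
losses = simulate_annual_losses(50_000, 0.03, rng)
reduction = 0.40                            # assumed share of loss avoided

# Long-term average view: one number per year, scaled to 30 years.
expected_30yr = 30 * reduction * statistics.mean(losses)

# Realized view: what each individual 30-year period would have saved.
benefits = window_benefits(losses, reduction, 30)

print("modeled 30-year average benefit:", round(expected_30yr, 3))
print("smallest realized 30-year benefit:", round(min(benefits), 3))
print("median realized 30-year benefit:", round(statistics.median(benefits), 3))
print("largest realized 30-year benefit:", round(max(benefits), 3))
```

Many of the 30-year windows in such a run contain no flood at all and so show no benefit, while a few show several times the average, which is the "hit-and-miss" spread the long-term average hides.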

Are We Moving Off The Baseline?

September 05, 2018

How is climate change influencing natural perils and weather extremes, and what should reinsurance companies do to respond?

Reinsurance companies may feel they are relatively insulated from the immediate effects of climate change on their business, given that most property catastrophe policies are renewed on an annual basis. However, with signs that we are already moving off the historical baseline when it comes to natural perils, there is evidence to suggest that underwriters should already be selectively factoring the influence of climate change into their day-to-day decision-making.

Most climate scientists agree that some of the extreme weather anticipated by the United Nations Intergovernmental Panel on Climate Change (IPCC) in 2013 is already here and can be linked to climate change in real time via the burgeoning field of extreme weather attribution.

“It’s a new area of science that has grown up in the last 10 to 15 years,” explains Dr. Robert Muir-Wood, chief research officer at RMS. “Scientists run two climate models for the whole globe, both of them starting in 1950. One keeps the atmospheric chemistry static since then, while the other reflects the actual increase in greenhouse gases. By simulating thousands of years of these alternative worlds, we can find the difference in the probability of a particular weather extreme.”

For instance, climate scientists have run their models in an effort to determine how much the intensity of the precipitation that caused such devastating flooding during last year’s Hurricane Harvey can be attributed to anthropogenic climate change. Research conducted by scientists at the World Weather Attribution (WWA) project has found that the record rainfall produced by Harvey was made at least three times more likely by the influence of global warming.
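
The two-world comparison Muir-Wood describes is often summarized as a probability ratio: how often an extreme occurs in the simulated world with greenhouse gas increases, divided by how often it occurs in the world without them. The sketch below illustrates only that arithmetic. It uses synthetic numbers rather than climate model output, and the distributions, threshold and function names are assumptions, not the WWA method.

```python
import random

def exceedance_probability(samples, threshold):
    """Fraction of simulated years whose extreme exceeds the threshold."""
    return sum(1 for value in samples if value > threshold) / len(samples)

def probability_ratio(factual, counterfactual, threshold):
    """How many times more likely the extreme is in the factual world."""
    p_factual = exceedance_probability(factual, threshold)
    p_counterfactual = exceedance_probability(counterfactual, threshold)
    if p_counterfactual == 0:
        raise ValueError("extreme never occurs in the counterfactual sample")
    return p_factual / p_counterfactual

rng = random.Random(42)   # fixed seed so the run is repeatable
years = 20_000            # simulated years per world (assumed)

# Hypothetical annual-maximum rainfall totals: the "factual" world is
# simply shifted wetter than the static-chemistry "counterfactual" world.
counterfactual = [rng.gauss(100.0, 20.0) for _ in range(years)]
factual = [rng.gauss(110.0, 20.0) for _ in range(years)]

threshold = 150.0         # the extreme of interest (assumed)
ratio = probability_ratio(factual, counterfactual, threshold)
print("probability ratio:", round(ratio, 2))
```

A ratio of three would correspond to an event made three times more likely; real attribution studies also report the uncertainty range around such a figure.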
This suggests that, for certain perils and geographies, reinsurers need to be considering the implications of an increased potential for certain climate extremes in their underwriting. “If we can’t rely on the long-term baseline, how and where do we modify our perspective?” asks Muir-Wood. “We need to attempt to answer this question peril by peril, region by region and by return period. You cannot generalize and say that all perils are getting worse everywhere, because they’re not. In some countries and perils there is evidence that the changes are already material, and then in many other areas the jury is out and it’s not clear.”

Keeping Pace With the Change

While the last IPCC Assessment Report (AR5) was published in 2014 (the next is due in 2021), there is some consensus on how climate change is beginning to influence natural perils and climate extremes. Many regional climates naturally have large variations at interannual and even interdecadal timescales, which makes observation of climate change, and validation of predictions, more difficult.

“There is always going to be uncertainty when it comes to climate change,” emphasizes Swenja Surminski, head of adaptation research at the Grantham Research Institute on Climate Change and the Environment, part of the London School of Economics and Political Science (LSE).
“But when you look at the scientific evidence, it’s very clear what’s happening to temperature, how the average temperature is increasing, and the impact that this can have on fundamental things, including extreme events.” According to the World Economic Forum’s Global Risks Report in 2018, “Too little has been done to mitigate climate change and … our own analysis shows that the likelihood of missing the Paris Agreement target of limiting global warming to two degrees Celsius or below is greater than the likelihood of achieving it.” The report cites extreme weather events and natural disasters as the top two “most likely” risks to happen in the next 10 years and the second- and third-highest risks (in the same order) to have the “biggest impact” over the next decade, after weapons of mass destruction. The failure of climate change mitigation and adaptation is also ranked in the top five for both likelihood and impact. It notes that 2017 was among the three hottest years on record and the hottest ever without an El Niño. It is clear that climate change is already exacerbating climate extremes, says Surminski, causing dry regions to become drier and hot regions to become hotter. “By now, based on our scientific understanding and also thanks to modeling, we get a much better picture of what our current exposure is and how that might be changing over the next 10, 20, even 50 to 100 years,” she says. “There is also an expectation we will have more freak events, when suddenly the weather produces really unexpected, very unusual phenomena,” she continues. “That’s not just climate change. It’s also tied into El Niño and other weather phenomena occurring, so it’s a complex mix. 
But right now, we’re in a much better position to understand what’s going on and to appreciate that climate change is having an impact.”

Pricing for Climate Change

For insurance and reinsurance underwriters, the challenge is to understand the extent to which we have already deviated from the historical record and to manage and price for that appropriately. It is not an easy task given the inherent variability in existing weather patterns, according to Andy Bord, CEO of Flood Re, the U.K.’s flood risk pool, which has a panel of international reinsurers.

“The existing models are calibrated against data that already includes at least some of the impact of climate change,” he says. “Some model vendors have also recently produced models that aim to assess the impact of climate change on the future level of flood risk in the U.K. We know at least one larger reinsurer has undertaken their own climate change impact analyses.

“We view improving the understanding of the potential variability of weather given today’s climate as being the immediate challenge for the insurance industry, given the relatively short-term view of markets,” he adds.

The need for underwriters to appreciate the extent to which we may have already moved off the historical baseline is compounded by the conflicting evidence on how climate change is influencing different perils, and by the counterinfluence or confluence, in many cases, of naturally occurring climate patterns, such as El Niño and the Atlantic Multidecadal Oscillation (AMO).

The past two decades have seen below-normal European windstorm activity, for instance, and evidence is building that the unprecedented reduction in Arctic sea ice during the autumn months is the main cause, according to Dr. Stephen Cusack, director of model development at RMS.
“In turn, the sea ice declines have been driven both by the ‘polar amplification’ aspect of anthropogenic climate change and the positive phase of the AMO over the past two decades, though their relative roles are uncertain.

“The (re)insurance market right now is saying, ‘Your model has higher losses than our recent experience.’ And what we are saying is that the recent lull is not well understood, and we are unsure how long it will last. Though for pricing future risk, the question is when, and not if, the rebound in European windstorm activity happens. Regarding anthropogenic climate change, other mechanisms will strengthen and counter the currently dominant ‘polar amplification’ process. Also, the AMO goes into positive and negative phases,” he continues. “It’s been positive for the last 20 to 25 years and that’s likely to change within the next decade or so.”

And while European windstorm activity has been somewhat muted by the AMO, the same cannot be said for North Atlantic hurricane activity. Hurricanes Harvey, Irma and Maria (HIM) caused an estimated US$92 billion in insured losses, making 2017 the second costliest North Atlantic hurricane season, according to Swiss Re Sigma. “The North Atlantic seems to remain in an active phase of hurricane activity, irrespective of climate change influences that may come on top of it,” the study states.

While individual storms are never caused by one factor alone, stressed the Sigma study, “Some of the characteristics observed in HIM are those predicted to occur more frequently in a warmer world.” In particular, it notes the high level of rainfall over Houston and hurricane intensification.
While storm surge was only a marginal contributor to the losses from Hurricane Harvey, Swiss Re anticipates the probability of extreme storm surge damage in the northeastern U.S. due to higher seas will almost double in the next 40 years.

“From a hurricane perspective, we can talk about the frequency of hurricanes in a given year related to the long-term average, but what’s important from the climate change point of view is that the frequency and the intensity on both sides of the distribution are increasing,” says Dr. Pete Dailey, vice president at RMS. “This means there’s more likelihood of quiet years and more likelihood of very active years, so you’re moving away from the mean, which is another way of thinking about moving away from the baseline.

“So, we need to make sure that we are modeling the tail of the distribution really well, and that we’re capturing the really wet years, the years where there’s a higher frequency of torrential rain in association with events that we model.”

The Edge of Insurability

Over the long term, the industry likely will be increasingly insuring the impact of anthropogenic climate change. One question is whether we will see “no-go” areas in the future, where the risk is simply too high for insurance and reinsurance companies to take on.

As Robert Muir-Wood of RMS explains, there is often a tension between the need for (re)insurers to charge an accurate price for the risk and the political pressure to ensure cover remains available and affordable. He cites the community at Queen’s Cove in Grand Bahama, where homeowners were unable to secure insurance given the repeated storm surge flood losses their properties have sustained over the years from a number of hurricanes. With owners unable to maintain a mortgage without insurance, properties were left to fall into disrepair. “Natural selection came up with a solution,” says Muir-Wood, whereby some homeowners elevated buildings on concrete stilts, thereby making them once again insurable.
“In high-income, flood-prone countries, such as Holland, there has been sustained investment in excellent flood defenses,” he says. “The challenge in developing countries is there may not be the money or the political will to build adequate flood walls. In a coastal city like Jakarta, Indonesia, where the land is sinking as a result of pumping out the groundwater, it’s a huge challenge.

“It’s not black and white as to when it becomes untenable to live somewhere. People will find a way of responding to increased incidence of flooding. They may simply move their life up a level, as already happens in Venice, but insurability will be a key factor and accommodating the changes in flood hazard is going to be a shared challenge in coastal areas everywhere.”

Political pressure to maintain affordable catastrophe insurance was a major driver of the U.S. residual market, with state-backed Fair Access to Insurance Requirements (FAIR) plans providing basic property insurance for homes that are highly exposed to natural catastrophes. Examples include the California Earthquake Authority, Texas Windstorm Insurance Association and Florida Citizens Property Insurance Corporation (and state reinsurer, the FHCF).

However, the financial woes experienced by FEMA’s National Flood Insurance Program (NFIP), currently the principal provider of residential flood insurance in the U.S., demonstrate the difficulties such programs face in terms of being sustainable over the long term.

With the U.K.’s Flood Re scheme, investment in disaster mitigation is a big part of the solution, explains CEO Andy Bord. However, even then he acknowledges that “for some homes at the very greatest risk of flooding, the necessary investment needed to reduce risks and costs would simply be uneconomic.”

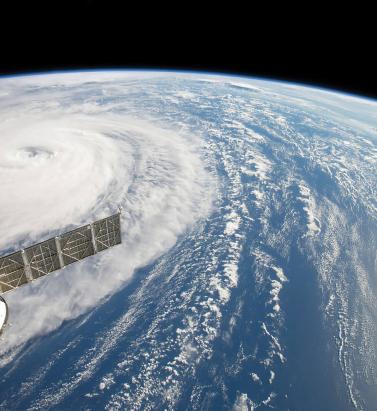

Making it Clear

September 05, 2018

Pete Dailey of RMS explains why model transparency is critical to client confidence

View of Hurricane Harvey from space

In the aftermath of Hurricanes Harvey, Irma and Maria (HIM), there was much comment on the disparity among the loss estimates produced by model vendors. Concerns have been raised about significant outlier results released by some modelers.

“It’s no surprise,” explains Dr. Pete Dailey, vice president at RMS, “that vendors who approach the modeling differently will generate different estimates. But rather than pushing back against this, we feel it’s critical to acknowledge and understand these differences.

“At RMS, we develop probabilistic models that operate across the full model space and deliver that insight to our clients. Uncertainty is inherent within the modeling process for any natural hazard, so we can’t rely solely on past events, but rather simulate the full range of plausible future events.”

There are multiple components that contribute to differences in loss estimates, including the scientific approaches and technologies used and the granularity of the exposure data.

“As modelers, we must be fully transparent in our loss-estimation approach,” he states. “All apply scientific and engineering knowledge to detailed exposure data sets to generate the best possible estimates given the skill of the model. Yet the models always provide a range of opinion when events happen, and sometimes that is wider than expected. Clients must know exactly what steps we take, what data we rely upon, and how we apply the models to produce our estimates as events unfold. Only then can stakeholders conduct the due diligence to effectively understand the reasons for the differences and make important financial decisions accordingly.”

Outlier estimates must also be scrutinized in greater detail.
“There were some outlier results during HIM, and particularly for Hurricane Maria. The onus is on the individual modeler to acknowledge the disparity and be fully transparent about the factors that contributed to it. And most importantly, how such disparity is being addressed going forward,” says Dailey. “A ‘big miss’ in a modeled loss estimate generates market disruption, and without clear explanation this impacts the credibility of all catastrophe models. RMS models performed quite well for Maria. One reason for this was our detailed local knowledge of the building stock and engineering practices in Puerto Rico. We’ve built strong relationships over the years and made multiple visits to the island, and the payoff for us and our client comes when events like Maria happen.” As client demand for real-time and pre-event estimates grows, the data challenge placed on modelers is increasing. “Demand for more immediate data is encouraging modelers like RMS to push the scientific envelope,” explains Dailey, “as it should. However, we need to ensure all modelers acknowledge, and to the degree possible quantify, the difficulties inherent in real-time loss estimation — especially since it’s often not possible to get eyes on the ground for days or weeks after a major catastrophe.” Much has been said about the need for modelers to revise initial estimates months after an event occurs. Dailey acknowledges that while RMS sometimes updates its estimates, during HIM the strength of early estimates was clear. “In the months following HIM, we didn’t need to significantly revise our initial loss figures even though they were produced when uncertainty levels were at their peak as the storms unfolded in real time,” he states. “The estimates for all three storms were sufficiently robust in the immediate aftermath to stand the test of time. 
While no one knows what the next event will bring, we’re confident our models and, more importantly, our transparent approach to explaining our estimates will continue to build client confidence.”