Tag: Solvency II

Outcomes from The Solvency II “Lessons Learned” ...

For insurers and consumers in the European Union, 2016 is a key year, since it is when the industry gets real experience of Solvency II, the…

Integrating Catastrophe Models Under Solvency II

In terms of natural catastrophe risk, ensuring capital adequacy and managing an effective risk management framework under Solvency II,…

European Windstorm: Such A Peculiarly Uncertain ...

Europe’s windstorm season is upon us. As always, the risk is particularly uncertain, and with Solvency II due smack in the middle of the…

Exposure Data: The Undervalued Competitive Edge

High-quality catastrophe exposure data is key to a resilient and competitive insurer’s business. It can improve a wide range of risk…

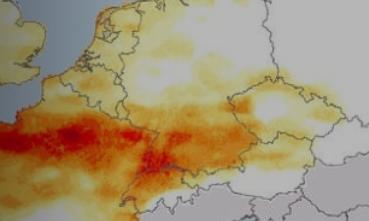

The Challenges Around Modeling European Windstor...

In December I wrote about Lothar and Daria, a cluster of windstorms that emphasized the significance of ‘location’ when assessing windstorm…

Matching Modeled Loss Against Historic Loss in E...

To be Solvency II compliant, re/insurers must validate the models they use, which can include comparisons to historical loss experience. In…